| Release Date | Version |
|---|---|
| 04/28/2025 | 1.2.2-25000 |

- What's New in Superna Data Orchestration Release 1.2.2

- New in this release:

- Usability Enhancement:

- Bug Fixes:

- Updating Java dependencies

- What's New in Superna Data Orchestration Release 1.2.1

- New in this release:

- SupernaOne Integration

- Feature Enhancement

- Bug Fixes

- Archive Engine Bug Fixes

- Usability Enhancement

- CBDM Feature Enhancement

- CBDM Bug Fixes

- What’s New in Superna Eyeglass Golden Copy Release 1.1.22

- New in Superna Eyeglass Golden Copy Release 1.1.21 (12/14/2023)

- New in 1.1.21

- Usability Enhancements

- Feature Enhancements

- Better user job monitoring with activefiles summary integrated to job view small and large files

- Job view changelist/layers enhancement

- Fixed in 1.1.21

- T14560 Cloud Browser Access with macOS

- T17893 searchctl archivedfolders history incorrectly shows recall job as job type FULL

- T19415 Recall stats incorrectly show errors for folder object which store ACLs

- T20500 'jobs view' for recall job not incrementing metadata-related stats

- T21778 Issues with Export for Recall job with meta-data

- GC-574 Recall stats are empty when the --older-than and --newer-than options are used

- T19012 Recall of files from Azure fails for files uploaded with Golden Copy earlier than 1.1.4-21050

- T23315 Recall --older-than option creates folder structure when there is no matching objects

- T23035 Jobs view does not track delete from source progress

- New in Cloud Browser for Data Mobility 1.1.21

- New in 1.1.21

- Feature Enhancements

- Concurrent Cloud Mover Jobs

- Fixed in 1.1.21

- CBDM-390 Cloud to Premise - A Copy/Move job run with "Email on job completion" unchecked should not send an email for that job

- CBDM-171 CBDM Browser - List limited to 1000 objects and possibly blocking access to lower 'paths'

- GC-2410 Recall - Errors from DENY (to download) bucket policy being counted as Skips

- CBDM-753 Pinned archiveworker nodes intermittently not assigned to Kafka consumer group `archiveworker`

- CBDM-761 Round-robin and Pinned archiveworkers behaviour in parallel jobs

- CBDM-407 Parallel CBDM job stats seem to be incorrect

- New in Superna Eyeglass Golden Copy Release 1.1.20 (10/05/2023)

- New in 1.1.20

- Usability Enhancements

- Feature Enhancements

- Fixed in 1.1.20

- GC-2057 Job marked as a FAILURE due to Snapshot Extend step

- GC-2614 Archivedfolder Checksum Value Reverting to Default(Off) After Upgrade

- GC-2409 UI - Recall gauges - Skips counting against success %

- New in Cloud Browser for Data Mobility 1.1.20 (10/05/2023)

- New in 1.1.20

- Feature Enhancements

- New in Superna Eyeglass Golden Copy Release 1.1.19 (09/01/2023)

- New in 1.1.19-23189

- Usability Enhancements

- Feature Enhancements

- Fixed in 1.1.19-23189

- GC-2404 Archivedfolders checksum setting resets to Default (Off) after a VM restart

- GC-2405 UI - Archivedfolders Tab - Edit not populating `Tier`, which seems to be a Requirement

- GC-1897 Pipeline-Recall Does not skip files with the skip existent export

- GC-2035 Job Cancel - Job should not be a SUCCESS if cancelled at any step

- New in Cloud Browser for Data Mobility 1.1.19-23189 (09/01/2023)

- Feature Enhancements

- Fixed in Cloud Browser for Data Mobility 1.1.19-23189

- CBDM-528 Email - SMB share path uses an Incorrect folder separator for the selected sub-folders

- CBDM-532 Email Needs Attention Causing Job Failure due to Unconfigured SMTP Server

- CBDM-609 CBDM Browser - Buckets region being labelled incorrectly if there are other regions

- CBDM-615 Cloud to Premise - Submit SMB Target button may be obscured when the path is too long

- CBDM-614 Cloud Browser - Unable to browse buckets in regions other than the one specified in Credentials

- CBDM-591 Skipping incremented from failing to move files to SMB due to the limit

- Supported OneFS releases

- New in Superna Eyeglass Golden Copy Release 1.1.17 (07/27/2023)

- Fixed in 1.1.17

- GC-1774 Json and Html report of recall/pipeline recall job is not showing accurate results for skipped files.

- GC-2254 Upgrade from 1.1.9 - After upgrading, the running job does not continue to run

- GC-1687 Cannot add an AD user as a GC admin when the username contains certain special characters

- GC-2399 Grafana - All Time Gauges - Negative % number

- GC-2237 Issue with login when there are spaces in the AD user name

- GC-1994 Pre Win2k usernames able to be added to Admin but fail at login

- Fixed in Cloud Browser for Data Mobility 1.1.17

- CBDM-412 Cloud to Premise - Parallel Jobs - Issues including Objects incorrectly Moved into a single SMB path

- CBDM-428 CBDM - Files are recalled to the wrong path: `ifs/fromcloud/BDM` instead of `ifs/fromcloud/CBDM`

- What’s New in Superna Eyeglass Golden Copy Release 1.1.16

- Fixed in 1.1.16

- GC-1879 Auto ReRun - Add Stats for job `ReRun - Folders` and Stats Issues

- GC-1905 Pipeline Recall with `--trash-after-recall` does not delete original files

- GC-1697 UI - Editing archivedfolders after the first Edit does not close the Edit box - Changes applied

- GC-1748 ReRun of Incremental Rename does not work because information on the previous name is missing

- New in Superna Eyeglass Golden Copy Release 1.1.15 (05/03/2023)

- Fixed in 1.1.15

- GC-1525 UI - Language change function is not working

- CBDM-235 Cloud to Premise - Jobs History - Skipped bytes added to Success bytes

- CBDM-272 Job is marked as done without Recall if the Email is unchecked

- GC-1987 UI - Missing Edit and Add archivedfolders

- GC-1205 Backup and recover functionality - Not able to fully recover the system

- GC-1880 When Auto-ReRun is triggered it can use the wrong job data (of another archivedfolders)

- GC-1749 Longpath - Incremental Rename did not update the JSON content - Recall downloaded old name

- New in Superna Eyeglass Golden Copy Release 1.1.14 (04/04/2023)

- Fixed in 1.1.14

- GC-1750 Longpath - Incremental Modify (Content) did not update the inode for Hardlink

- CBDM-234 Cloud to Premise - Jobs History and Running - Errors not counted

- CBDM-262 Local admin is seeing a bucket without adding Credential

- GC-1700 Remove suffix of downloaded files

- New in 1.1.14

- Version Based Recall

- Usability Enhancements

- Cloud Browser for Data Mobility 1.1.14

- Usability Enhancements CBDM

- New in Superna Eyeglass Golden Copy Release 1.1.13 (03/06/2023)

- Fixed in 1.1.13 Release

- GC-1510 Non-admin is able to see all archivedfolders

- CBDM-213 Cloud to Premise - Jobs data for Running and History should show Target SMB

- CBDM-190 Cloud to Premise - Keep objects with the same names during Staging

- GC-970 Long Path Support - Rename fails possibly due to S3 Error that key is too long

- New in 1.1.13-23045

- Supported Versions of S3 Targets

- Supported S3 Protocol Targets

- End of Life Notifications

- Deprecation Notifications

- Azure default Tier change

- HTML Summary Report to be deprecated

- Support Removed / Deprecated in this Release

- Recall the "metadata" option

- New in Superna Eyeglass Golden Copy Release 1.1.12 (02/03/2023)

- Fixed in 1.1.12 release:

- GC-1078 UI - Pause and Start buttons have no action

- GC-1513 Golden Copy special character regression for ECS, AWS

- GC-1070 Job ID is lost after upgrade to 1.1.11, so jobs running, jobs view, job history and folder stats are unavailable

- GC-1069 Multi part files recall issue - Recall files much larger than the original

- The system view tab works

- New in Superna Eyeglass Golden Copy Release 1.1.11 (09/27/2022)

- New in 1.1.11

- GC-2 Treewalk Robustness

- GC-138 Refresh GUI pages

- GC-337 Custom Metadata from application-specific files

- GC-341 Keep full history of file and object in objects as they're moved to the cloud

- GC-373 Bugs

- GC-586 Download file(s) to user PC from Cloud Browser

- GC-762 Cloud file download from GCS for all file types

- Fixed in 1.1.11

- T23283/GC-396 Golden Copy GUI fully restricted for non-admin user

- GC-562/563 Jobs view for large files is not accurate

- Not available in 1.1.11

- T17181 Archive to AWS Snowball

- T17195 Upload to Azure, Cohesity, ECS or Ceph via Proxy

- T16247 DR cluster alias / redirected recall

- Technical Advisories

- Known Issues

- Known Issues Data Orchestration

- DO-1050 Some Grafana stats missing after upgrade to 1.2.2-25000

- DO-1048 No error shown for Non-Existent Subfolder in Recall Operation

- Known Issues Archiving

- T16629 Azure upload error where name contains %

- T18979 Incremental Archive issues for files with Zone.Identifier suffix

- T19218 Setting to enable delete for Incremental archive not working

- T19441/GC-543 Move/Delete operation in a single incremental sync orphans data in S3 target

- T21370 No message when snapshot for upload from snapshot is not in Golden Copy inventory

- T21745 s3update with a large number of changes may incorrectly delete folder objects with ACL information

- T22925 Cannot use existing folder to copy from DR cluster

- T23126 Issues with full and incremental jobs for folders when S3 prefix is used

- GC-675 Archive job does not finish when tree walk fails at the beginning

- General Reporting Issues

- T17647 After restart, stats, jobs view, export report and cloud stats are not consistent

- T17932 searchctl jobs view or folder stats may not report on a small percentage of uploaded files

- T19136 Jobs View / Export Report do not correctly calculate job run time if job is interrupted

- T19466 Statistics may show more than 100% archived/attempted after a cluster down/up

- T21186 Statistics may not be accurate when there is a rename operation

- T21257 The 'file change count' column in jobs running always shows 0 for incremental archive

- T21572 Export report for folder with multiple jobs may only produce 1 report

- T21668 Copy from flat file does not report errors

- T22523 Export command error json files may be missing files

- T23450 Jobs Summary email report always shows running job

- GC-385 jobs view and stats view incorrect for paused job that is resumed after a cluster down/up

- GC-383 Pipeline recall stats may be incorrect

- Recall Reporting Issues

- T16960 Rerun recall job overwrites export report

- T18535 Recall reporting issue for accepted file count / interrupted recall

- T18875/T21574 Recall stats may incorrectly show errored count

- T21561/T21577 Recall stats issues

- Known Issues Recall

- T16129 Recall from Cohesity may fail where a folder or file contains special characters

- T16550 Empty folder is recalled as a file for GCS

- T18338 Recall Rate Limit

- T18428 Recall for a target with an S3 prefix results in an extra S3 API call per folder

- T18600 Recall Job where recall path is mounted does not indicate error

- T19438 Files may not be recalled

- T19649 Meta-data not recalled where AD user/group cannot be resolved

- T21626 Recall Job for large number of files may get stuck

- T23312 Recall --start-time and --end-time options do not recall any data

- GC-652 Recall - Stats issue with multipart files

- GC-2378 Pipeline Recall and CBDM - job view is intermittently showing `0` recalled Bytes

- Known Issues Media Mode

- T23160 Failed link archives are not recorded in stats

- T22022 Error on incremental for link object with suffix

- T22488 Object storage still contains file after all hard links and original file have been deleted

- T22484 Deleting original hard link file may result in multiple copies of the file in object storage

- Known Issues Pipeline

- TT22539 Move to Trash / Delete configuration is not working

- Known Issues Golden Copy GUI

- T23150 Error on adding or editing archivefolder has no details

- T23076 No validations when editing folder from Golden Copy GUI

- T22851/T23145 Golden Copy GUI Folder Management GUI Issues

- T23002 Golden Copy Cloud Browser filters not displayed

- T22385 Golden Copy GUI shows Search product on some pages

- GC-607 Cloud Browser - Support self-signed certificates on local targets

- Known Issues General & Administration

- T16640 searchctl schedules uses UTC time

- T21073 Phone home may fail when archive folder path contains characters 'id'

- T22913 Licensing of Powerscale can be lost

- T23382 Verbose archived folders list shows properties no longer used

- GC-1119 Some file uploads fail with errors during the upgrade

- GC-2233 GoldenCopy - Running a second job with skips enabled causes the job not to complete

- GC-2387 Incremental - Count Accepted differs from Archived, possibly due to File_Rename

- GC-2692 Large Changelists Max Out Isilongateway RAM

- DO-425 Grafana data not collected for CPU and memory usage

- DO-424 CBDM Move job gets stuck and does not complete

- DO-431 Archiveworker Restarting During Large File Processing on GCS

- Known Limitations

- T15251 Upload from snapshot requires snapshot to be in Golden Copy Inventory

- T15752 Cancel Job does not clear cached files for processing

- T16429 Golden Copy Archiving rate fluctuates

- T16628 Upgrade to 1.1.3 may result in second copy of files uploaded for Azure

- HTML report cannot be exported twice for the same job

- T16250 AWS accelerated mode is not supported

- T16646 Golden Copy Job status

- T17173 Debug logging disk space management

- T18640 searchctl archivedfolders errors supported output limit

- T19305 Queued jobs are not managed

- T20379 Canceled archive job continues upload

- GC-1504 Parallel jobs limited to 10 at a time

- GC-1527 Cloud Browser - Single File Download - Support for downloading symlinks that point to a hardlink

- GC-1625 Incremental Delete not working for deleted symlink, possibly due to suffix

- GC-2789 3 Nodes: Folder stats empty for `searchctl stats view --folder <folderID>` and `searchctl archivedfolder stats --id <folderID>`

- GC-2804 Phonehome Logs Upload Issue on HyperV OVF 3-Node

- GC-2450 Backup and Restore Kafka topic's content

- Fast Incremental Known Limitations

- Move/Rename identification and management in object storage known limitations

- T20868 Cannot run incremental update for same folder to multiple targets

- T21258 Version based recall uses UTC time for inputs

- T21759 Unable to export recall job multiple times

- T21925 Export reporting limit

- T22680 Media Mode Hardlink mode may copy more than 1 copy of the file

- T22469 Media mode does not copy broken symbolic links

- T22630 Media mode may orphan hardlink objects when all Golden Copy nodes are not able to archive

- T23130 Media mode has no stats for broken symlink

- GC-433 PowerScale may return success for recalled file even if PowerScale blocks saving the file

- GC-459 Recall error for multi-part file leaves incomplete file on file system

- GC-450 Golden Copy - Traceback on cluster up

- GC-446 Stats conversion from bytes incorrect

- GC-874 Audit - Job is stalled after hitting exactly 50k files

- GC-938 Incremental - Only the original hardlink is updated possibly due to changelist limitation

- GC-1020 Cloud Browser - Unable to download file from Wasabi

- GC-1308 Increase/Customize/Remove the 1000 error limit

- Cloud Browser for Data Mobility

- Known Issues Cloud Browser for Data Mobility

- CBDM-168 Wasabi - Unable to add archivedfolders from CLI without using https://

- CBDM-512 CSV Export - Special characters possibly causing columns to be populated incorrectly

- CBDM-322 Cloud to Premise - Recall phase is not skipping existing files

- CBDM-502 Cloud to Premise - Staged files should be deleted from the bucket even without the recyclebucket

- CBDM-503 Unable to modify to remove recyclebucket from the folder

- CBDM-383 Cloud to Premise - Stats differences between Staging and Recall

- CBDM-227 Block adding email recipient if no channel

- CBDM-692 CBDM Job Fails When Selecting Entire Bucket

- CBDM-693 Staging Path Not Cleared on Skipped Job: Pending Issue Resolution

- CBDM-744 1/4 parallel jobs failed at Staging due to trying to create `-CBDM` topics

- Known Limitations Cloud Browser for Data Mobility

- CBDM-543 Cloud to Premise - Stats for Move step

- CBDM-631 Parallel Job - Error `Fail to lock` when the same user downloads the same data into the same Target

- CBDM-777 Staging cannot use s3 keys that are over the AWS S3 key limit (1024 bytes)

- GC-412 Archive job does not handle a block "b" special file

What's New in Superna Data Orchestration Release 1.2.2

New in this release:

Usability Enhancement:

All licenses are now successfully removed after running the uninstall command on all licenses (DO-1038)

Bug Fixes:

Fixed an issue where SMTP was not functioning (DO-1033)

Fixed a Redis "class definition not found" error that caused jobs to fail when Redis is enabled (DO-741)

Updating Java dependencies

Cleaned up Java dependencies and removed redundant imports of Java libraries.

What's New in Superna Data Orchestration Release 1.2.1

New in this release:

SupernaOne Integration

Added integration with SupernaOne

Sends GC metrics, such as bytes archived and files archived, to SupernaOne

- Registers GC appliances with SupernaOne so they can be visualized in the SupernaOne dashboard

Feature Enhancement

- Added a new row in job stats for hardlink recalls that shows the total size of files accepted/recalled with hardlinks included, and a new row that shows the size of accepted/recalled files that are not hardlinks.

- Added a new license view that shows the remaining license capacity; a positive value is shown in green

- Added tracking of the capacity limit for regular GC licenses

Bug Fixes

Fixed a bug where pipeline recall failed during a rerun job (DO-152)

Fixed a single node reporting errors about multiple zk nodes (DO-156)

Fixed an issue where some job history was missing after an upgrade (DO-59)

Fixed an issue where scheduled incremental jobs were cancelled until a full job was run (DO-53)

Fixed an issue where the trash-after-recall option did not move files to the recycle bucket (DO-161)

Fixed an issue where the log parser log showed duplicates in job history (DO-165)

Fixed a bug where files archived did not match files accepted in job stats due to file renaming (DO-109)

Fixed missing stats during archive jobs (DO-178)

Fixed a stalled job issue when processing a large number of files (DO-418)

Fixed a bug where files with special characters failed to upload for Azure jobs (DO-230)

Archive Engine Bug Fixes

Fixed an issue so that GCS folders can be edited (DO-242)

Fixed an issue where the Pipeline config wizard sometimes did not close after a folder was added (DO-511)

Fixed a bug where a cancelled dry run job caused Kafka topic lag and filled the indexworker log with error messages (DO-229)

Fixed an issue where setting --customMetaData to true caused errors in the archiveworker log (DO-233)

Fixed an Archive Engine config wizard UI issue when editing a GCS folder (DO-243)

When editing a GCS folder to add a recall folder, the folder ID field became empty and the Archive Engine Type changed to recall

Removed the tier field for ECS type folders in the pipeline config (DO-237)

Fixed an issue where empty collision folders were created for GCS jobs (DO-238)

Fixed an issue where the policy file was lost during an archive job (DO-244)

Fixed a null exception when navigating to the Archive Engine or CBDM page (DO-231)

Fixed an issue where GCAE archive byte-tracking files were not created, resulting in a License Manager container restart (DO-84)

Fixed an issue where the wrong methods were used for staging and recall (DO-239)

Fixed a UI issue where the tables did not align properly (DO-234)

Fixed a job status issue where jobs were displayed as success even though the job status was “Needs attention” (DO-235)

Fixed an issue where AE folders could not be updated when the folder type is recall

Fixed a stats issue with recall (DO-330)

Fixed an issue where the GUI did not show the error count for restore jobs (DO-329)

Usability Enhancement

Improved the error message shown for an unreachable Isilon cluster

- The generic error ("unknown error has occurred") was replaced with “could not connect to isilon cluster”

Errors that have no impact, such as creating folders that already exist during cluster up, are now suppressed.

Fixed a null pointer error when adding a license, and added a more user-friendly message after a license is added

searchctl settings config export/importconfig can be used to back up and restore GC settings, such as the admins list and email notification settings. Email channel passwords are now encrypted in the backup and settings file.

The custom metadata limit is now measured correctly for AWS S3, metadata is added before custom metadata, and the limit for Azure was updated. Previously, the ARCHIVE_LARGE_METADATA flag created a new file when a single field exceeded the 8192kb limit; it now creates a new file when the entire HTTP request to send the file exceeds 8192kb. The user gets the following warning if the ARCHIVE_LARGE_METADATA flag is not set:
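The change from a per-field check to a whole-request check can be sketched as follows. This is a minimal illustration, not Golden Copy code: it assumes the request size is approximated by its metadata headers (S3 user metadata travels as `x-amz-meta-*` headers and Azure metadata as `x-ms-meta-*`), and the function names and the `inline`/`separate_file`/`warn` labels are hypothetical. The limit is passed in rather than hard-coded.

```python
def header_bytes(name: str, value: str) -> int:
    # Size of one HTTP header line, "name: value\r\n", in UTF-8 bytes.
    return len(f"{name}: {value}\r\n".encode("utf-8"))


def any_field_over_limit(headers: dict[str, str], limit: int) -> bool:
    # Previous behaviour as described: each metadata field is checked on its own.
    return any(header_bytes(k, v) > limit for k, v in headers.items())


def request_over_limit(headers: dict[str, str], limit: int) -> bool:
    # Current behaviour as described: the combined size of all headers is checked.
    return sum(header_bytes(k, v) for k, v in headers.items()) > limit


def metadata_action(headers: dict[str, str], limit: int, large_metadata: bool) -> str:
    # Hypothetical labels: keep metadata inline, write a separate file when
    # ARCHIVE_LARGE_METADATA is set, otherwise warn.
    if not request_over_limit(headers, limit):
        return "inline"
    return "separate_file" if large_metadata else "warn"


# Two 50-character fields: neither exceeds 100 bytes alone, but together they do.
meta = {"x-amz-meta-a": "x" * 50, "x-amz-meta-b": "y" * 50}
print(any_field_over_limit(meta, 100), request_over_limit(meta, 100))  # False True
```

The example shows why the whole-request check catches cases that a per-field check misses: many small fields can still add up to an oversized request.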

The ARCHIVE_NETWORK_RATE_LIMIT_MB environment variable now limits the archive rate, and the enforced rate is more accurate
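A common way to enforce a network rate cap like the one ARCHIVE_NETWORK_RATE_LIMIT_MB describes is a token bucket. The sketch below is an illustration under assumptions, not the archiveworker's implementation: `TokenBucket` and `limiter_from_env` are hypothetical names, the value is read as megabytes per second with MB taken as MiB, and an unset or zero value is treated as no limit.

```python
import os
import time


class TokenBucket:
    """Hypothetical limiter allowing roughly `rate_mb` megabytes per second."""

    def __init__(self, rate_mb: float, clock=time.monotonic, sleep=time.sleep):
        self.rate = rate_mb * 1024 * 1024  # bytes/second; MB read as MiB (assumption)
        self.capacity = self.rate          # at most one second of burst
        self.tokens = self.capacity
        self.clock = clock
        self.sleep = sleep
        self.last = clock()

    def acquire(self, nbytes: int) -> None:
        # Refill the budget for the time elapsed since the last call.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        # Spend the budget; if it goes negative, sleep long enough to repay it.
        self.tokens -= nbytes
        if self.tokens < 0:
            self.sleep(-self.tokens / self.rate)


def limiter_from_env(env=os.environ):
    # Unset, empty or non-positive values are treated as "no limit" (assumption).
    value = float(env.get("ARCHIVE_NETWORK_RATE_LIMIT_MB") or 0)
    return TokenBucket(value) if value > 0 else None
```

An uploader would call `acquire(len(chunk))` before sending each chunk. Letting the budget go negative means a chunk larger than one second's budget still goes through, while the following chunk is delayed long enough to keep the average rate at the cap.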

Added special character support for folder names (DO-240)

Added dry run buttons for the quick setup path on the Archive Engine page (DO-236)

Added support for the Azure Archive storage tier (DO-319)

Added a check in the Azure get mapper to ignore objects in the Archive tier (DO-303)

Added a restore indicator for the Azure Archive tier (DO-310)

Added a check in the restore process to see if data is already staged for recall (DO-307)

Included policy job stats in the Active Jobs GUI (DO-340)

Added a GUI for policy creation (DO-339)

Added a check of the policy path when adding a policy to a folder with the smart-archiver-recall-folder flag set (DO-341)

CBDM Feature Enhancement

The `archivedfolders list --verbose` command now shows a new row with archiveworker node information

CBDM jobs use round-robin to select pipelines; busy pipelines that are next in the rotation are now skipped if other pipelines have become free
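The pipeline selection rule can be sketched as a round-robin picker that skips busy pipelines. This is a hypothetical illustration, not the CBDM scheduler: the pipeline names, the `RoundRobinPicker` class and the `is_busy` callback are assumptions. When every pipeline is busy it falls back to plain round-robin, so jobs share a pipeline, consistent with the shared-pipeline behaviour noted under CBDM-761.

```python
from typing import Callable, Sequence


class RoundRobinPicker:
    """Hypothetical picker: round-robin order, skipping pipelines that are busy."""

    def __init__(self, pipelines: Sequence[str]):
        if not pipelines:
            raise ValueError("at least one pipeline is required")
        self.pipelines = list(pipelines)
        self.next_index = 0

    def pick(self, is_busy: Callable[[str], bool]) -> str:
        n = len(self.pipelines)
        # Try each pipeline once, starting with the next one in the rotation.
        for offset in range(n):
            i = (self.next_index + offset) % n
            if not is_busy(self.pipelines[i]):
                self.next_index = (i + 1) % n
                return self.pipelines[i]
        # Every pipeline is busy: take the next one anyway, so jobs share it.
        i = self.next_index
        self.next_index = (i + 1) % n
        return self.pipelines[i]


picker = RoundRobinPicker(["pipeline-1", "pipeline-2", "pipeline-3"])
busy = {"pipeline-1"}
print(picker.pick(lambda p: p in busy))  # pipeline-2
```

Advancing the rotation past the pipeline actually chosen (rather than past the skipped one) keeps the load spread evenly once the busy pipeline frees up.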

Added sorting by date and timestamp capabilities to CBDM tables

CBDM Bug Fixes

Fixed a bug where “archivedfolders modify” could not modify the “archiveworker-nodes” property (DO-225)

Fixed a bug where CBDM jobs stalled during copying (DO-223)

Fixed an issue where a CBDM folder could not be updated from the GUI (DO-458)

Fixed a CBDM page UI issue where the table was shown in the wrong region and did not align with the designed layout (DO-491)

What’s New in Superna Eyeglass Golden Copy Release 1.1.22

New in Superna Eyeglass Golden Copy Release 1.1.21 (12/14/2023)

New in 1.1.21

Usability Enhancements

Job view output now displays:

- Folders accepted

- Folders succeeded

- Folders failed

Feature Enhancements

The job error log and archiveworker logging now include the S3 request IDs.

Google (GCS) was added for Admin and Non-Admin users.

Better user job monitoring with activefiles summary integrated to job view small and large files

The Active Copies GUI now shows the following for each NODE or for the CLUSTER:

- The total # of files being copied
- Total small file count and the % of the total this represents
- Total large file count and the % of the total this represents
- The sum of all files in active copies for ALL nodes, in TB
- A table with the following breakdown by file size:
  - 1 byte - 100K
  - 100K - 1MB
  - 1MB - 5MB
  - 5MB - 100MB
  - 100MB - 1G
  - 1G - 100G
  - 500G - 1TB
  - Greater than 1TB
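The size breakdown can be sketched as a bucketing function. This is an illustration under assumptions, not the GUI's code: units are taken as binary (1K = 1024 bytes), each range excludes its lower bound and includes its upper bound, and `bucket_for` and `size_histogram` are hypothetical names. The listed ranges do not cover 0-byte files or sizes between 100G and 500G, so the sketch reports those as `unlisted` rather than guessing a bucket.

```python
from collections import Counter

KB, MB, GB, TB = 1024, 1024 ** 2, 1024 ** 3, 1024 ** 4  # binary units (assumption)

# (label, lower bound exclusive, upper bound inclusive; None means no upper bound)
BUCKETS = [
    ("1 byte - 100K", 0, 100 * KB),
    ("100K - 1MB", 100 * KB, MB),
    ("1MB - 5MB", MB, 5 * MB),
    ("5MB - 100MB", 5 * MB, 100 * MB),
    ("100MB - 1G", 100 * MB, GB),
    ("1G - 100G", GB, 100 * GB),
    ("500G - 1TB", 500 * GB, TB),
    ("Greater than 1TB", TB, None),
]


def bucket_for(size: int) -> str:
    for label, low, high in BUCKETS:
        if size > low and (high is None or size <= high):
            return label
    # 0-byte files and 100G-500G files fall outside the listed ranges.
    return "unlisted"


def size_histogram(sizes) -> Counter:
    # Count how many files fall into each bucket.
    return Counter(bucket_for(s) for s in sizes)
```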

Job view changelist/layers enhancement

Job view now includes these changelist details for incremental jobs:

- The layer being processed
- How many layers
- The layer size
- The # of files per layer
- Total amount of files in the changelist

Fixed in 1.1.21

T14560 Cloud Browser Access with macOS

Certificate handling in macOS can impact the Cloud Browser functionality

Workaround: Use Windows OS with supported browsers

T17893 searchctl archivedfolders history incorrectly shows recall job as job type FULL

The output from the searchctl archivedfolders history command will incorrectly show a recall job as job type FULL.

Workaround: searchctl jobs history correctly shows the job type as GoldenCopy Recall.

T19415 Recall stats incorrectly show errors for folder object which store ACLs

Recall stats and error command incorrectly show errors related to meta data recall for the folder objects created to store folder ACLs.

Workaround: None required. These are not errors associated with the actual folder ACLs. They can be identified in the error command output: the "Metadata apply failed" error is listed against a folder name that has been prefixed with the PowerScale cluster name.

T20500 'jobs view' for recall job not incrementing metadata-related stats

The searchctl jobs view command may incorrectly show 0 for metadata related stats.

Workaround: Use the folder stats to see cumulative stats for folder metadata recall.

T21778 Issues with Export for Recall job with meta-data

- The report incorrectly shows the Job Type as FULL and the report itself is located in the ./full folder.

- Accepted stats are incorrect

- snapshot path is incorrect

Recall jobs without metadata export as expected.

Workaround: Export report can be retrieved from the ./full folder but some report contents are incorrect as above.

GC-574 Recall stats are empty when the --older-than and --newer-than options are used

A recall job using the --older-than or --newer-than options does not show any stats in the stats view. No impact to the recall job itself.

Workaround: Use jobs view to see the count and bytes archived.

T19012 Recall of files from Azure fails for files uploaded with Golden Copy earlier than 1.1.4-21050

Files that were uploaded to Azure with Golden Copy build prior to 1.1.4-21050 cannot be recalled back to PowerScale using Golden Copy.

Workaround: Native S3 tools can be used to recall files from Azure.

T23315 Recall --older-than option creates folder structure when there are no matching objects

When a recall uses the --older-than option and the input is outside the range of every object timestamp, no files are recalled, as expected, but the folder structure is created and jobs view stats incorrectly show that all files were recalled.

Workaround: None available.

T23035 Jobs view does not track delete from source progress

The jobs view command does not show any progress status for the delete operation when a folder is configured with --delete-from-source.

Workaround: Use the FULL/FILES_DELETE_FROM_SOURCE stat in the stats view command output.

New in Cloud Browser for Data Mobility 1.1.21

New in 1.1.21

Feature Enhancements

The email notification is now sent after all files have been moved to SMB shares.

Concurrent Cloud Mover Jobs

Round-robin archived folders. Users can now manually create two or more archivedfolders (pipelines) for recall, and user jobs select archivedfolders on a round-robin basis, so that multiple download jobs (by the same user or different users) can run concurrently.

It is now also possible to manually assign specific archiveworker nodes to specific archivedfolders, so that the archiveworker resources acting on each folder are kept separate.

Fixed in 1.1.21

CBDM-390 Cloud to Premise - A Copy/Move job run with "Email on job completion" unchecked should not send an email for that job

When a Copy/Move CBDM job was run with "Email on job completion" unchecked, emails were still sent after the job finished. The expected result is that no email is sent for that job.

CBDM-171 CBDM Browser - List limited to 1000 objects and possibly blocking access to lower 'paths'

GC-2410 Recall - Errors from DENY (to download) bucket policy being counted as Skips

A file failed to download because a bucket policy denies the download of certain file types. The following error is counted as a Skip; it appears that all indexworker-related errors on the object are counted as Skips. Impact: other than the skips, no errors are recorded.

CBDM-753 Pinned archiveworker nodes intermittently not assigned to Kafka consumer group `archiveworker`

Pinned archiveworker nodes are intermittently not assigned to the Kafka consumer group `archiveworker`.

Workaround: Restart archiveworker, or run cluster down and up.

CBDM-761 Round-robin and Pinned archiveworkers behaviour in parallel jobs

When there are more jobs than pipelines, multiple jobs will share the same pipeline. Also, all the parallel jobs sharing a pipeline will need to finish their Copy/Move job steps before they can be marked as finished. Note that email notifications also share this behaviour.

Workaround: Add more pipelines to avoid parallel jobs sharing the same pipeline.

CBDM-407 Parallel CBDM job stats seem to be incorrect

All parallel jobs, or all jobs that happen to be running while others are running, finish at the same time regardless of the amount of data being copied/moved. This applies to multiple jobs run by the same user or by multiple users.

New in Superna Eyeglass Golden Copy Release 1.1.20 (10/05/2023)

New in 1.1.20

Usability Enhancements

The Cancel Job button was added for active running jobs. The user can now easily cancel an active running job with the click of a button.

Job statuses now include three distinct states: "Success", "Failure" and the new "Needs Attention". A NEEDS ATTENTION status also shows its reason: "High Error Rate", "Low Error Rate" or "Treewalk Errors".

Disk space display script simplifies monitoring disk space usage on users’ appliances. This script allows users to effortlessly check the disk space status of their relevant volumes, enabling them to troubleshoot disk space errors more efficiently.

Feature Enhancements

Incremental logging was added. Incremental logging will display the total number of files found in the changelist in both the IG log and the searchctl job view.

Archiveworker Redis Cache - Redis caching was added to optimize the handling of finished work, making processing more efficient and responsive. Archiveworker (AW) restarts can cause non-committed events to be reworked.

Fixed in 1.1.20

GC-2057 Job marked as a FAILURE due to Snapshot Extend step

Job was marked as a FAILURE due to the Snapshot Extend step, specifically when the Snapshot Extend step was unable to determine the state of the job.

GC-2614 Archivedfolder Checksum Value Reverting to Default(Off) After Upgrade

Following an upgrade, the checksum value unexpectedly reverts to its default state, so all archivedfolders added after the upgrade use the default checksum value.

`ecaadmin@mpgc6160-1:~> searchctl archivedfolders getConfig`

`{ "getGoldenCopyConfig": { "rateLimit": "", "fullArchSnapExpiry": 25, "checksum": "DEFAULT" }}`

Workaround: Set it back to ON.

`ecaadmin@mpgc6160-1:~> searchctl archivedfolder config --checksum ON`

`{ "data": { "gcConfigure": { "rateLimit": "", "fullArchSnapExpiry": 25, "checksum": "ON" } } }`
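A script that checks for the reverted setting could parse the command output. This sketch assumes only the two JSON shapes shown in this note (`getGoldenCopyConfig` from `getConfig`, `data.gcConfigure` from `config`); capturing the output from `searchctl` is left out, the function names are hypothetical, and ON is assumed to be the intended setting.

```python
import json


def checksum_setting(output: str) -> str:
    """Return the checksum value from searchctl JSON output."""
    doc = json.loads(output)
    if "getGoldenCopyConfig" in doc:   # archivedfolders getConfig
        config = doc["getGoldenCopyConfig"]
    else:                              # archivedfolder config --checksum ...
        config = doc["data"]["gcConfigure"]
    return config["checksum"]


def checksum_reverted(output: str) -> bool:
    # Assumes ON is the intended setting; DEFAULT (Off) indicates the reset.
    return checksum_setting(output) != "ON"


GET_OUTPUT = '{ "getGoldenCopyConfig": { "rateLimit": "", "fullArchSnapExpiry": 25, "checksum": "DEFAULT" }}'
print(checksum_reverted(GET_OUTPUT))  # True
```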

GC-2409 UI - Recall gauges - Skips counting against success %

The UI gauges may show a lower than expected Success % number if, during a Recall job, there were files that were skipped.

New in Cloud Browser for Data Mobility 1.1.20 (10/05/2023)

New in 1.1.20

Feature Enhancements

Highlighted Folder Icons. The folder icon will be dynamically highlighted when the user selects folders or parent folders within the path selector tree.

New in Superna Eyeglass Golden Copy Release 1.1.19 (09/01/2023)

New in 1.1.19-23189

Usability Enhancements

Backup notifications and configuration settings added.

Feature Enhancements

The --follow option, which follows a job's progress within the same command, already existed for other jobs and now also covers rerun jobs.

The ecactl search notifications list is now shown in chronological order.

Failed hardlink and softlink on rerun jobs are added to the rerun topic to re-download only failed files on a job.

Archiveworker (AW) can skip existing files on recall using ARCHIVE_WORKER_RECALL_SKIP_EXISTENT_FILES.

Fixed in 1.1.19-23189

GC-2404 Archivedfolders checksum setting resets to Default (Off) after a VM restart

If the checksum option has been changed to On, a VM restart sets it back to Default, which is Off.

GC-2405 UI - Archivedfolders Tab - Edit not populating `Tier`, which seems to be a Requirement

When using the UI to edit an archivedfolder, the Tier text field is required, but it may not be populated if the archivedfolder was added without specifying a Tier. For AWS, the default tier is named DEFAULT.

GC-1897 Pipeline-Recall Does not skip files with the skip existent export

On the second run of a pipeline recall job, existing files are not skipped and are duplicated instead.

GC-2035 Job Cancel - Job should not be a SUCCESS if cancelled at any step

A job that is cancelled at any step should not be marked as a SUCCESS.

New in Cloud Browser for Data Mobility 1.1.19-23189 (09/01/2023)

Feature Enhancements

Multipart copy from user buckets to staging buckets, with cross-region support. Users can stage files from the source bucket to the staging bucket even if the two buckets are in different regions.

Multipart copy is also used for copy-to-trash.

Multipart copy also avoids the 5 GB limit of the standard AWS copy operation.
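The part arithmetic behind multipart copy can be sketched as follows. The AWS limits used (at most 5 GiB per copied part and 10,000 parts per multipart upload) come from the AWS S3 documentation; the part size that CBDM actually uses is not stated in these notes, so the 1 GiB value in the example is hypothetical.

```shell
#!/bin/sh
# Sketch of multipart copy arithmetic: an object of OBJECT_SIZE bytes
# copied in parts of PART_SIZE bytes needs ceil(OBJECT_SIZE / PART_SIZE)
# parts. The CBDM part size is not documented; values are examples.
# (AWS also requires at least 5 MiB per part except the last; not checked.)

GIB=1073741824
MAX_PART_SIZE=$(( 5 * GIB ))   # AWS limit per copied part (5 GiB)
MAX_PARTS=10000                # AWS limit on parts per multipart upload

# part_count OBJECT_SIZE PART_SIZE -> number of parts (ceiling division)
part_count() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

# plan_copy OBJECT_SIZE PART_SIZE -> part count, or an error on stderr
plan_copy() {
    if [ "$2" -gt "$MAX_PART_SIZE" ]; then
        echo "part size exceeds 5 GiB" >&2
        return 1
    fi
    parts=$(part_count "$1" "$2")
    if [ "$parts" -gt "$MAX_PARTS" ]; then
        echo "too many parts: $parts" >&2
        return 1
    fi
    echo "$parts"
}
```

For example, `plan_copy $((12 * GIB)) $GIB` prints 12: a 12 GiB object, which is too large for a single standard copy, is copied as twelve 1 GiB parts.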

Fixed in Cloud Browser for Data Mobility 1.1.19-23189

CBDM-528 Email - SMB share path uses an Incorrect folder separator for the selected sub-folders

When a subfolder is selected instead of the root path of the SMB share, the SMB Share Path line in the email uses an incorrect folder separator:

SMB Share Path \\<ip>\<smbName>/subfolder

It should be

SMB Share Path \\<ip>\<smbName>\subfolder

CBDM-532 Email Needs Attention Causing Job Failure due to Unconfigured SMTP Server

When an email recipient is added on the user profile page and the SMTP server is not properly configured, the email step fails. This failure in turn causes the entire job to fail, affecting the overall job status.

CBDM-609 CBDM Browser - Buckets region being labelled incorrectly if there are other regions

Bucket regions are labelled incorrectly when there are buckets in other regions.

CBDM-615 Cloud to Premise - Submit SMB Target Button is obscured probably when the path is too long

When selecting an SMB target, the Submit button can be obscured depending on the length of the SMB target.

Workaround: After selecting the path, click outside the path picker. The picker should close, and the selected path should be set as the Target.

CBDM-614 Cloud Browser - Unable to browse buckets in regions other than the one specified in Credentials

Buckets in regions other than the one specified in the Credentials cannot be browsed.

CBDM-591 Skipping incremented from failing to move files to SMB due to the limit

Files that exceed the limit when being moved to an SMB path are handled incorrectly: instead of failing with errors, they are marked as skipped.

Supported OneFS releases

8.2.x.x

9.1.x.x

9.2.x.x

9.3.x.x

9.5.x.x (minimum 9.5.0.1)

New in Superna Eyeglass Golden Copy Release 1.1.17 (07/27/2023)

Fixed in 1.1.17

GC-1774 Json and Html report of recall/pipeline recall job is not showing accurate results for skipped files.

The JSON and HTML reports of recall and pipeline recall jobs do not show accurate results for skipped files.

GC-2254 upgrade from 1.1.9 - after upgrading, the running job is not continuing to run

An archive job was running before the upgrade, and the cluster was brought down while the job was running.

After the upgrade, the job does not resume: it does not appear in running jobs or in jobs history, and files are not uploaded to the bucket.

GC-1687 Can't add AD user to admin for GC, when username contains few special characters.

An AD user cannot be added as a Golden Copy admin when the user name contains certain special characters, such as:

% ^ * + ` = {} [] \ | : ; “ <> , ?

GC-2399 Grafana - All Time Gauges - Negative % number

The All Time gauges can show a negative % number, possibly when failures outnumber successes.

GC-2237 Issue with login when there are spaces in the AD user name

AD users whose user names contain spaces have issues logging in.

GC-1994 Pre Win2k usernames able to be added to Admin but fail at login

Pre-Windows 2000 (Pre Win2k) user names can be added as Admin but then fail at login.

Fixed in Cloud Browser for Data Mobility 1.1.17

CBDM-412 Cloud to Premise - Parallel Jobs - Issues including Objects incorrectly Moved into a single SMB path

Parallel jobs, whether run by the same user or by different users, can have issues, including objects being incorrectly moved into a single SMB path.

CBDM-428 CBDM - files are recalled on wrong path instead of `ifs/fromcloud/CBDM` its recalling on `ifs/fromcloud/BDM`

A trailing slash (/) on the recall source path breaks the recall. Make sure the --recall-sourcepath value does not end with a slash.
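A minimal sketch of normalising the source path before it is passed as the --recall-sourcepath value. It uses only POSIX parameter expansion; the helper name is hypothetical, and the rest of the recall command is not shown because its other options are outside this entry.

```shell
#!/bin/sh
# Sketch: strip trailing slashes from a recall source path (CBDM-428).
# The helper name is hypothetical.

# strip_trailing_slashes PATH -> PATH without trailing '/' characters
strip_trailing_slashes() {
    p=$1
    # Remove one trailing slash at a time so "a//" also becomes "a";
    # a bare "/" is left unchanged.
    while [ "${#p}" -gt 1 ] && [ "${p%/}" != "$p" ]; do
        p=${p%/}
    done
    printf '%s\n' "$p"
}
```

For example, `strip_trailing_slashes /ifs/fromcloud/CBDM/` prints `/ifs/fromcloud/CBDM`, which can then be used as the --recall-sourcepath value.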

What’s New in Superna Eyeglass Golden Copy Release 1.1.16

Fixed in 1.1.16

GC-1879 Auto ReRun - Add Stats for job `ReRun - Folders` and Stats Issues

Auto ReRun did not report stats for the `ReRun - Folders` job, and there were other stats issues.

GC-1905 Pipeline Recall with `--trash-after-recall` does not delete original files

A Pipeline Recall with `--trash-after-recall` does not delete the original files.

GC-1697 UI - Editing archivedfolders after the first Edit does not close the Edit box - Changes applied

After an archivedfolder has been edited and the changes applied, trying to edit again (the same archivedfolder or another one) does not close the Edit box.

GC-1748 ReRun of Incremental Rename does not work because missing info on previous name

ReRun of a failed Incremental Rename does not work because information about the previous name is missing. Each time a ReRun is initiated for this event, a NullPointerException (NPE) is also raised.

New in Superna Eyeglass Golden Copy Release 1.1.15 (05/03/2023)

Fixed in 1.1.15

GC-1525 UI-Language change function is not working

The drop-down button on the language change is not functioning.

CBDM-235 Cloud to Premise - Jobs History - Skipped bytes added to Success bytes

The skipped bytes are added to the Success bytes.

CBDM-272 Job is marked as done without Recall if the Email is unchecked

For Cloud to Premise jobs with Email checked, the job is effectively paused until a Recall job is run. With Email unchecked, the job was marked as done without the Recall.

GC-1987 UI - Missing Edit and Add archivedfolders

Adding and editing archivedfolders is not available in the UI.

GC-1205 Backup and recover functionality-Not able to fully recover the system

The backup and recover functionality is not able to fully recover the system.

GC-1880 When Auto-ReRun is triggered it can use the wrong job data (of another archivedfolders)

When Auto-ReRun is triggered, it can use the job data of a different archivedfolder.

GC-1749 Longpath - Incremental Rename did not update the JSON content - Recall downloaded old name

Renamed files (regular and hardlink) inside a longpath were not recalled with the new name.

New in Superna Eyeglass Golden Copy Release 1.1.14 (04/04/2023)

Fixed in 1.1.14

GC-1750 Longpath- Incremental Modify (Content) did not update the inode for Hardlink

Inside a longpath, after the content of a hardlink was changed, the inode was not updated on S3.

CBDM-234 Cloud to Premise - Jobs History and Running - Errors not counted

Errors are not counted in Cloud to Premise job data.

CBDM-262 Local admin is seeing a bucket without adding Credential

The local user sees a bucket when no Credentials have been added.

Local users should only be able to view jobs/history

GC-1700 Remove suffix of downloaded files

Files inside a long path can be downloaded using Cloud Browser, but the downloaded files have a suffix.

New in 1.1.14

Version Based Recall

Date Based Recall on the UI is now available. During recall, users can now enter a date and recall the version of the object that was most recently uploaded before the selected date.
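The selection rule for Date Based Recall can be sketched as: keep only the versions uploaded before the chosen date, then take the most recent one. The input format below (a UTC ISO 8601 timestamp and a version ID per line) is hypothetical and is not a Golden Copy output; because every timestamp has the same fixed-width format, plain string comparison gives chronological order.

```shell
#!/bin/sh
# Sketch of "most recent version uploaded before the selected date".
# Input lines: "<UTC ISO-8601 timestamp> <version-id>" (hypothetical format).
# Same-format UTC timestamps sort chronologically as plain strings.

# version_before CUTOFF < versions -> version id, or nothing if none match
version_before() {
    awk -v cutoff="$1" '$1 < cutoff { print }' \
        | sort \
        | tail -n 1 \
        | awk '{ print $2 }'
}
```

For versions uploaded on 2023-01-10, 2023-03-05 and 2023-05-20, a cutoff of `2023-04-01T00:00:00Z` selects the 2023-03-05 version; a cutoff earlier than every upload selects nothing.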

Usability Enhancements

Failed folders and files are retried automatically by a new job spawned at the end of the archive job.

The --folder-only option is added to re-run.

The --follow option is now available for archivedfolders recall.

Cloud Browser for Data Mobility 1.1.14

Usability Enhancements CBDM

Data can now be moved to the CBDM share.

A job's affected files can now be exported to a CSV file for download.

The jobs history table is faster, using an in-memory cache.

New in Superna Eyeglass Golden Copy Release 1.1.13 (03/06/2023)

Fixed in 1.1.13 Release

GC-1510 Non-admin is able to see all archivedfolders

Regression in the way archivedfolders are listed for non-admin users.

CBDM-213 Cloud to Premise - Jobs data for Running and History should show Target SMB

Running jobs and jobs history do not show the target SMB path that the Copy/Move is going into or went into.

CBDM-190 Cloud to Premise - Keep objects with the same names during Staging

Objects with the same names should be kept during Staging.

GC-970 Long Path Support - Rename fails possibly due to S3 Error that key is too long

New in 1.1.13-23045

Refer to previous 1.1.12 builds for what's new.

Supported Versions of S3 Targets

ECS 3.6, 3.7, 3.8

AWS S3 - all

Azure - all

Google Storage - all

Cohesity - contact support

Flashblade - contact support

MinIO - contact support

Wasabi - all

Ceph version 15

Supported S3 Protocol Targets

The supported S3 Protocol targets can be found in the documentation here.

Deprecation Notifications

Azure default Tier change

In the next release, the default tier for Azure upload will change from cold to hot. Tier-specific upload to Azure will require an advanced license.

HTML Summary Report to be deprecated

In the next release, the HTML report created using the export command will be deprecated and replaced by a job report that is created and downloadable from the Golden Copy GUI.

Support Removed / Deprecated in this Release

Recall "metadata" option

The option to recall metadata only or re-apply metadata to a previous recall job is no longer available. The recall job can be run again if required.

New in Superna Eyeglass Golden Copy Release 1.1.12 (02/03/2023)

Fixed in 1.1.12 release:

GC-1078 UI - Pause and Start buttons have no action

Pause and Start buttons in the Archived Folder tab are not available.

GC-1513 Golden Copy special character regression for ECS, AWS

Special character handling regressed for ECS and AWS targets.

GC-1070 job id is lost after upgrade to 1.1.11 so no jobs running/jobs view/job history and folder stats

GC-1069 Multi part files recall issue - Recall files much larger than the original

The system view tab works

New in Superna Eyeglass Golden Copy Release 1.1.11 (09/27/2022)

New in 1.1.11

GC-2 Treewalk Robustness

Added robustness to the treewalk process to handle errors and reduce duplication when there are issues in correctly retrieving data during the treewalk phase.

GC-138 Refresh GUI pages

Archive Jobs and Archived Folders pages now auto-refresh.

GC-337 Custom Metadata from application-specific files

Files can be optionally scanned during the upload process and custom metadata can be extracted and appended to the corresponding objects. This includes things like latitude and longitude from images, author from word documents, and more!

GC-341 Keep full history of file and object in objects as they're moved to the cloud

GC-373 Bugs

Miscellaneous bug fixes.

GC-586 Download file(s) to User pc from cloud browser

Users can now download individual files from cloud targets directly from their web browsers.

GC-762 Cloud file download from GCS for all file types

Fixed in 1.1.11

T23283/GC-396 Golden Copy GUI fully restricted for non-admin user

Non-admin users can log in to the Golden Copy GUI and are fully restricted from admin functionality.

Non-admin users can see all configured folders and can add new folders. Impact: folder management only; a folder can be added and a job can be started.

Non-admin users can recall files from Cloud Browser. Note: Cloud Browser is properly filtered based on the shares of the logged-in user.

Resolution: The Golden Copy GUI URL should be provided to non-administrative users.

GC-562/563 Jobs view for large file is not accurate

For a job that contains large files, the jobs view is incorrect: the large-file bytes archived are reported as less than what was actually archived.

Resolution: The stats view command for the folder reports the full archived amount.

Not available in 1.1.11

T17181 Archive to AWS Snowball

Archive to AWS Snowball is not supported in this optimized update release. It is planned for an upcoming update.

T17195 Upload to Azure, Cohesity, ECS or Ceph via Proxy

Upload to Azure, Cohesity, ECS or Ceph through an HTTP proxy is not supported in this update.

T16247 DR cluster alias / redirected recall

The ability to recall to a cluster other than the original source cluster is not supported in this update.

Technical Advisories

Technical Advisories for all products are available here.

Known Issues

Known Issues Data Orchestration

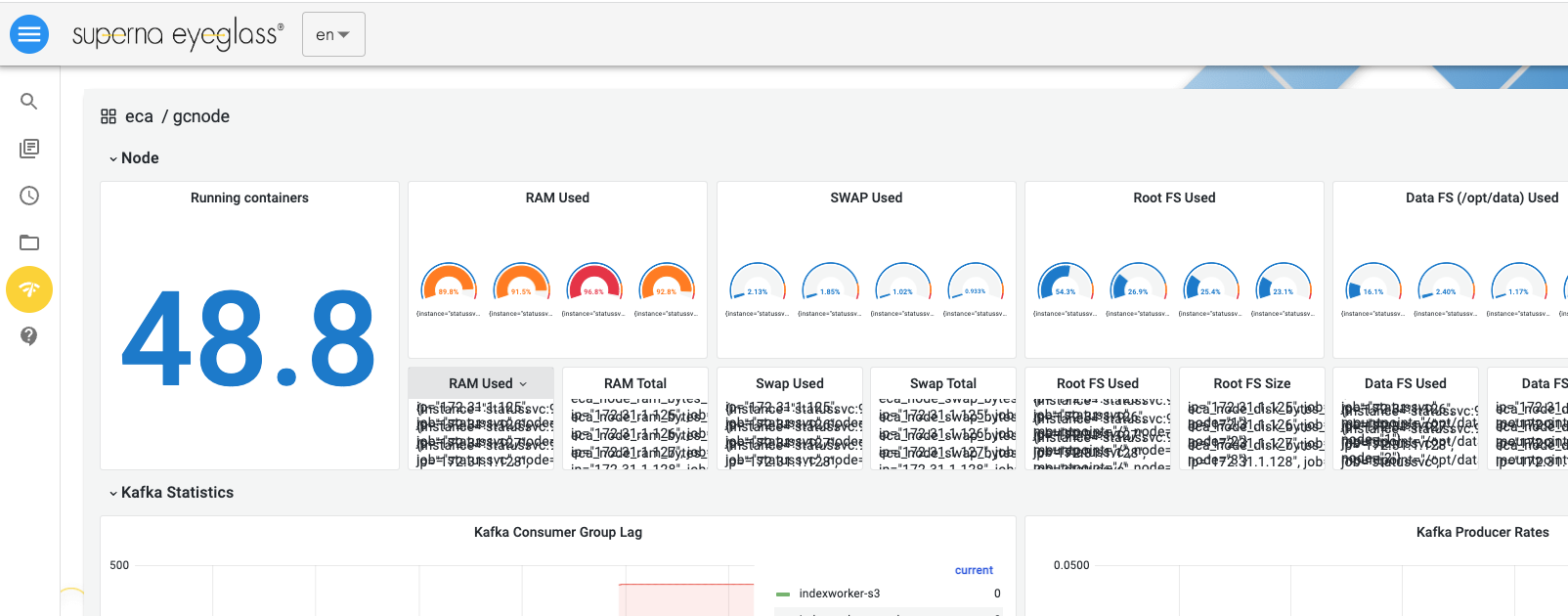

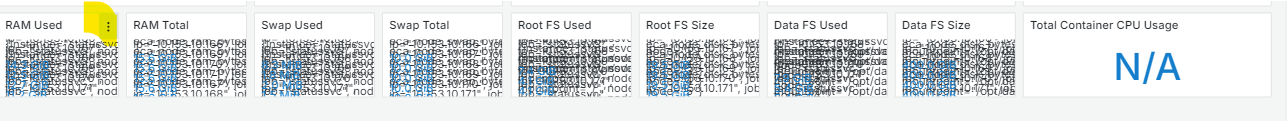

DO-1050 Some Grafana stats missing after upgrade to 1.2.2-25000

After upgrading to the 1.2.2 build, some stats are missing from the Grafana UI (CPU usage, Memory usage, Network RX, Network TX, IO read/write rate).

Workaround: Run the following command to check the missing stats on each node:

ecactl cluster exec 'ecactl stats'

- The RAM, Swap, Root and Data stats may appear compressed into a small section of Grafana dashboard and not clearly visible.

- Workaround: To see the detailed values, expand or zoom into the corresponding panel: click the three dots and select View.

DO-1048 No error shown for Non-Existent Subfolder in Recall Operation

When the --subdir option is used with a recall job, the path is not validated, so no error is shown for a non-existent subfolder when the job is started. Consequently, a recall job started with an incorrect path results in an error.

Workaround: Ensure the correct path is provided when using the --subdir option to avoid this issue.

Known Issues Archiving

T16629 Azure upload error where name contains %

Upload to Azure of a file or folder whose name contains the % character is not handled and fails.

Workaround: None available.

T18979 Incremental Archive issues for files with Zone.Identifier suffix

Under some conditions, PowerScale stores files with a Zone.Identifier suffix. These files may be archived without metadata, or may fail on archive and not be archived at all.

Workaround: These files can be excluded from archive by adding " --excludes=*.Zone.Identifier" to the archivedfolder definition.
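Before adding the exclude, it can help to see which files the pattern would match. This sketch uses standard find with the same *.Zone.Identifier pattern as the --excludes value above; it assumes the archived path is reachable from wherever the script is run, and the function names are hypothetical.

```shell
#!/bin/sh
# Sketch: list and count files that match the *.Zone.Identifier pattern
# used in the --excludes value. Run where the archived path is reachable.
# Function names are hypothetical.

# list_zone_identifier_files DIR -> one matching path per line
list_zone_identifier_files() {
    find "$1" -type f -name '*.Zone.Identifier'
}

# count_zone_identifier_files DIR -> number of matching files
count_zone_identifier_files() {
    list_zone_identifier_files "$1" | wc -l | tr -d ' '
}
```

For example, `count_zone_identifier_files /ifs/data/projects` (an example path) reports how many files the exclude would skip.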

T19218 Setting to enable delete for Incremental archive not working

The system setting export ARCHIVE_INCREMENTAL_IGNORE_DELETES=false, which should enable deletes during incremental archive, does not work. Files deleted on PowerScale are not deleted from S3.

Workaround: None available.

T19441/GC-543 Move/Delete operation in a single incremental sync orphans data in S3 target

Under certain circumstances, when the same incremental update contains a move or rename of a folder, an empty folder is orphaned in the S3 target. For example, for a move or rename of a folder combined with a delete of a sub-folder, the folder move is correctly applied on the S3 target, but the deleted sub-folder is not deleted from the S3 target. For a delete and a rename of two subfolders combined with an edit of a file in the renamed subfolder, the renamed and deleted folders are not deleted from the S3 target.

Workaround: Orphaned folders can be removed manually from the S3 target using native S3 tools.

T21370 No message when snapshot for upload from snapshot is not in Golden Copy inventory

An archive job that specifies an existing snapshot as the source of the copy requires the specified snapshot to be in the Golden Copy inventory. If an upload job is started without the snapshot in the inventory, no error message is displayed.

Workaround: Inventory runs automatically once a day at midnight. If the snapshot specified is not in the inventory, the message will indicate that the job was submitted but there will be no job-id. Ensure that a job-id is returned when starting this job.

T21745 s3update with a large number of changes may incorrectly delete folder objects with ACL information

Under some conditions, running the s3update command deletes the folder objects of folders that were not modified. Only the folder objects that store ACL information are affected; the data itself is still present.

Workaround: None available.

T22925 Cannot use existing folder to copy from DR cluster

If the original cluster used to copy files to object storage becomes unavailable and a second (DR) cluster is used instead, incremental updates to the originally uploaded objects are not available. Impact: incremental updates only. Recall of data uploaded from the original cluster to the DR cluster is available, and a new folder can be added on the DR cluster for upload.

Workaround: Re-add the folder for the DR cluster. This requires a full archive of the folder, followed by incremental archives.

T23126 Issues with full and incremental jobs for folders when S3 prefix is used

With ARCHIVE_S3_PREFIX configured, there are the following issues for folders:

- On incremental archive, when a folder is deleted, the corresponding folder object in object storage is not deleted, resulting in orphaned folder objects. Workaround: delete the folder objects manually from object storage.

- On full archive, folder copy is not skipped when it should be, resulting in unnecessary creation of additional folder objects. Workaround: none required.

GC-675 Archive job does not finish when tree walk fails at the beginning

When an archive job fails on all of the records in the treewalk stage, the job can hang indefinitely and must be manually cancelled.

Workaround: Cancel the job from the CLI using searchctl jobs cancel --id <jobid> and resubmit.

Known Issues Reporting

General Reporting Issues

T17647 After restart stats, jobs view, export report, cloud stats not consistent

After a cluster down/up, or a restart of the indexworker, archiveworker or isilongateway containers, the stats will not match.

Workaround: None available

T17932 searchctl jobs view or folder stats may be missing reporting on small percentage of files uploaded.

The searchctl jobs view command or folder stats command may not properly report all files uploaded to the S3 target.

Workaround: Verify file count directly on S3 target.

T19136 Jobs View / Export Report do not correctly calculate job run time if job is interrupted

For an archive job that is interrupted - for example cluster down/up while archive job is running - the jobs view and export report show a run time that is shorter than the true duration of the job.

Workaround: None available

T19466 Statistics may show more than 100% archived/attempted after a cluster down/up

If there is an archive job in progress when a cluster down/up is done, the job continues on cluster up but the jobs view and folder stats may show more than 100% for Archived and Attempted files.

Workaround: The archive job can be run again to ensure all files are uploaded. Any files that are already present on the object storage will show as a skipped statistic.

T21186 Statistics may not be accurate when there is a rename operation

If there is an archive job which includes a rename operation, the job and folder stats may not be accurate.

Workaround: Verify in object storage that the correct files have been uploaded.

T21257 The 'file change count' column in jobs running always shows 0 for incremental archive

For incremental archive, the searchctl jobs view command will show the number of files in the changelist, but the searchctl jobs running command always shows a 0 count.

Workaround: Use the searchctl jobs view command to see the number of files in the changelist.

T21572 Export report for folder with multiple jobs may only produce 1 report

If multiple jobs have been run against a folder and then an export job is run for one of the jobs, a subsequent export job for a different job may not generate a report.

Workaround: Use the jobs view command to see the details of the job.

T21668 Copy from flat file does not report errors

On a copy from a flat file, where the copy encounters errors, jobs view and export incorrectly show the job as 100% success.

Workaround: Use folder stats or jobs view "Errors" stats to see error count.

T22523 Export command error json files may be missing files

The export command correctly reports the number of errored files in the HTML summary report, but the corresponding JSON files may be missing some files.

Workaround: None available

T23450 Jobs Summary email report always shows running job

The Jobs Summary email report always shows running jobs even when there are no jobs running. When a job is running the running job count is increased accordingly.

Workaround: Jobs are running if the running job count is higher than the count reported when the system is idle.

GC-385 jobs view and stats view incorrect for paused job that is resumed after a cluster down/up

A paused job that is resumed after a cluster down/up will successfully archive remaining files, but the jobs view and stats view will show incorrect statistics.

Workaround: None available. Inspect on the target to confirm all files have been archived.

GC-383 Pipeline recall stats may be incorrect

The stats view and jobs view may show a higher number of objects recalled than were actually recalled by a pipeline job.

Workaround: Verify on the file system directly the number of objects that were recalled.
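A minimal sketch of the manual verification, assuming the recall target is mounted and reachable on the host where the script runs; the helper names are hypothetical. File names that contain newline characters would be counted more than once by this simple approach.

```shell
#!/bin/sh
# Sketch: count what was recalled directly on the file system, to compare
# with the recalled counts in stats view and jobs view (GC-383).
# Helper names are hypothetical; newline-containing names are miscounted.

# count_files DIR -> number of regular files under DIR (recursive)
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}

# count_dirs DIR -> number of directories under DIR, excluding DIR itself
count_dirs() {
    find "$1" -mindepth 1 -type d | wc -l | tr -d ' '
}
```

Run both functions against the recall target path and compare the results with the recalled counts reported for the job.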

Recall Reporting Issues

T16960 Rerun recall job overwrites export report

Rerun of a recall job followed by exporting a report will overwrite any previous export report for that folder.

Workaround: The export report from a previous recall can be recreated by running the searchctl archivedfolders export command for the appropriate job id.

T18535 Recall reporting issue for accepted file count / interrupted recall

There is no stat for the recall accepted file count. Also, if a recall is interrupted during the walk that builds the list of files to recall, the job reports success even though not all files were recalled.

Workaround: None available

T18875/T21574 Recall stats may incorrectly show errored count

Under some circumstances a recall job may show stats for Errors when in fact all files were successfully recalled.

Workaround: Use the searchctl archivedfolders errors command to check for errors. A manual count of files on the PowerScale can also be used to verify the recall.

T21561/T21577 Recall stats issues

- For a recall job where some files recalled had an error, the jobs view Accepted and Attempted stats are incorrect.

- Stats unrelated to the recall may get incremented: FULL/MULTIPART_FILES_ACCEPTED, FULL/MULTIPART_FILES_ARCHIVED

- jobs view Stats for Count (Recall), Count (Metadata), Errors (Recall), Errors (Metadata) may be incorrect

- stats view FULL/FILES_ARCHIVED_RECALLED may not be accurate

Workaround: Use the searchctl archivedfolders errors command to check for errors. A manual count of files on the PowerScale can also be used to verify the recall.

Known Issues Recall

T16129 Recall from Cohesity may fail where folder or file contain special characters

Recall of files or folders from Cohesity which contain special characters may fail. Job is started successfully but no files are recalled.

Workaround: None available

T16550 Empty folder is recalled as a file for GCS

Recall from GCS target of an empty folder results in a file on the PowerScale instead of a folder.

Workaround: If the empty directory is required on the file system it will need to be recreated manually.

T18338 Recall Rate Limit

Golden Copy does not have the ability to rate limit a recall.

Workaround: None available within Golden Copy.

T18428 Recall for target with S3-prefix result in extra S3 API call per folder

For S3 targets that require a prefix for storing folders, an extra S3 API call is made per folder on recall. This API call results in an error but does not affect the overall recall of files and folders.

Workaround: None required

T18600 Recall Job where recall path is not mounted does not indicate error

No error is displayed if recall path is not mounted. In this case files may be downloaded to the Golden Copy filesystem which is not the requested end location and could also result in disk space issues on the Golden Copy VM.
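Because no error is shown, the mount can be checked before a recall job is submitted. This sketch reads /proc/mounts on a Linux host such as the Golden Copy VM; the function name and example path are hypothetical, and the path must be given exactly as it appears in /proc/mounts (no trailing slash; spaces are stored there as \040).

```shell
#!/bin/sh
# Sketch for T18600: confirm that the recall path is a mount point before
# starting a recall job, so files are not written to the VM's own disk.
# Linux only (reads /proc/mounts). Function name is hypothetical.

# is_mount_point PATH -> exit status 0 if PATH is listed as a mount point
is_mount_point() {
    awk -v p="$1" '$2 == p { found = 1 } END { exit !found }' /proc/mounts
}
```

For example, `is_mount_point /mnt/recall || echo "recall path is not mounted"` (an example path) can be run before the recall job is started.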

Workaround: Ensure that the mount for the recall path exists before starting the recall job. See information here on the mount requirements.

T19438 Files may not be recalled

Under some circumstances, files may not be recalled, and no error is indicated in Golden Copy.

Workaround: Files can be manually retrieved using S3 native tools.

T19649 Meta-data not recalled where AD user/group cannot be resolved

Where files were uploaded and the owner or group was returned by the PowerScale API as Unknown User or Unknown Group (because that owner or group no longer exists), the Unknown User/Group cannot be resolved on recall, and this blocks any other metadata from being applied.

Workaround: The metadata stored in the S3 target can be used to confirm the original metadata settings, which can then be applied manually on the operating system.

T21626 Recall Job for large number of files may get stuck

For a large recall job, Golden Copy may get stuck, either with files still left to recall or after the recall job has completed.

Workaround: Contact support for assistance.

T23312 Recall --start-time and --end-time options do not recall any data

Specifying the --start-time and/or --end-time option on recall does not return any results.

Workaround: If the data can be identified based on the object timestamp rather than the file metadata, the --older-than and/or --newer-than option can be used.

GC-652 Recall - Stats issue with multipart files

GC-2378 Pipeline Recall and CBDM - job view is intermittently showing `0` recalled Bytes

When a pipeline recall job is run, the job view intermittently shows the recalled file size as 0B instead of the actual size.

In the stats, FULL/BYTES_ARCHIVE_RECALLED is showing 0B.

In the GC UI, the job history shows the recalled files size 0B.

GC-2801 Azure Recall - Unable to Recall files inside subfolder uploaded in 1.1.19 using 1.1.20 - Possible HTTP header issues

Files inside a subfolder that were uploaded to Azure with 1.1.19 cannot be recalled with 1.1.20, possibly because of HTTP header issues.

Known Issues Media Mode

T23160 Failed link archive are not recorded in stats

When there is an error on copy of a link, jobs view and stats view do not have any stats related to the errors.

Workaround: If jobs view has a difference between accepted and uploaded stats this is an indication of errors. For link errors, the errors command can be used to view the errors.

T22022 Error on incremental for link object with suffix

With Golden Copy configured to add an ARCHIVE_LINK_SUFFIX to links, incremental archive will result in error on delete and rename because the suffix is not applied.

Workaround: Link objects in this case should be deleted manually from object storage.

T22488 Object storage still contains file after all hard links and original file have been deleted

If all of the hard links and the related inode file are deleted, the file is still present in the .gcinodes folder in the object storage.

Workaround: Object can be manually deleted from the inodes folder in object storage if required.

T22484 Deleting original hard link file may result in multiple copies of the file in object storage

If a hard link has been copied to object storage and the original file is subsequently deleted, the link object in object storage may store the full original file as well as a copy of the file in the .gcinodes folder.

Workaround: Objects no longer required may be removed manually from object storage.

Known Issues Pipeline

T22539 Move to Trash / Delete configuration is not working

With Pipeline configured with the recall folder using --source-path and the corresponding settings to enable the --trash-after-recall option, objects that are downloaded successfully or skipped on recall are not deleted or moved to the recycle bucket.

With Pipeline configured with an archive folder using --delete-from-source and the corresponding system settings enabled, files that are successfully copied are deleted but files that are skipped are not.

Workaround: Objects/files on the source must be removed manually.

Known Issues Golden Copy GUI

T23150 Error on adding or editing archivedfolder has no details

When there is an error on adding or editing an archivedfolder, the message provided is "Error occurs in graphQL request", without any details of what the actual error was.

Workaround: Browser developer tools can be used to see the details of the GraphQL response.

T23076 No validations when editing folder from Golden Copy GUI

When editing a folder from the Golden Copy GUI there are no checks for valid input on save. Impact: invalid settings can corrupt folders and block functionality.

Workaround: Manually verify inputs for accuracy.

T22851/T23145 Golden Copy GUI Folder Management GUI Issues

When using the Golden Copy GUI to add/edit/delete folders there are the following GUI issues:

- fields are not cleared after changing cloud type selection - workaround: manually clear or overwrite previously entered values

- path field does not allow copy/paste - workaround: use path selector

- summary window does not show scrollbar - workaround: resize window

T23002 Golden Copy Cloud Browser filters not displayed

After selecting Filter Results Files or Folders in Cloud Storage Browser, the GUI does not show that a filter is applied.

Workaround: None required.

T22385 Golden Copy GUI shows Search product on some pages

On the login page and on some tabs once logged in the product name "search" is displayed instead of "goldencopy". No impact to functionality.

Workaround: None required.

GC-607 Cloud Browser - support self signed certificates on local targets

Known Issues General & Administration

T16640 searchctl schedules uses UTC time

When configuring a schedule with the searchctl schedules command, the time must be entered in UTC.

Workaround: None required
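Because schedule times are entered in UTC, a local time has to be converted first. This sketch relies on GNU date (coreutils) and takes the UTC offset explicitly, so the result does not depend on the VM's time zone setting; the function name is hypothetical, and how the converted time is then entered into searchctl schedules is not shown here.

```shell
#!/bin/sh
# Sketch for T16640: convert a local wall-clock time to UTC before
# entering it in a schedule. Requires GNU date (-d parsing).
# The offset is explicit (e.g. -0400 for EDT); the date may change.

# to_utc "YYYY-MM-DD HH:MM" OFFSET -> "YYYY-MM-DD HH:MM" in UTC
to_utc() {
    date -u -d "$1 $2" '+%Y-%m-%d %H:%M'
}
```

For example, `to_utc '2023-07-27 02:00' -0400` gives `2023-07-27 06:00`, while `to_utc '2023-07-27 02:00' +0530` gives `2023-07-26 20:30`, one day earlier.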

T21073 Phone home may fail when archive folder path contains characters 'id'

Phone home may fail if a configured archive folder path ends in 'id', for example /ifs/data/patientid.

Workaround: None available.

T22913 Licensing of Powerscale can be lost

A sequence of operations that deletes and re-adds clusters may result in licenses being lost on a cluster down/up.

Workaround: Contact support.superna.net for assistance.

T23382 Verbose archived folders list shows properties no longer used

The searchctl archivedfolders list --verbose output shows the properties "recallTargetCluster" and "recallTargetPath", which are no longer used.

Workaround: None required.

GC-1119 some file uploads get errored during the upgrade.

GC-2233 GoldenCopy - Running a second job with skips enabled causes the job not to complete

The initial full job runs to completion without issues, but a second full job, when run, keeps skipping files until the job is cancelled.

GC-2387 Incremental - Count Accepted is different than Archived possibly due File_Rename

Accepted (count) differs from Archived (count) for incremental jobs. Looking at the jobs view, there are fewer accepted than archived.

GC-2692 Large Changelists Max Out Isilongateway RAM

Multiple large change lists from incremental jobs will cause the Isilongateway container to restart.

Workaround: Spread incremental schedules out to allow more time between incremental jobs. Check the change list on OneFS, and if more than 1M changes are found, run a full job on the folder instead of an incremental job.
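The decision rule in this workaround can be sketched as a small shell function. It assumes the OneFS change count has already been obtained by some other means (not covered in these notes) and uses the 1M threshold from the workaround; the function and variable names are hypothetical.

```shell
#!/bin/sh
# Sketch of the GC-2692 workaround rule: above about 1M changes in the
# OneFS change list, run a full job instead of an incremental job.
# How the change count is obtained is not covered; it is an input here.

CHANGELIST_FULL_THRESHOLD=1000000   # "over 1M changes" from the workaround

# choose_job_type CHANGE_COUNT -> "full" or "incremental"
choose_job_type() {
    if [ "$1" -gt "$CHANGELIST_FULL_THRESHOLD" ]; then
        echo full
    else
        echo incremental
    fi
}
```

For example, `choose_job_type 1500000` prints `full`, while `choose_job_type 250000` prints `incremental`.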

DO-425 Grafana data not collected for CPU and memory usage

Grafana UI may not show CPU and Memory usage.

Workaround: Use ‘docker stats’ to monitor both CPU and memory.

DO-424 The CBDM Move job got Stuck, didn't complete

When parallel CBDM move jobs are run, the jobs may get stuck and not complete successfully.

Workaround: Run a single CBDM move job at a time.

DO-431 Archiveworker Restarting During Large File Processing on GCS

When an archive job for GCS is run with a dataset of more than 5000 files, the job runs into the following issues:

1. Archiveworker container restarts during upload, but the job eventually completes, and files are uploaded to GCS

2. The job takes longer to finish, even with a small set of files

3. Stats are not correct

Workaround: none

Known Limitations

T15251 Upload from snapshot requires snapshot to be in Golden Copy Inventory

Golden Copy upload from a PowerScale snapshot requires the snapshot to be in the Golden Copy inventory. The inventory task runs once a day. If you attempt to start an archive job before the snapshot is in the inventory, you will get the error message "Incorrect snapshot provided".

Workaround: Wait for the scheduled inventory to run, or run the inventory manually using the command: searchctl PowerScales runinventory

T15752 Cancel Job does not clear cached files for processing

Any files that were already cached for archive will still be archived once a job has been cancelled.

Workaround: None required. Once cached files are processed there is no further processing.

T16429 Golden Copy Archiving rate fluctuates

Golden Copy archiving rates may fluctuate over the course of an upload or recall job.

Workaround: None required.

T16628 Upgrade to 1.1.3 may result in second copy of files uploaded for Azure

In the Golden Copy 1.1.3 release, upload to Azure replaced any special characters in cluster, file, or folder names with "_". In the 1.1.4 release, special characters are handled differently, so a subsequent upload in 1.1.4 will re-upload any affected files and folders because their object names no longer match what was uploaded in 1.1.3. If the cluster name contains a special character, for example Isilon-1, then all files will be re-uploaded.
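To see why a single special character in the cluster name triggers a full re-upload, the sketch below compares object names built the 1.1.3 way and the 1.1.4 way. It is an illustration only, under two labeled assumptions: the set of characters 1.1.3 treated as special is not documented here (the sketch assumes anything other than a letter or digit), and 1.1.4 is modeled as keeping names unchanged. The helper names are hypothetical.

```python
import re

# ASSUMPTION: approximates the 1.1.3 character set; the real set may differ.
_SPECIAL = re.compile(r"[^A-Za-z0-9]")


def key_113(*parts: str) -> str:
    """Approximate a 1.1.3 object name: special characters become "_"."""
    return "/".join(_SPECIAL.sub("_", part) for part in parts)


def key_114(*parts: str) -> str:
    """Approximate a 1.1.4 object name (ASSUMPTION: names kept as-is)."""
    return "/".join(parts)


def needs_reupload(*parts: str) -> bool:
    """An object is uploaded again when its new name differs from the old one."""
    return key_113(*parts) != key_114(*parts)


if __name__ == "__main__":
    print(key_113("Isilon-1", "ifs", "data"))         # old-style name
    print(needs_reupload("Isilon-1", "ifs", "data"))  # cluster name differs
```

Because the cluster name is the first component of every object name, one special character in it changes every name, which matches the note that all files will be re-uploaded.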

Workaround: None

HTML report cannot be exported twice for the same job

An HTML report cannot be exported again for a job whose report has already been exported.

Workaround: None required. Use the previously exported report.

T16250 AWS accelerated mode is not supported

Golden Copy does not support adding AWS with accelerated mode as an S3 target.

T16646 Golden Copy Job status

When viewing the status of a Golden Copy job, a job with a status of SUCCESS may still contain errors in processing individual files. The job status only indicates whether the job was able to run successfully. Use the searchctl jobs view or searchctl stats view command, or the HTML report, to determine the details of the job execution, including errors and successes.
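The point that SUCCESS describes the job run rather than every file can be captured by checking both values together. This is a minimal Python sketch, assuming the status and the error count have already been read from searchctl jobs view or stats view output; the function name and the non-SUCCESS status used in the example are hypothetical.

```python
def summarize_job(status: str, errors: int) -> str:
    """Combine the job status with the per-file error count for the job."""
    if status != "SUCCESS":
        # The job itself did not run to completion.
        return f"job did not run successfully (status {status})"
    if errors > 0:
        # The job ran, but individual files failed and need review.
        return f"job ran, but {errors} file(s) had errors; review the errors"
    return "job ran with no file errors"


if __name__ == "__main__":
    print(summarize_job("SUCCESS", 3))
```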

T17173 Debug logging disk space management

If debug logging is enabled, the additional disk space it consumes must be managed manually.

T18640 searchctl archivedfolders errors supported output limit

The searchctl archivedfolders errors command supports an output limit of 1000 entries.

Workaround: For a longer list, use the --tail --count 1000 or --head --count 1000 options to limit the display.

T19305 Queued jobs are not managed

Golden Copy executes up to 10 jobs in parallel. If more than 10 jobs are submitted, the remaining jobs are queued and wait for a job slot to become available. Queued jobs are not visible through any command, including the command for listing running jobs, and they do not survive a restart.

Workaround: After a restart, any jobs that were queued must be resubmitted. Tracking is available for the 10 running jobs, and job history is available for completed jobs.
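Because queued jobs are invisible and are lost on restart, you need your own record of what was submitted. This is a minimal Python sketch of that bookkeeping, assuming you can obtain the running job IDs and the job history by other means; the function names and job IDs are hypothetical, and the slot model is simplified to "the first 10 submitted jobs run".

```python
MAX_PARALLEL_JOBS = 10  # parallel job limit stated in the release notes


def split_submissions(submitted: list) -> tuple:
    """Simplified model: the first 10 jobs get slots, the rest are queued."""
    return submitted[:MAX_PARALLEL_JOBS], submitted[MAX_PARALLEL_JOBS:]


def jobs_to_resubmit(submitted: list, running: list, completed: list) -> list:
    """Submitted jobs that are neither running nor in job history were lost."""
    seen = set(running) | set(completed)
    return [job for job in submitted if job not in seen]


if __name__ == "__main__":
    jobs = [f"job{i}" for i in range(12)]
    active, queued = split_submissions(jobs)
    print(queued)
    print(jobs_to_resubmit(jobs, active, []))
```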

T20379 Canceled archive job continues upload

If an archive job is cancelled while the filesystem walk is still in progress, the walk continues after the cancel. If another archive job on the same folder is then started while the original snapshot is still present, files from both snapshots will be uploaded. Impact: A file that is uploaded twice is skipped if it is already present and uploaded if it is not. The order of upload is not guaranteed.

Workaround: Please contact support.superna.net for assistance should this situation arise. Planned resolution in 1.1.6.

GC-1504 Parallel jobs limited to 10 at a time

If more than 10 jobs are submitted to run (manually or by schedule), the remaining jobs are queued and eventually run as well.

GC-1527 Cloud Browser - Single File Download - Support for downloading symlinks that point to a hardlink

Summary of Single File Download support for links (hard and symbolic):

Single file download of a symlink downloads the file it links to, if that file exists in the bucket. Tested OK.

Single file download of a hardlink file (JSON + inode) tested OK with both single-part and multipart files.

GC-1625 Incremental delete not working for deleted symlink, possibly due to suffix

A symlink that was deleted on the source was not deleted by the incremental job, possibly due to the use of suffix export.

GC-2789 3 nodes: Folder stats empty `searchctl stats view --folder <folderID>` and `searchctl archivedfolders stats --id <folderID>`

In some high-latency environments (observed in a HyperV test environment), users are unable to retrieve stats using searchctl archivedfolders stats --id or searchctl stats view --folder.

GC-2804 Phonehome Logs Upload Issue on HyperV OVF 3-Node

After successfully sending a heartbeat on Phonehome, the 'logsupload' command appears to be broken.

GC-2450 Backup and Restore Kafka topic's content

Summary of what is not restored after a backup and restore, as of 1.1.17-23170 v15, single node:

Checksum: Expected to be restored; a fix is required.

Kafka topics' content. This is expected; no fix is planned. Impact: Tools that rely on data from Kafka topics will show empty results. Example tools:

system alarms/notifications (searchctl notifications list).

job error details (searchctl archivedfolders errors).

Fast Incremental Known Limitations

- mode bit metadata information is not available in fast incremental mode

- owner and group are stored in numeric UID and GID format in the object header

- PowerScale API only available for OneFS 8.2.1 and higher

- Owner and Group meta-data cannot be recalled for objects that were uploaded with fast incremental, due to a bug in the PowerScale API. Recall should be done without meta-data. There may still be meta-data errors on recall without meta-data; these can be ignored.
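Since owner and group are stored as numeric UID and GID, mapping them back to names is left to the reader of the object. This is a minimal Python sketch for a Unix host, assuming you have already read the numeric values from the object header (reading the header is not shown, and the function name is hypothetical). Names only match the cluster's if the host uses the same user and group database as the PowerScale cluster; unknown IDs fall back to the number.

```python
import grp
import pwd


def resolve_owner(uid: int, gid: int) -> tuple:
    """Map numeric UID/GID to names on this host, falling back to the numbers."""
    try:
        user = pwd.getpwuid(uid).pw_name
    except KeyError:  # no such user in this host's user database
        user = str(uid)
    try:
        group = grp.getgrgid(gid).gr_name
    except KeyError:  # no such group in this host's group database
        group = str(gid)
    return user, group


if __name__ == "__main__":
    print(resolve_owner(0, 0))
```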

Move/Rename identification and management in object storage known limitations

- The PowerScale API used to update the S3 target with the new location of folders and files, and to remove folders and files from the old location, is only available for OneFS 8.2.1 and higher.

- For OneFS versions lower than 8.2.1, moved/renamed objects cannot be identified due to a PowerScale API issue, and these will be orphaned in the S3 target.
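Both fast incremental and move/rename handling depend on OneFS 8.2.1 or higher, so a quick version check can tell in advance which behavior to expect. This is a minimal Python sketch comparing dotted version strings as integer tuples; the function names are hypothetical and the version string is assumed to be purely numeric (for example "9.5.0.1").

```python
MIN_ONEFS = (8, 2, 1)  # minimum OneFS version named in the limitations


def parse_onefs_version(version: str) -> tuple:
    """Turn a dotted version such as "9.5.0.1" into (9, 5, 0, 1)."""
    return tuple(int(part) for part in version.strip().split("."))


def meets_onefs_minimum(version: str) -> bool:
    """True when OneFS is 8.2.1 or higher; tuples compare element by element."""
    return parse_onefs_version(version) >= MIN_ONEFS


if __name__ == "__main__":
    for v in ("8.1.2", "8.2.1", "9.5.0.1"):
        print(v, meets_onefs_minimum(v))
```

Tuple comparison avoids the string-comparison trap where "10.0" would sort before "8.2.1".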

Backblaze target requires https access

When configuring a folder for Backblaze, https access must be used; http is not supported.
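An endpoint can be checked before it is used to configure a folder. This is a minimal Python sketch of a hypothetical client-side helper (not a Golden Copy command) that adds https:// when no scheme is given and rejects http; the Backblaze endpoint in the example is illustrative. Supplying the https:// prefix in the same way also avoids the missing-prefix problem described for Wasabi in CBDM-168.

```python
from urllib.parse import urlparse


def normalize_endpoint(endpoint: str) -> str:
    """Return an https:// endpoint, adding the scheme if missing and rejecting http."""
    endpoint = endpoint.strip()
    if "://" not in endpoint:
        endpoint = "https://" + endpoint
    parsed = urlparse(endpoint)
    if parsed.scheme != "https":
        raise ValueError(f"https is required, got scheme {parsed.scheme!r}")
    if not parsed.netloc:
        raise ValueError("endpoint has no host")
    return endpoint


if __name__ == "__main__":
    print(normalize_endpoint("s3.us-west-002.backblazeb2.com"))
```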

T20868 Cannot run incremental update for same folder to multiple targets

If incremental updates for the same folder are run in parallel to multiple targets, only one incremental job will run. This affects incremental updates only; parallel full archives of the folder to multiple targets do not have this issue, and both complete successfully.

T21258 Version based recall uses UTC time for inputs

When specifying the --newer-than or --older-than options for version based recall, UTC time must be used.
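Converting a local time to UTC before passing it to --newer-than or --older-than avoids off-by-offset recalls. This is a minimal Python sketch, assuming the local time is written as ISO 8601 with its UTC offset; the output format shown is an assumption, so confirm the format these options accept in the searchctl help before using it. The function name is hypothetical.

```python
from datetime import datetime, timezone


def local_to_utc(timestamp: str) -> str:
    """Convert an ISO 8601 timestamp with a UTC offset to a UTC timestamp string.

    ASSUMPTION: the "%Y-%m-%d %H:%M:%S" output format is illustrative only.
    """
    aware = datetime.fromisoformat(timestamp)
    if aware.tzinfo is None:
        raise ValueError("timestamp must include a UTC offset, e.g. -05:00")
    return aware.astimezone(timezone.utc).strftime("%Y-%m-%d %H:%M:%S")


if __name__ == "__main__":
    print(local_to_utc("2024-03-01T09:00:00-05:00"))
```

Requiring an explicit offset avoids silently treating a naive local time as UTC, which is exactly the mistake this limitation warns about.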

T21759 Unable to export recall job multiple times

After an export of a recall job, the same job cannot be exported again.

Workaround: None available.

T21925 Export reporting limit

When running an export report to view errors or files uploaded, the number of records reported on is limited to 7 million records.

Workaround: Contact support.superna.net if you require reporting on more records. For overall reporting on a job use the Golden Copy GUI or CLI jobs view command.

T22680 Media mode: hardlink mode may upload more than one copy of the file

When there are multiple hardlinks to the same file, parallel processing may cause multiple instances of the real file to be uploaded until completion of the copy of the real file is known to all Golden Copy nodes.

Workaround: None available. For a large number of hardlinks to the same file, the number of duplicate files uploaded is expected to be far smaller than the number of links.

T22469 Media mode does not copy broken symbolic links

A symbolic link that is broken, for example because its target file was renamed or deleted, is not uploaded by Golden Copy.

Workaround: None available.

T22630 Media mode may orphan hardlink objects when all Golden Copy nodes are not able to archive

If a multi-node Golden Copy deployment has nodes that are unable to copy for any reason, orphaned inode objects or orphaned associated hard link objects may result, because the copy of the partner object was assigned to a node that cannot copy.

Workaround: None available

T23130 Media mode has no stats for broken symlink

If there are broken symlinks encountered during a Golden Copy job, there are no statistics to track this occurrence.

Workaround: None available

GC-433 PowerScale may return success for recalled file even if PowerScale blocks saving the file

In some cases PowerScale returns a success message for a recalled file even though a restriction on the recall directory on the PowerScale has blocked the file from being saved.

Impact:

- recall statistics may incorrectly show success for files that are not actually saved on the filesystem

- Pipeline 'trash-after-recall' option will delete the object due to success message from PowerScale even though the object has not been successfully recalled.

GC-459 Recall error for multi-part file leaves incomplete file on file system

For the case where a multi-part recall fails, the error in recalling the file is properly tracked in the statistics and in the errors command, but the partial incomplete file is left behind on the filesystem.

Impact: If recall is configured to skip files that have already been downloaded, the incomplete file may be skipped on a subsequent recall.

Workaround: Use the errors command to identify the files that failed, and remove them manually from the filesystem.

GC-450 Golden Copy - Traceback on cluster up

On cluster up, an error and associated stack trace is printed to the console. This error is benign and can be ignored.

Workaround: none needed.

GC-446 Stats conversion from bytes incorrect

Single file download from the Wasabi cloud type: by design, only the us-east-1 region is supported.

GC-874 Audit - Job is stalled after hitting exactly 50k files

GC-938 Incremental - Only the original hardlink is updated possibly due to changelist limitation

GC-1020 Cloud Browser - Unable to download file from Wasabi

GC-1308 Increase/Customize/Remove the 1000 error limit

- Log in to the Web UI.

- Go to the Archive Jobs tab.

- Scroll down to Archive Jobs Errors to see a list of archived folders.

- Click an archived folder to list its errors.

- Limitation: only the last 1000 errors of any job on that archived folder can be displayed.

Cloud Browser for Data Mobility

Known Issues Cloud Browser for Data Mobility

CBDM-168 Wasabi - Unable to add archivedfolders from CLI without using https://

Previously, a Wasabi target could be added without the https:// prefix. Now adding it without the prefix crashes with the error "String index out of range: -1".

CBDM-512 CSV Export - Special characters probably cause columns to be populated incorrectly

Special characters cause columns to be populated incorrectly. Observed with build 1.1.16-23119, multinode, v15.4, AWS, Ofs9501.

CBDM-322 Cloud to Premise - Recall phase is not skipping existing files