Search & Recover & Golden Copy Archiving Solution

- Solution Overview - How to use an Archive Staging Area With Search & Recover and Golden Copy

- Deprecation Notice

- How to locate aging data for Archive With Search and Recover

- How to move the files to the Archive Staging Area

- How to Recall Data for Users

- Solution Overview - How to archive data in place with Search & Recover and Golden Copy

- Requirements

- How to locate aging data for Archive With Search and Recover

- Copy Search results file to Golden Copy for Archive

- Delete the Data Using Search & Recover

- Recalling Archived Data

Solution Overview - How to use an Archive Staging Area With Search & Recover and Golden Copy

This workflow requires both products and provides an archive solution with S3 or Azure Blob storage. Users must request access to data that has been archived, and a recall job is then created to return the data to the cluster. It is a bulk archive solution aimed at large cleanups every few months.

Deprecation Notice

- As of 1.1.4, the Search & Recover CSV format is deprecated. A 1.1.4 build supports a CSV import format that contains only the absolute path (/ifs/...) of each file to be copied. This allows customer scripts, or the command builder feature in Search & Recover, to generate a simple CSV or flat file that contains only the paths of the files for Golden Copy to copy.

- Requires 1.1.4 build > 21124
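As a rough illustration of the path-only format described above, the Python sketch below flags any line in an import file that is not an absolute path under /ifs/. The function name `find_invalid_lines` and the sample data are hypothetical and not part of either product. The check is an assumption about what a well-formed line looks like, not the validation Golden Copy itself performs.

```python
from typing import Iterable, List, Tuple


def find_invalid_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, text) for lines that are not absolute /ifs/ paths."""
    invalid = []
    for number, raw in enumerate(lines, start=1):
        text = raw.rstrip("\r\n")
        if not text:
            continue  # ignore blank lines, such as a trailing newline
        if not text.startswith("/ifs/"):
            invalid.append((number, text))
    return invalid


# Hypothetical file content: line 2 is a leftover header from a multi-column export.
sample = [
    "/ifs/data/projects/report.docx\n",
    "name,size,path\n",
    "/ifs/data/projects/old.log\n",
]
problems = find_invalid_lines(sample)
```

An empty result suggests the file contains only /ifs/ paths; any reported line should be fixed or removed before the file is handed to Golden Copy.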

How to locate aging data for Archive With Search and Recover

- File age, based on last modified or last accessed time, is the most common criterion for locating data.

- Log in to Search & Recover.

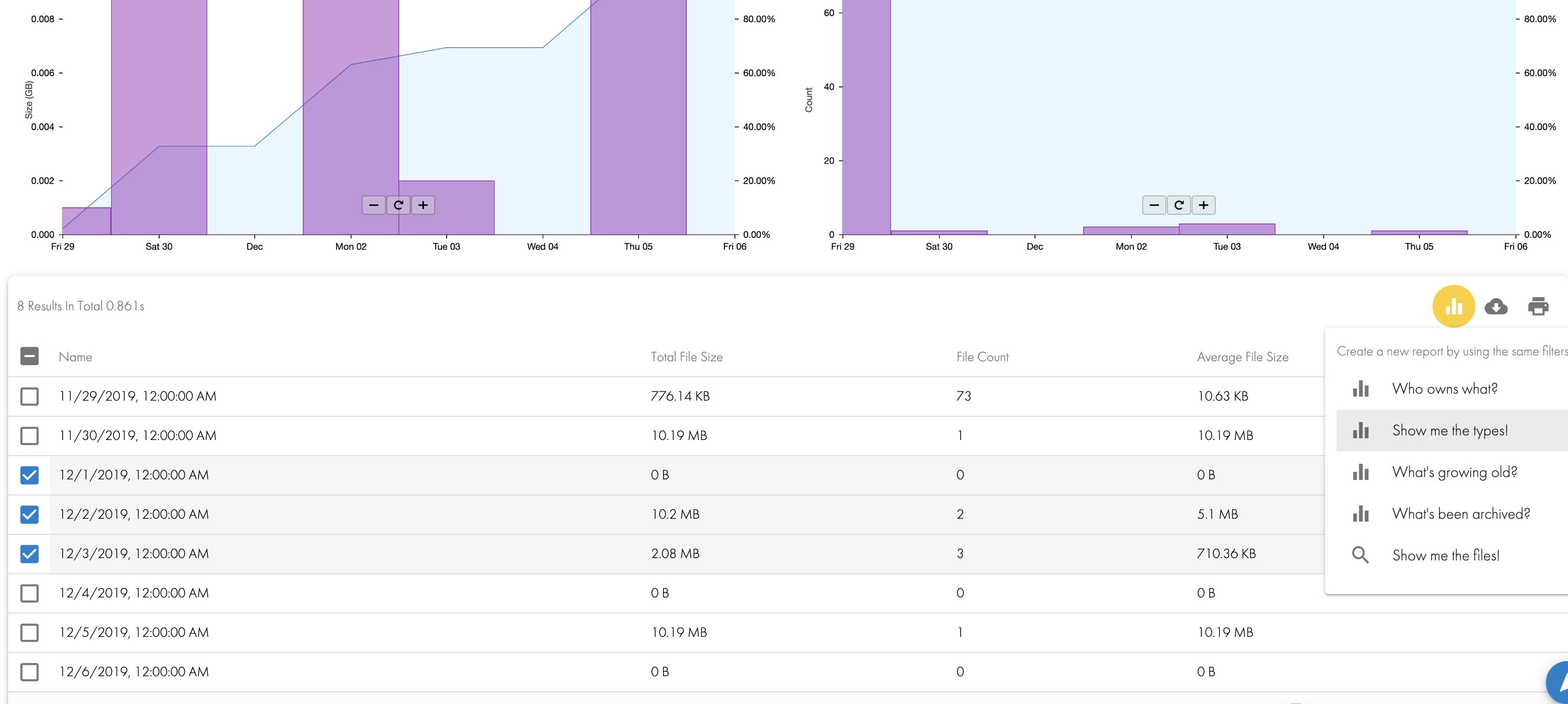

- Open Quick Reports and select What's Growing Old?

- Move the results to Archive Staging Area.

- Create /ifs/archivestaging

- Use this guide to move data from the search result list to the archive staging area. Replace the example paths in the guide with the target path above.

- Repeat the above steps whenever you want to clean up data and move it to an S3 bucket. The example script referenced above has an option to move data to the staging area. This solution also retains the original path of each file under the staging folder base path, for example /ifs/archivestaging/ifs/data/moveddata.
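The path retention described in the last step can be sketched in Python as follows. This is only an illustration of the naming rule, assuming the staging base /ifs/archivestaging from the steps above; the function `staging_path` is hypothetical and does not move any data.

```python
import posixpath

STAGING_BASE = "/ifs/archivestaging"  # staging folder created in the steps above


def staging_path(original: str, base: str = STAGING_BASE) -> str:
    """Return where a file lands in staging when its original path is retained."""
    if not original.startswith("/ifs/"):
        raise ValueError(f"expected an absolute /ifs/ path, got {original!r}")
    # Drop the leading slash so the original path nests under the base path.
    return posixpath.join(base, original.lstrip("/"))


moved = staging_path("/ifs/data/moveddata")
```

Here `moved` is /ifs/archivestaging/ifs/data/moveddata, matching the example in the text.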

How to move the files to the Archive Staging Area

- On the Golden Copy VM, add /ifs/archivestaging as an archived folder definition. See the quick start examples here.

- Configure the folder for copy mode

- Run the copy job

- Verify all data was successfully copied by viewing the job report or the running job.

- searchctl job view --id <job name> --follow

- searchctl archivedfolders export --id <job name>

- Delete the data under /ifs/archivestaging

How to Recall Data for Users

- When a user request to recall data is received, view the S3 bucket with any S3 browser tool to locate the path of the files.

- Start a recall job on the Golden Copy VM to recall the data back into the staging area. Example:

- searchctl archivedfolders recall --id <folder id> --subdir /ifs/archivestaging/ifs/path-to-user-data

- Once the recall job finishes, create an SMB share on the recalled data to give the user access to it.

- Summary:

- This keeps the data under the original path where the user last had access to it.

- The staging area collects data in one location that is easy to copy and manage separately from the rest of the file system.

- Users must request data back, which allows data access requests to be monitored.
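The recall step above can be sketched as a small Python helper that builds the recall command from a user's original path. It uses only the command and flags shown in the example above. The helper name `recall_command` and the folder ID `example-folder-id` are placeholders, and the sketch only assembles the command without running it.

```python
import posixpath
import shlex
from typing import List

STAGING_BASE = "/ifs/archivestaging"  # staging folder used in this workflow


def recall_command(folder_id: str, user_path: str) -> List[str]:
    """Build the recall command for data originally stored at user_path."""
    if not user_path.startswith("/ifs/"):
        raise ValueError(f"expected an absolute /ifs/ path, got {user_path!r}")
    # The archived copy keeps the original path under the staging base.
    subdir = posixpath.join(STAGING_BASE, user_path.lstrip("/"))
    return ["searchctl", "archivedfolders", "recall",
            "--id", folder_id, "--subdir", subdir]


# "example-folder-id" is a placeholder; use the real archived folder ID.
cmd = recall_command("example-folder-id", "/ifs/data/moveddata")
printable = shlex.join(cmd)  # shell-safe string for review before running
```

Reviewing `printable` before running it on the Golden Copy VM gives a chance to confirm the subdirectory against what the S3 browser shows.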

Solution Overview - How to archive data in place with Search & Recover and Golden Copy

This solution keeps the data in place, meaning it does not use a staging area to copy or move data to the archive. Data can be recalled using the Golden Copy recall feature. NOTE: Recall does not place the data back into the location it was archived from. The data is recalled to the staging area, but the relative file path is preserved.

Requirements

- Search & Recover

- Golden Copy

- S3 Target Storage

How to locate aging data for Archive With Search and Recover

- File age, based on last modified or last accessed time, is the most common criterion for locating data.

- Log in to Search & Recover.

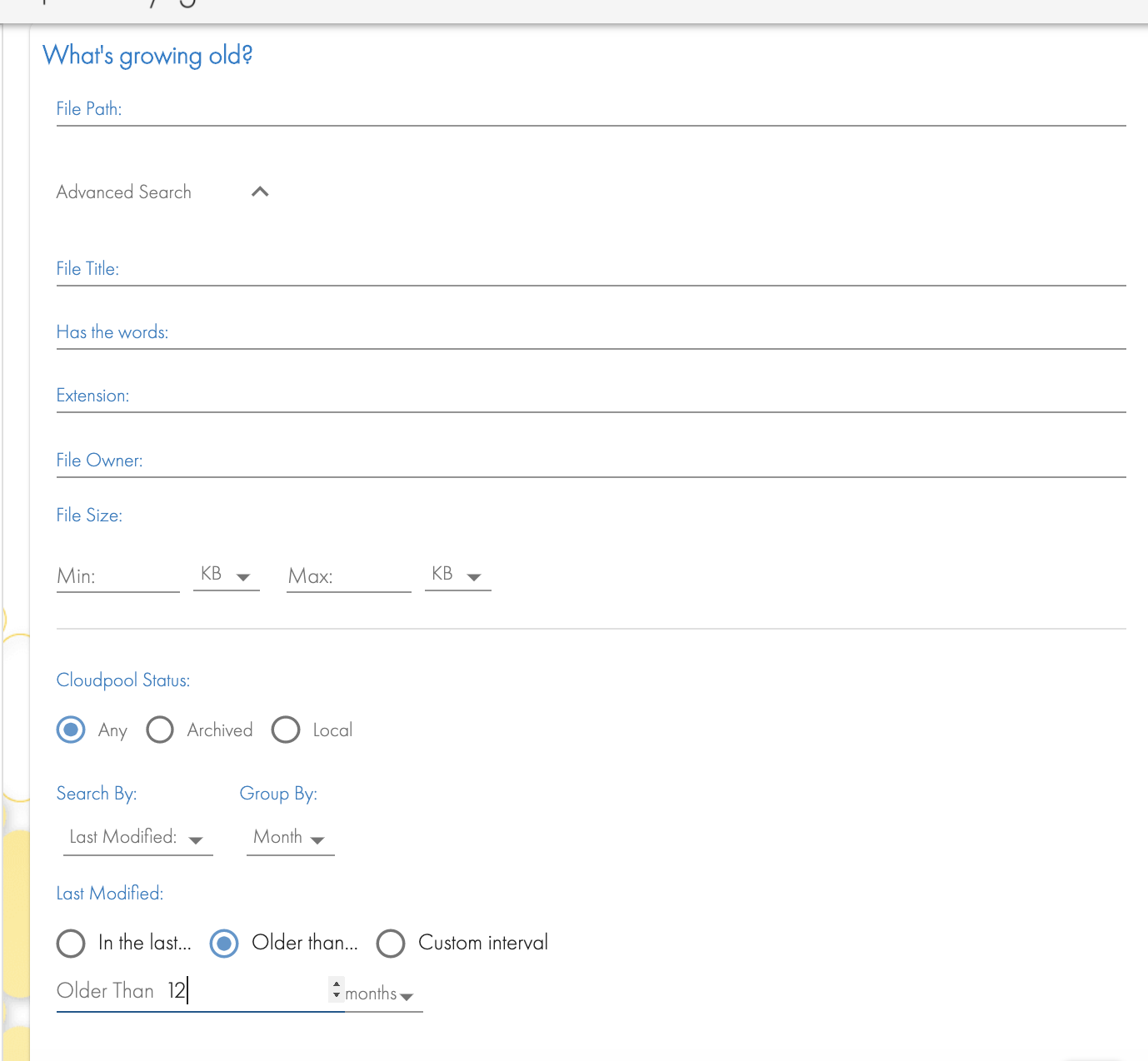

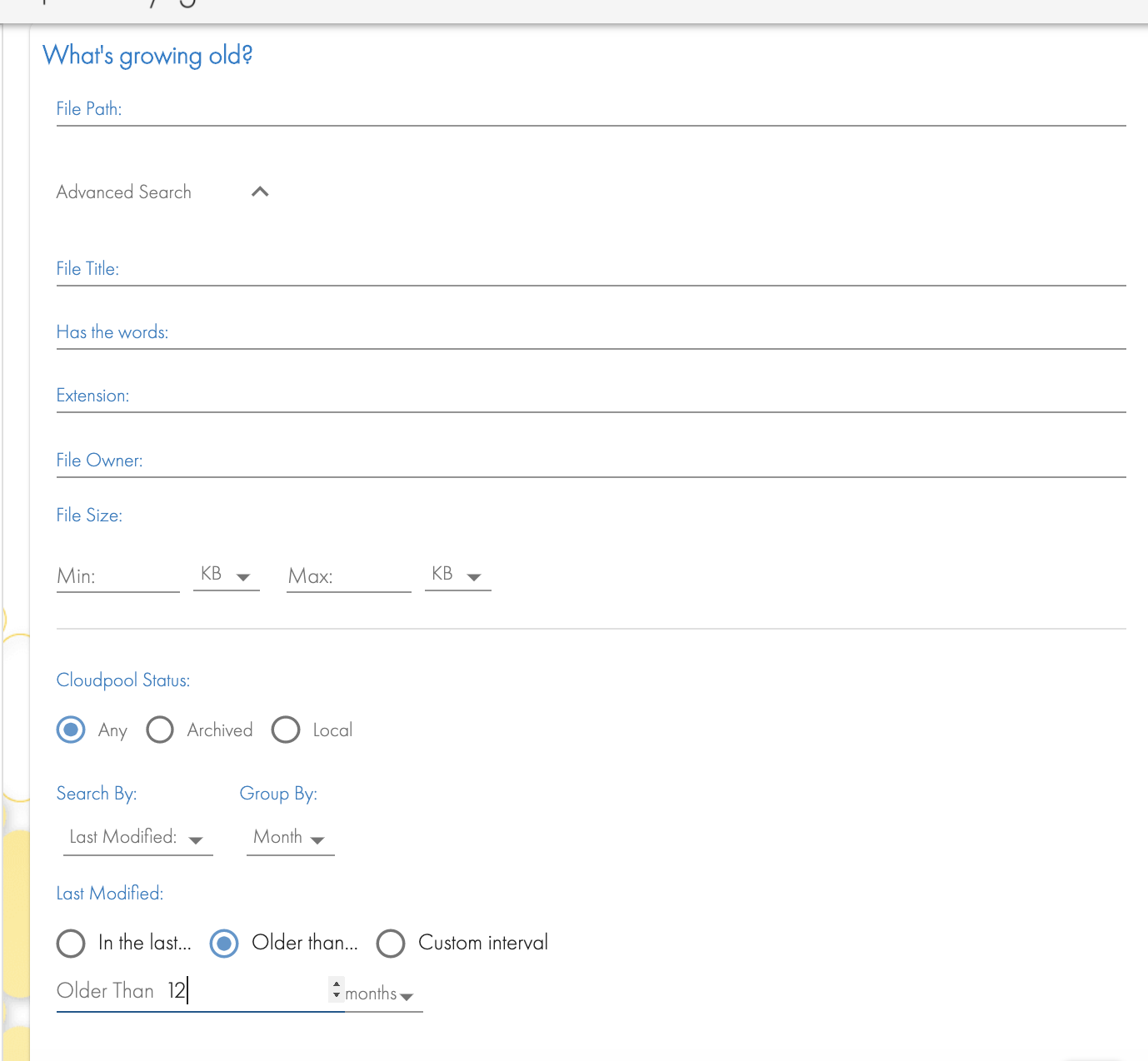

- Open Quick Reports and select What's Growing Old?

- The resulting table shows each month and year containing data that meets the age search criteria. NOTE: You may enter a path to narrow the search to a specific part of the file system, and you may apply other search criteria in the Advanced window of the What's Growing Old? Quick Report.

- Select the months or rows in the table and select the menu option "Show me the files"

Copy Search results file to Golden Copy for Archive

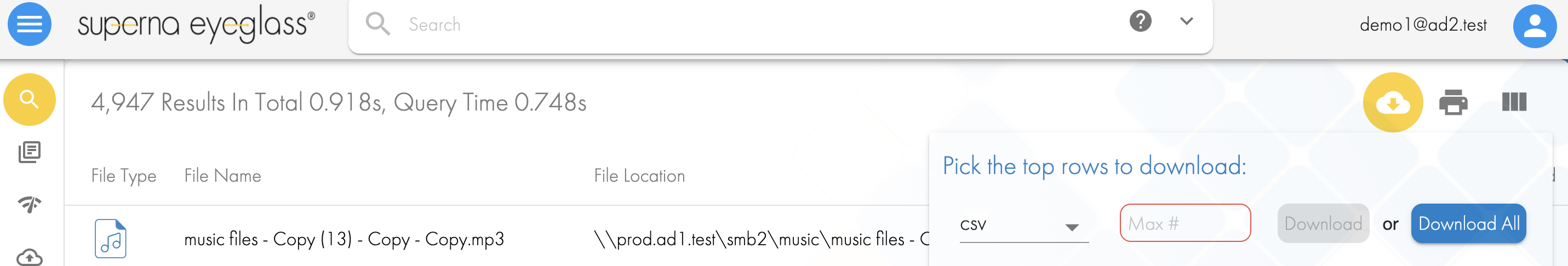

- From the file results list, save the results to a CSV file that Golden Copy can use to archive the files in the search list. NOTE: You may edit the CSV to delete files that should not be archived.

- Because of the deprecation notice, edit the CSV file and delete all columns except the file path (/ifs/...). This simpler format will be the only format supported. The command builder feature can export a search result to a file with one file path per row and a carriage return after each row.

- Use WinSCP to copy the saved CSV file from Search to Golden Copy node 1, and save the file in /opt/superna/var/csvimport

- Authenticate as the ecaadmin user.

- Run an archive job and specify the CSV file:

- searchctl archivedfolders archive --id 3fc3795a731daef8 --uploads-file /opt/superna/var/csvimport/igls_search_1606924303.csv

- Monitor the copy job progress as normal

- Done
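The column cleanup described in the steps above could be scripted rather than done by hand. The Python sketch below keeps only the first field in each row that starts with /ifs/, so it does not depend on knowing the export's column names. The sample columns are invented for the example, and the newline used as the row terminator is an assumption; check the result against what your Golden Copy build accepts.

```python
import csv
import io
from typing import Iterable, Iterator, List


def extract_paths(rows: Iterable[List[str]]) -> Iterator[str]:
    """Yield the first /ifs/ field from each CSV row, skipping rows without one."""
    for row in rows:
        for field in row:
            if field.startswith("/ifs/"):
                yield field
                break


# Hypothetical export; the real Search & Recover column layout may differ.
export = io.StringIO(
    "name,size,path\n"
    "report.docx,1024,/ifs/data/projects/report.docx\n"
    "old.log,2048,/ifs/data/projects/old.log\n"
)
paths = list(extract_paths(csv.reader(export)))
path_only = "".join(p + "\n" for p in paths)  # one path per row
```

With a real export, replace `export` with an open file handle and write `path_only` to the file that will be copied to /opt/superna/var/csvimport.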

Delete the Data Using Search & Recover

- OPTIONAL - If the objective is to delete the aged files from the file system, follow these steps.

- Log in as an admin user so that the command builder option appears in the UI when running the steps above.

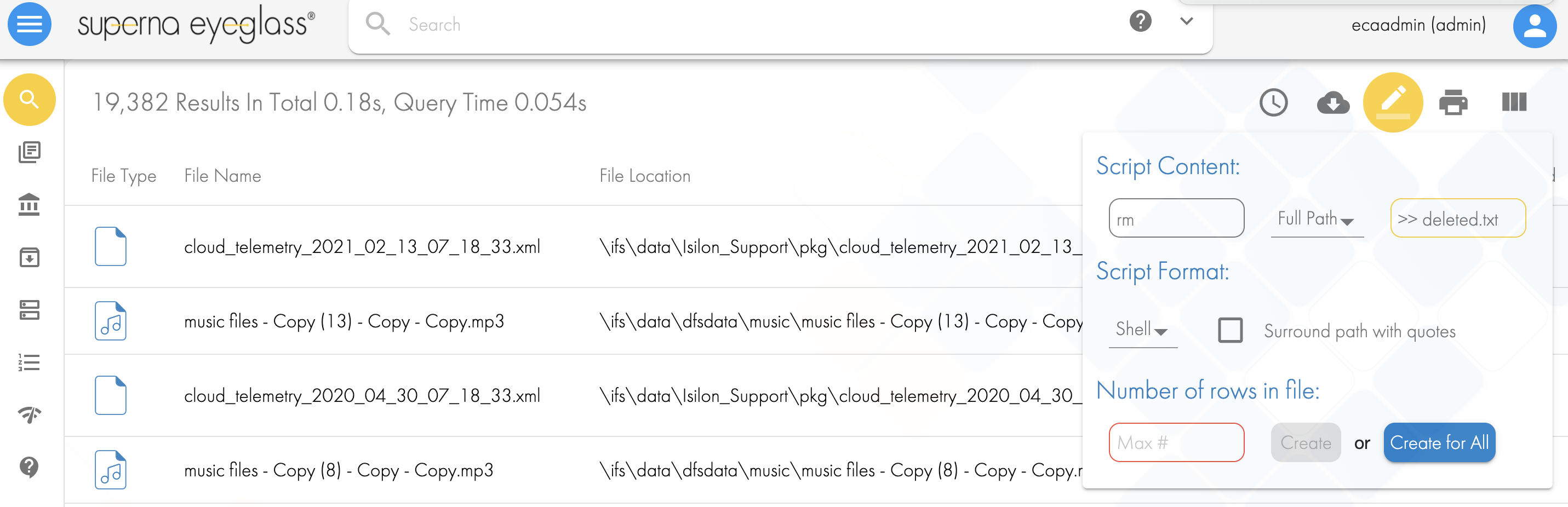

- Use the script builder icon, enter the rm ssh command in the first box, and log the delete to a file in the second box, as shown in the screenshot. This creates a bash script with one delete command for each file in the results.

- Copy this bash script to the Isilon; any location is acceptable.

- Make the file executable: chmod 777 filename.sh

- Make sure you are logged in as a user with permission to delete files regardless of ACLs, for example root.

- Execute the script and store the log somewhere as a record of the deletion.

- NOTE: The copied data will have the same path in the object store as it had on the cluster file system.
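For readers who generate the deletion script outside the command builder, the Python sketch below shows one way to produce the body of such a script from a list of paths. It is not the command builder's actual output. The function `build_delete_script` and the log location are hypothetical, and the generated text should be reviewed before it is run on the cluster.

```python
import shlex
from typing import Iterable


def build_delete_script(paths: Iterable[str], log_file: str) -> str:
    """Return shell commands that remove each path and append the result to a log."""
    log = shlex.quote(log_file)
    lines = []
    for path in paths:
        if not path.startswith("/ifs/"):
            raise ValueError(f"refusing to delete a non-/ifs/ path: {path!r}")
        quoted = shlex.quote(path)  # protects spaces and shell metacharacters
        # rm -v reports each removed file; output and errors both go to the log.
        lines.append(f"rm -v -- {quoted} >> {log} 2>&1")
    return "\n".join(lines) + "\n"


# Hypothetical input: one archived file and a log file path.
script = build_delete_script(
    ["/ifs/data/projects/old report.docx"],
    "/ifs/data/delete.log",
)
```

Quoting each path matters because file names with spaces or special characters would otherwise be split or interpreted by the shell.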

Recalling Archived Data

In most cases the archived data will not be needed again. If a recall is required, the Golden Copy recall feature can recall the archived data, by path only, to the recall staging area. The data can be shared from this location or copied back to its original location. The recall staging area retains the absolute file path of the recalled data.

See the recall instructions here.
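Because the recall staging area retains the absolute file path, the original location of a recalled file can be derived by removing the staging base from its path. The Python sketch below illustrates this. The function `original_location` and the base /ifs/recallstaging are hypothetical; use the recall staging path configured in your environment.

```python
def original_location(recalled: str, recall_base: str) -> str:
    """Strip the recall staging base to recover the original absolute path."""
    base = recall_base.rstrip("/")
    if not recalled.startswith(base + "/"):
        raise ValueError(f"{recalled!r} is not under {base!r}")
    return recalled[len(base):]


# "/ifs/recallstaging" is a placeholder for the real recall staging area.
restored = original_location(
    "/ifs/recallstaging/ifs/data/projects/report.docx",
    "/ifs/recallstaging",
)
```

Here `restored` is /ifs/data/projects/report.docx, which is the destination to use if the file is copied back into place.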