- Monitor DR Readiness

- SyncIQ Audit Monitor - Verify Data is Replicating

- Overview

- Requirements

- How it Works

- Configuration

- Policy Readiness and DFS Readiness

- Policy/DFS Readiness - OneFS SyncIQ Readiness

- New Validations in Release 2.0 and later

- Previous Failed DFS failover share prefix

- Policy Source Nodes Restriction

- SyncIQ Policy Status Validations

- Quota Domain Validation

- SyncIQ File Pattern Validation

- Corrupt Failover Snapshots

- Duplicate SyncIQ Local Targets

- Target Writes Disabled

- Policy/DFS Readiness - Eyeglass Configuration Replication Readiness

- Policy / DFS Readiness - Zone Configuration Replication Readiness

- Zone and IP Pool Readiness

- Zone and IP Pool Readiness - OneFS SyncIQ Readiness

- New Validations in Release 2.0 and later

- New 2.5.6 or later releases

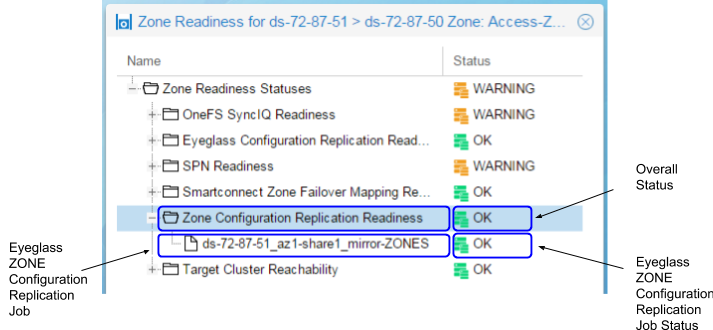

- Zone and IP Pool Readiness - Zone Configuration Replication Readiness

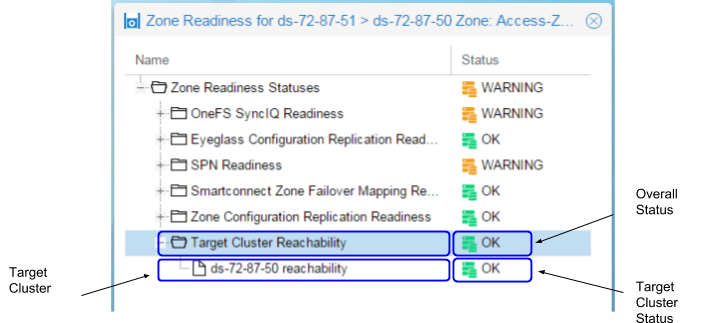

- Zone and IP Pool Readiness - Target Cluster Reachability

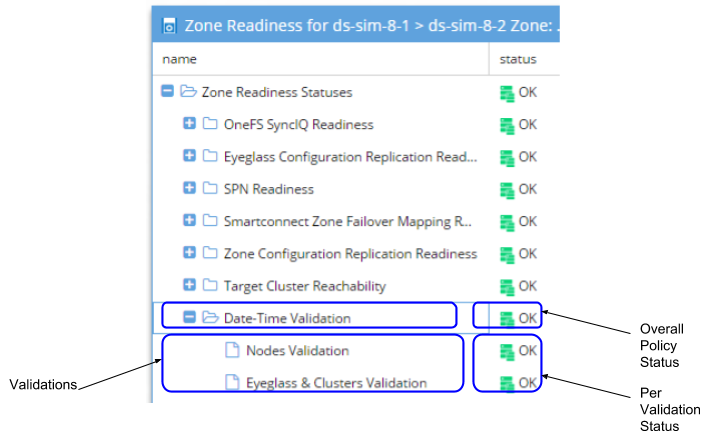

- Zone and IP Pool Readiness - Date-Time Validation

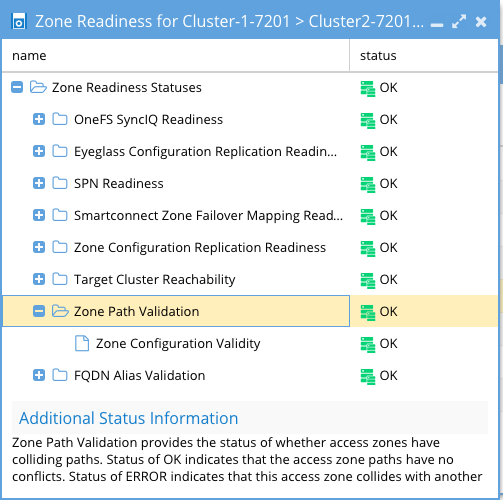

- Zone and IP Pool Path Validation

- Access Zone and IP Pool FQDN Alias Validation

- Access Zone and IP Pool - SPN (Service Principal Name) Active Directory Delegation Validation

- Prerequisite: 2.5.6 or later

- Documentation to Correct warnings

- SPN AD Delegation Example

- SPN test Failure Conditions and Error Messages

- Access Zone and IP Pool - DNS Dual Delegation Validation

- Prerequisite: 2.5.6 or later

- Documentation to correct Warnings

- Unsupported Configurations

- Example Dual DNS validation

- Warnings and Statuses for all Possible Dual Delegation Validations

- Pool with ignore alias igls-ignore-

- Zone or pool with correct settings:

- SmartConnect Zone name/alias unknown:

- One NS record for SmartConnect Zone name/alias:

- Detected three NS records for SmartConnect Zone name/alias:

- One of the NS records is incorrect for SmartConnect Zone name/alias:

- There are no NS records for SmartConnect Zone name/alias:

- Two NS records point to same IP addresses for SmartConnect Zone name/alias:

- SSIP of source cluster (in cluster) is incorrect:

- All Failover type Domain Mark Validation

- Prerequisite: 2.5.6 or later

- Documentation to correct Warnings

- IP Pool Failover Readiness

- Pool Validations

- Pool Readiness Validation

- Un-mapped policy validation Overview

- Overall pool validation status

- How To Configure Advanced DNS Delegation Modes Required for Certain Environments

- What's new

- How to change the values

- How the tags work

- How to Configure Advanced SPN delay mode for Active Directory Delegation

- How to Disable AD or DNS Delegation Validations - Advanced Option

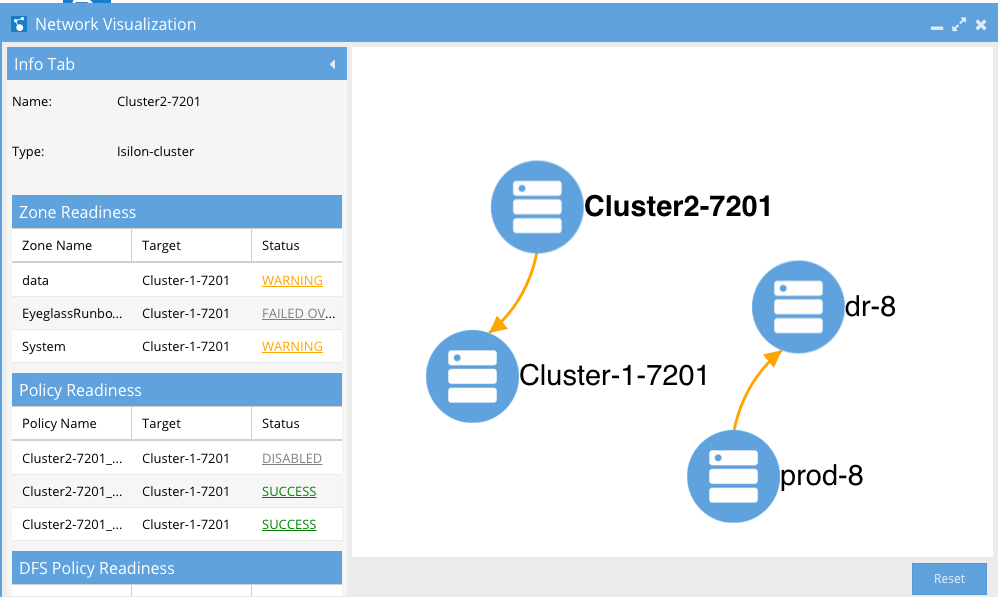

- Network Visualization

Monitor DR Readiness

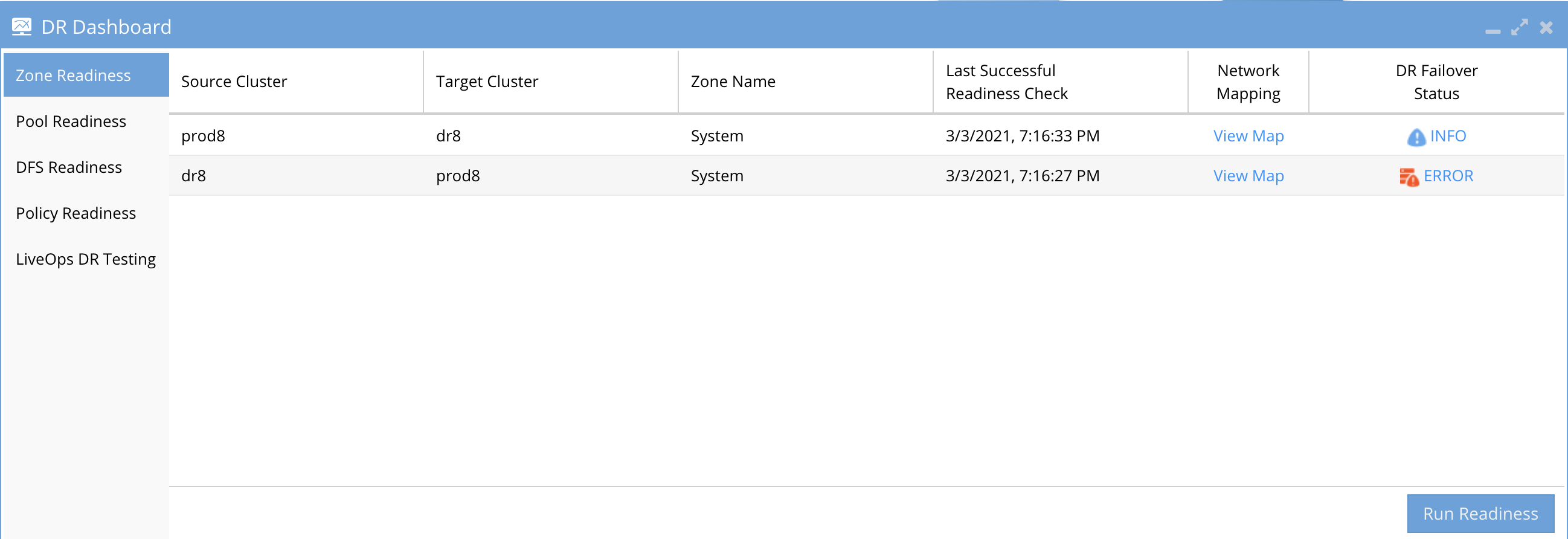

To view and manage your DR readiness, use the DR Dashboard window:

Policy Readiness: Provides a view of your readiness for SyncIQ Policy Failover.

Zone Readiness: Provides a view of your Access Zone Failover Readiness.

IP Pool Readiness: Provides a view of your IP Pool Failover Readiness.

DFS Readiness: Provides a view of your readiness for a SyncIQ Policy DFS Mode Failover.

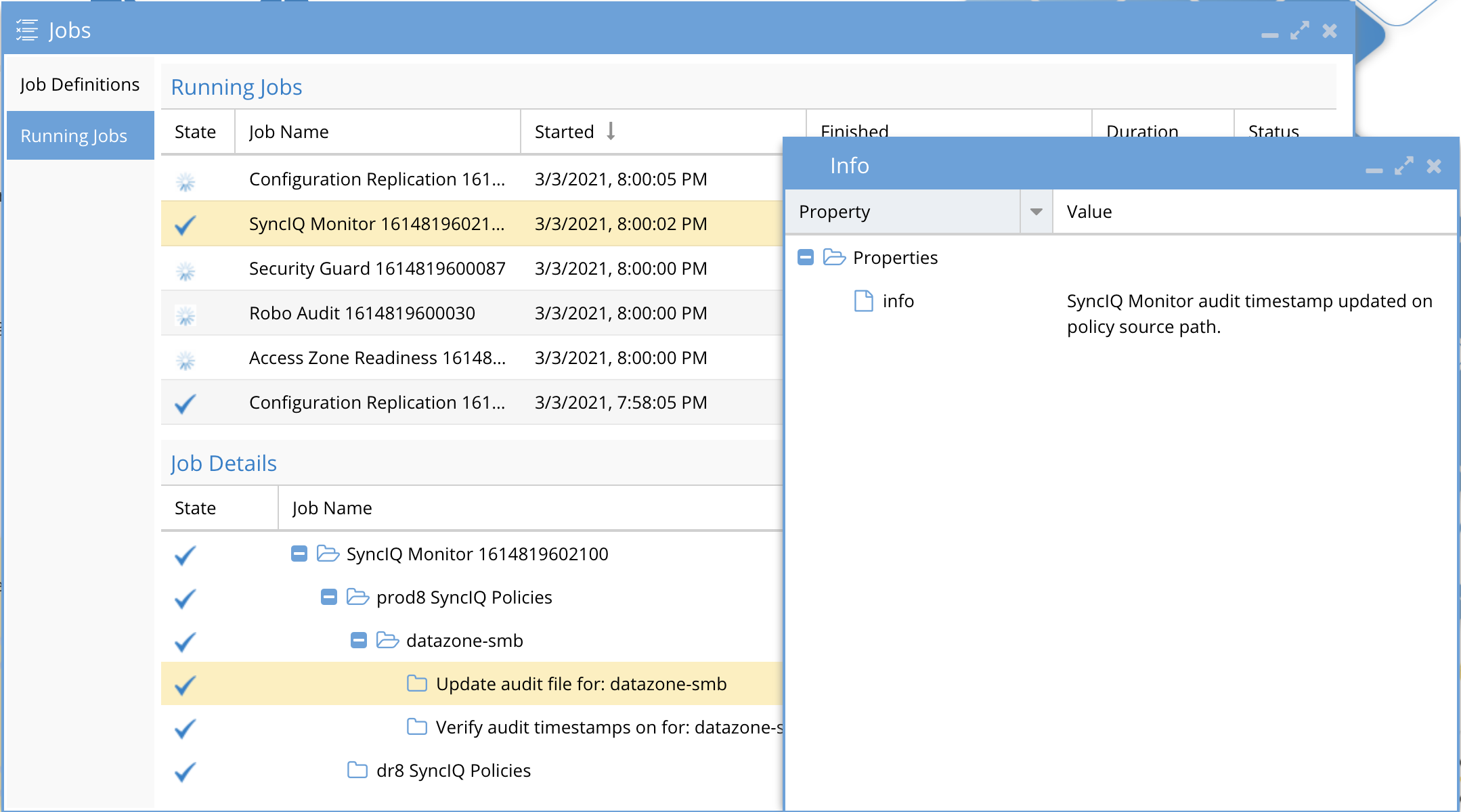

SyncIQ Audit Monitor - Verify Data is Replicating

Overview

The SyncIQ Audit Monitor audits SyncIQ replication by writing a timestamped test file to the source cluster and, after SyncIQ runs, verifying that the same timestamp appears on the target cluster. This end-to-end check provides the highest level of confidence in your off-site data. The job is disabled by default and requires configuration.

Requirements

- 2.5.7 or later

How it Works

- A test file containing a timestamp is created in the hidden monitor directory using the cluster file API.

- Eyeglass monitors the SyncIQ policy for a successful run.

- After SyncIQ runs, the file API is used on the remote DR cluster to read the file and check that its timestamp matches the source cluster.

- This validation must pass. If it fails, an alarm is raised to flag that a data sync issue has been detected.

- If the validation passes, the job completes without raising any alerts.

- Successful monitor job on a single SyncIQ policy configured for monitoring.
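The audit check described above can be sketched in Python. This is a minimal, hypothetical illustration rather than Eyeglass code: the file name `synciq_audit.txt`, the ISO 8601 timestamp format, and the `read_target` callable (a stand-in for reading the file through the cluster file API on the DR cluster) are all assumptions.

```python
from datetime import datetime, timezone
from typing import Callable, Optional

# Hypothetical name for the test file inside the hidden .iglssynciqmonitor folder.
AUDIT_FILE = "synciq_audit.txt"


def write_audit_stamp(now: Optional[datetime] = None) -> str:
    """Return the timestamp text that would be written to the source test file."""
    now = now or datetime.now(timezone.utc)
    return now.isoformat()


def audit_policy(source_stamp: str, read_target: Callable[[str], Optional[str]]) -> str:
    """After a successful SyncIQ run, compare the target copy with the source stamp.

    read_target stands in for a file API read on the DR cluster and returns
    None when the file does not exist there.
    """
    target_stamp = read_target(AUDIT_FILE)
    if target_stamp is None:
        return "ALARM: test file not found on target"
    if target_stamp.strip() != source_stamp:
        return "ALARM: timestamp mismatch, data sync issue detected"
    return "OK"
```

For example, `audit_policy(stamp, lambda name: stamp)` returns `"OK"`, while a stale or missing target file returns an `ALARM` message, matching the pass and fail outcomes listed above.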

Configuration

To monitor a policy's data integrity, complete the following steps on each SyncIQ policy that requires monitoring. Only those policies need these steps. After they are completed on a policy, all other steps are automated.

- Log in to the source cluster as root.

- Create a hidden folder at the base of the SyncIQ policy path. For example, if the policy path is /ifs/data/userdata/smbdata:

- mkdir -p /ifs/data/userdata/smbdata/.iglssynciqmonitor (note: the leading . is required)

- chown eyeglass:wheel /ifs/data/userdata/smbdata/.iglssynciqmonitor (allows the eyeglass service account to create a test file in this folder)

- Enable the SyncIQ monitor job and verify that it is enabled:

- ssh to Eyeglass as admin

- sudo -s

- igls admin schedules set --id SyncMonitorTask --enabled true

- igls admin schedules (verify that the job is enabled and that the default hourly schedule is set up)

- If the monitor detects a data sync issue, an alarm is raised.

Policy Readiness and DFS Readiness

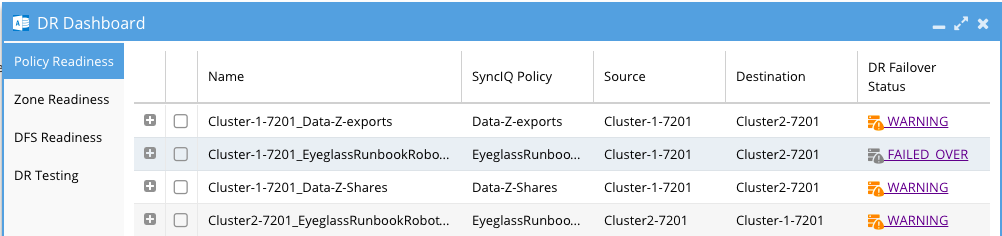

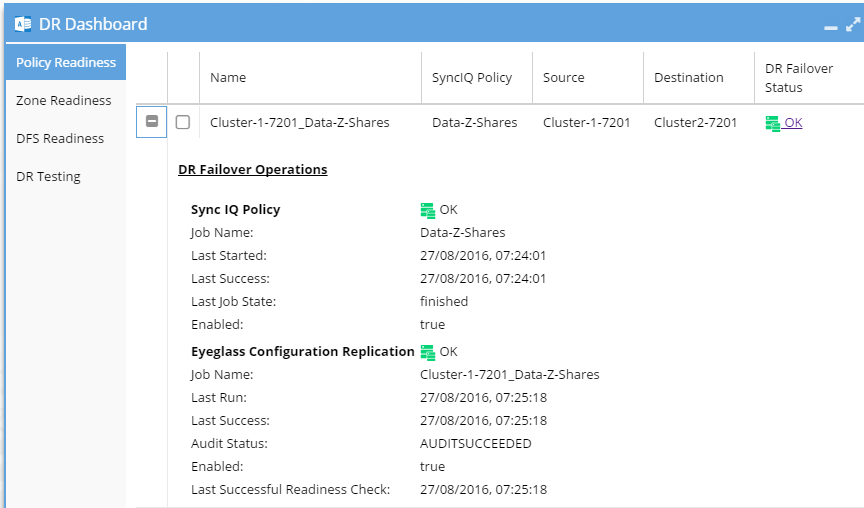

The DR Failover Status on the Policy Readiness or DFS Readiness tabs provides you with a quick and easy way to assess your Disaster Recovery status for a SyncIQ Policy Failover (non-DFS mode) or SyncIQ Policy Failover (DFS mode).

Each row contains the following summary information:

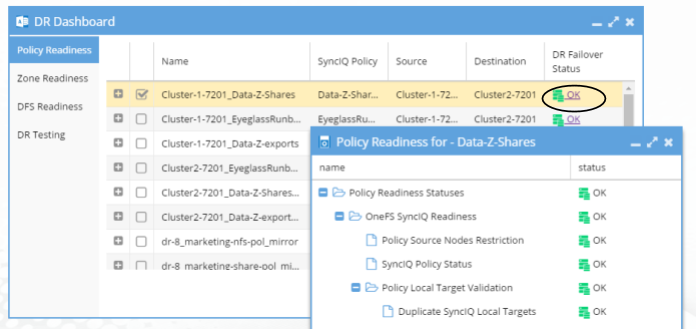

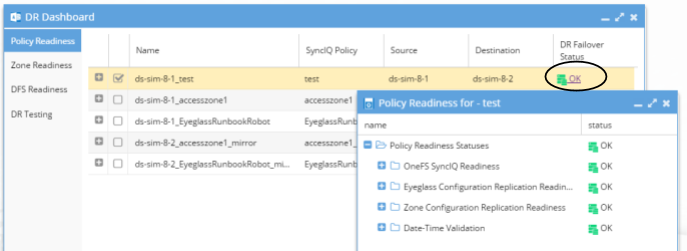

Click on the DR Failover Status link to see the details for each of the failover validation criteria for a selected Job.

The DR Failover Status will be one of the following:

The DR Failover Status is based on Status for each of the following areas for Policy and DFS Failover:

Additional information for Policy / DFS Readiness criteria is provided in the following sections.

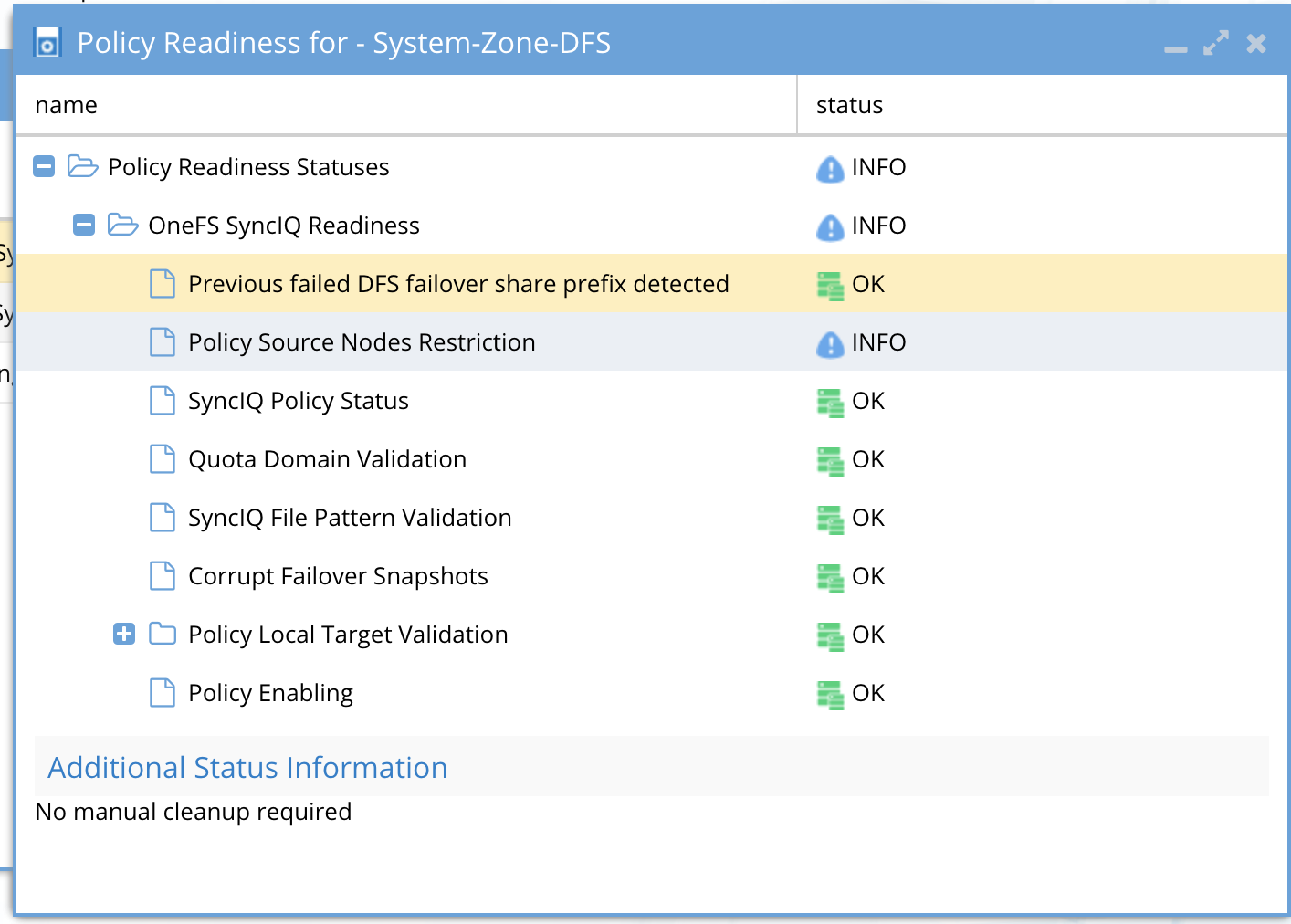

Policy/DFS Readiness - OneFS SyncIQ Readiness

The OneFS SyncIQ Readiness criteria is used to validate that the SyncIQ policy has been successfully run and that it is in a supported configuration. For each SyncIQ Policy the following checks are done:

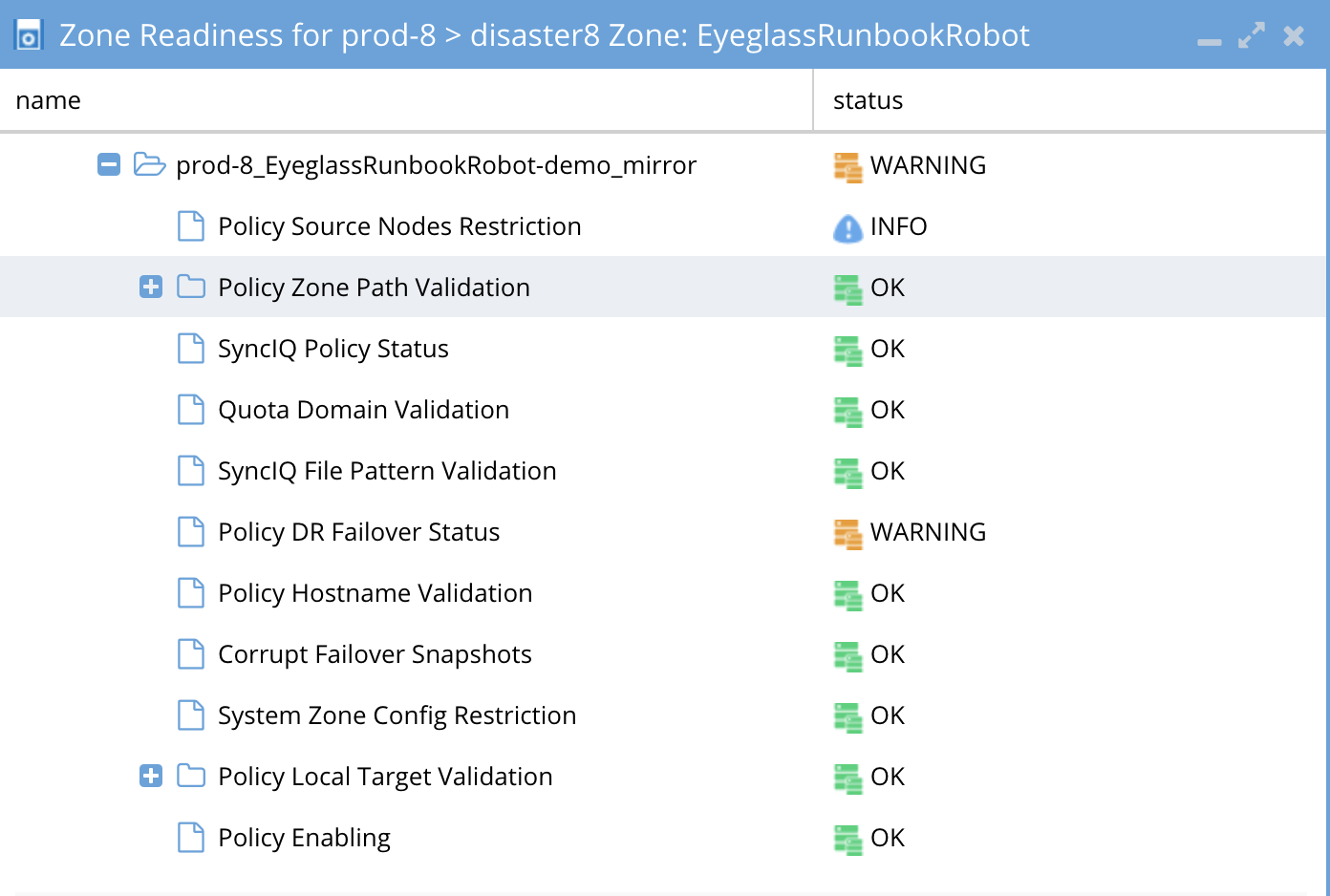

New Validations in Release 2.0 and later

| SyncIQ Policy: | Notes: |

Previous Failed DFS failover share prefix | This detects any prefixed dfs shares on the active cluster. The active cluster should not have any prefixed share on the SyncIQ policy. This validation will indicate if prefixed shares are detected that should be cleaned up prior to any failover. |

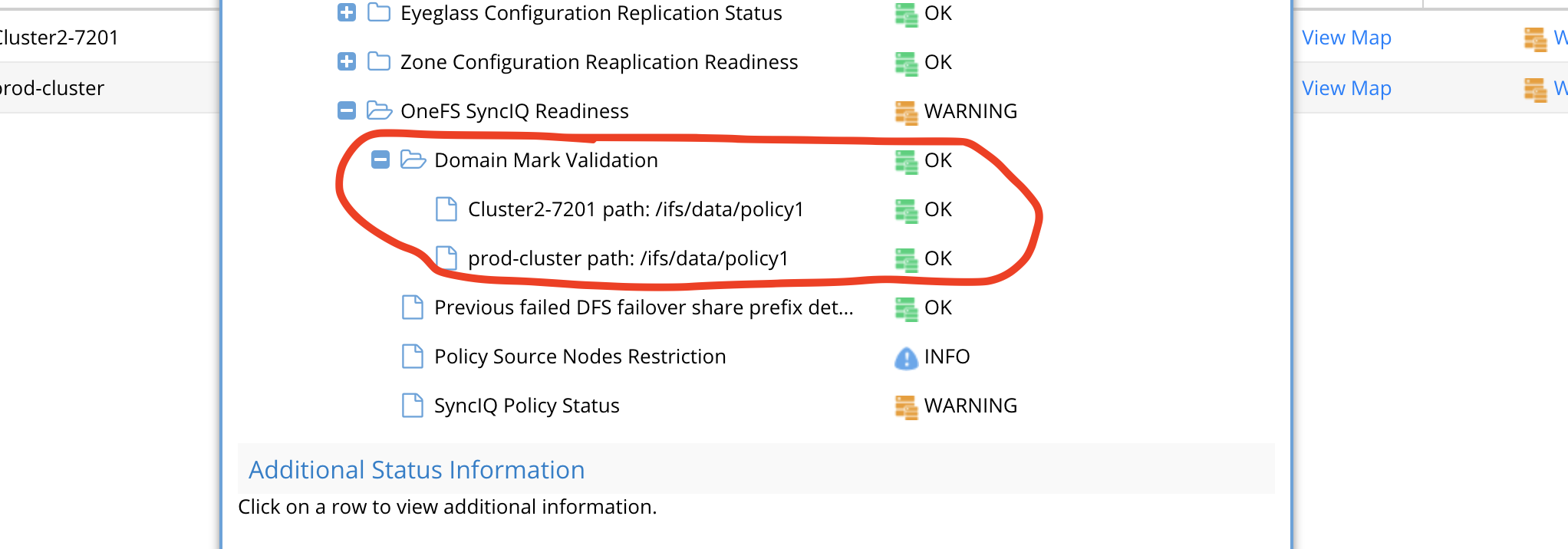

Domain Mark Validation | Domain Mark validation applies to all failover types and is described here. DR Status is Warning when the validation fails. Failover should not be started with this validation warning. |

Policy Source Nodes Restriction | Validate PowerScale best practices that recommend that SyncIQ Policies utilize the Restrict Source Nodes option to control which nodes replicate between clusters. |

SyncIQ Policy Status Validations | DR Status is “OK” when all of the conditions below are met:

DR Status is “Warning” when the condition below is met:

IMPORTANT: SyncIQ Policy in Warning state MAY NOT be able to be run by Eyeglass assisted failover depending on it’s current status. If not run, you will incur data loss during failover.

You must investigate these errors and understand their impact to your failover solution. DR Status is “Disabled” when either of the conditions below are met:

|

Quota Domain Validation | Detects a quota with needs scanning flag set. This flag will fail SyncIQ steps (run policy, Make Writable, and Resync prep) for any policy with a quota that has not been scanned and is missing a quota domain. Failover should not be started with this validation warning. Quota scan job should be run manually or verify if a quota scan job is in progress. |

SyncIQ File Pattern Validation | SyncIQ policies with file patterns set cannot be failed back and any files that do not match the file pattern will be read-only after failover. This file pattern is not failed over by Resync prep to mirror policies and Eyeglass does not support copying file access patterns to mirror policies. This setting should not be used for DR purposes. This validation will show warning for any policy with a file pattern set. The file pattern should be removed from the policy to clear the warning. |

Corrupt Failover Snapshots | Validate that Target Cluster does not have an existing SIQ-<policyID>-restore-new or SIQ-<policyID>-restore-latest snapshot from previous failovers/synciq jobs for the Policy. |

Policy Local Target Validation :

| Validate that there is only 1 Local Target per SyncIQ policy. |

Policy Local Target Validation:

| Validate that the target folder of SyncIQ policy has writes disabled. |

| Policy Enabling | Validate that the SyncIQ Policy is enabled in OneFS. If Disabled overrall DR Status is Disabled. |
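Two of the checks in this table reduce to simple comparisons. The sketch below is a hypothetical illustration, not Eyeglass code: it assumes the target cluster's snapshot names and each policy's local targets have already been collected into plain Python collections, and the function names are invented for this example.

```python
from typing import Dict, Iterable, List


def corrupt_failover_snapshots(policy_id: str, target_snapshots: Iterable[str]) -> List[str]:
    """Return leftover SIQ restore snapshots for policy_id found on the target cluster."""
    # The two snapshot names the Corrupt Failover Snapshots check looks for.
    suspect = {f"SIQ-{policy_id}-restore-new", f"SIQ-{policy_id}-restore-latest"}
    return sorted(name for name in target_snapshots if name in suspect)


def duplicate_local_targets(local_targets: Dict[str, List[str]]) -> List[str]:
    """Return the names of policies that have more than one local target."""
    return sorted(policy for policy, targets in local_targets.items() if len(targets) > 1)
```

An empty result from either function corresponds to a passing validation; any returned name identifies what needs cleanup before failover.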

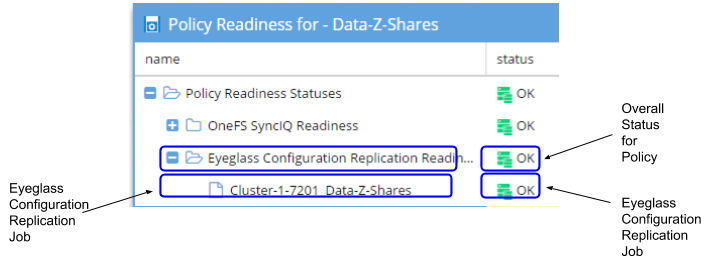

Policy/DFS Readiness - Eyeglass Configuration Replication Readiness

The Eyeglass Configuration Replication Readiness criteria is used to validate that the Eyeglass Configuration Replication job related to the SyncIQ Policy has been successfully run to sync the related configuration data. For the Eyeglass Configuration Replication Job the following check is done:

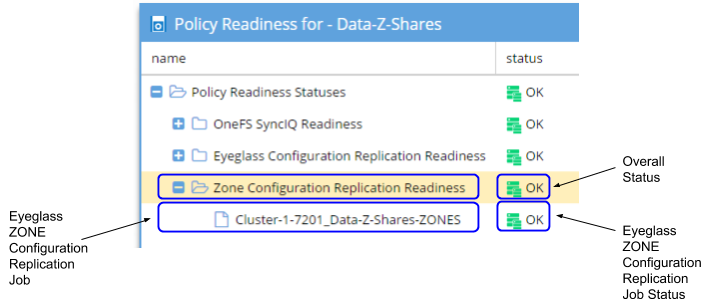

Policy / DFS Readiness - Zone Configuration Replication Readiness

The Zone Configuration Replication Readiness criteria is used to validate that the Zone Configuration Replication job related to the SyncIQ Policy has run successfully. This job creates the target cluster Access Zone, if it does not already exist, so that configuration sync can complete.

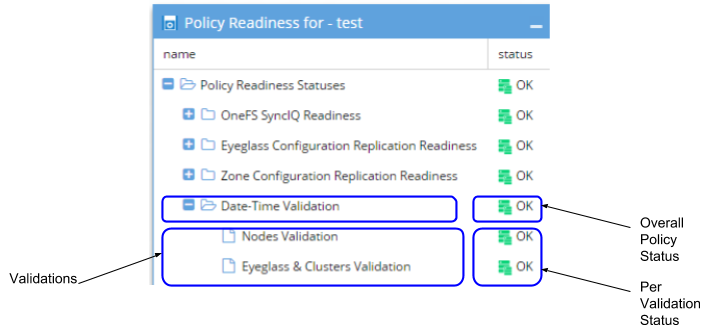

Policy/DFS Readiness - Date-Time Validation

The Date-Time Validation checks that the time differences between cluster nodes, and between the clusters and Eyeglass, are within a range that will not affect SyncIQ operations. SyncIQ commands such as resync prep can fail when the time difference between cluster nodes is greater than the API call latency between the Eyeglass VM and the cluster. In that scenario, a node can return a timestamp for a step status message that is earlier than the start of the resync prep request. API calls can also report different completion times on different clusters. Resync prep failover commands can fail if the time difference between Eyeglass and the source cluster is greater than the time it takes for a resync prep command to complete.

NOTE: The condition in which timing differences cause resync failover commands to fail is rare and hard to detect manually. Starting with release 1.8, Eyeglass can detect this condition. Best practice is to use NTP on the clusters and on the Eyeglass appliance. Synchronized time keeps failover logs consistent and lets the release 1.8 or later feature collect cluster SyncIQ reports for each step and append them to the failover log, which simplifies debugging multi-step, multi-cluster failovers. This process requires synchronized time.
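To illustrate the kind of comparison this validation performs, the sketch below flags clock skew between the nodes of one cluster and between each node and Eyeglass. The 30-second tolerance, the function names, and the input layout (node name mapped to its clock reading) are assumptions. This guide does not state the threshold Eyeglass actually uses.

```python
from datetime import datetime, timedelta
from typing import Dict, List

# Hypothetical tolerance: this guide does not state the threshold Eyeglass uses.
MAX_SKEW = timedelta(seconds=30)


def max_node_skew(node_times: Dict[str, datetime]) -> timedelta:
    """Largest difference between any two node clocks on one cluster."""
    times = list(node_times.values())
    return max(times) - min(times)


def date_time_check(node_times: Dict[str, datetime], eyeglass_time: datetime,
                    max_skew: timedelta = MAX_SKEW) -> List[str]:
    """Return the problems found; an empty list means the check passes."""
    problems: List[str] = []
    if max_node_skew(node_times) > max_skew:
        problems.append("node clocks differ by more than the allowed skew")
    for node, node_time in sorted(node_times.items()):
        drift = abs(node_time - eyeglass_time)
        if drift > max_skew:
            problems.append(f"{node} differs from Eyeglass by {drift}")
    return problems
```

With NTP configured everywhere, both kinds of drift stay near zero, which is why NTP is the recommended remedy rather than raising the tolerance.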

For each Cluster the following checks are done:

DR Dashboard Job Details

Each Policy or DFS Job can be expanded in the DR Dashboard Policy Readiness or DFS Readiness view to see Job Details:

DR Dashboard Configuration Replication Job Details

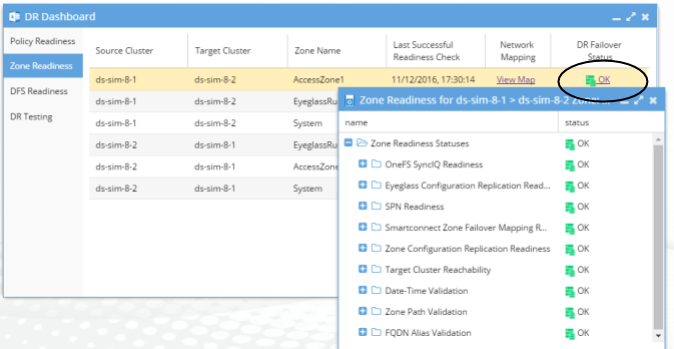

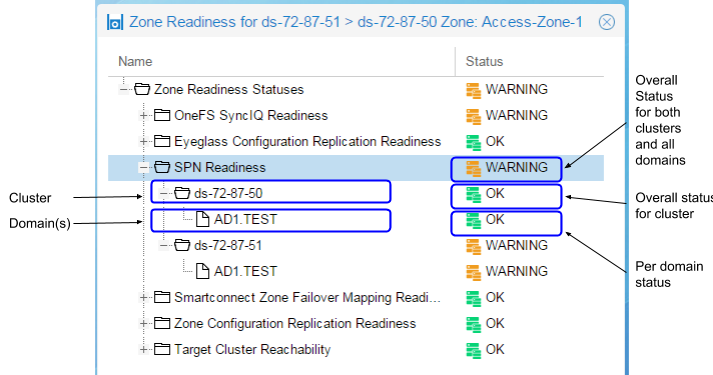

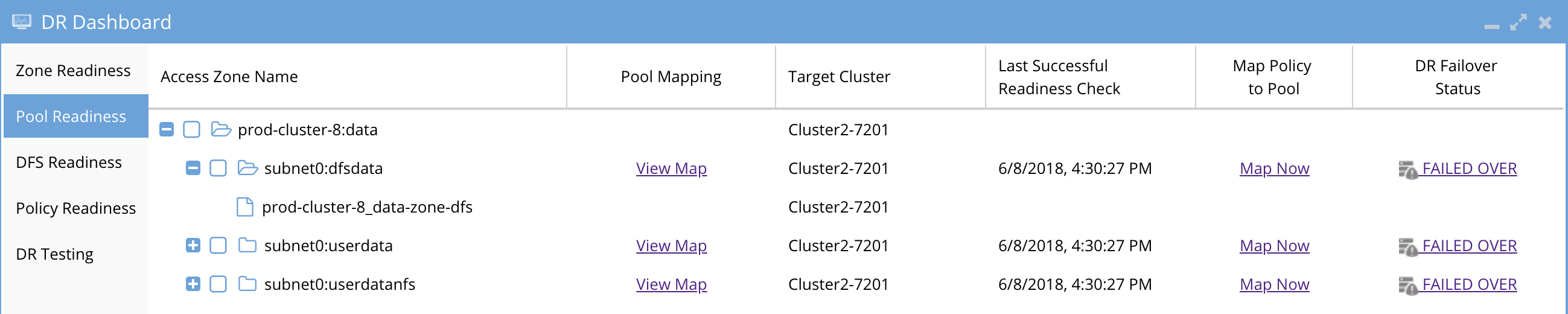

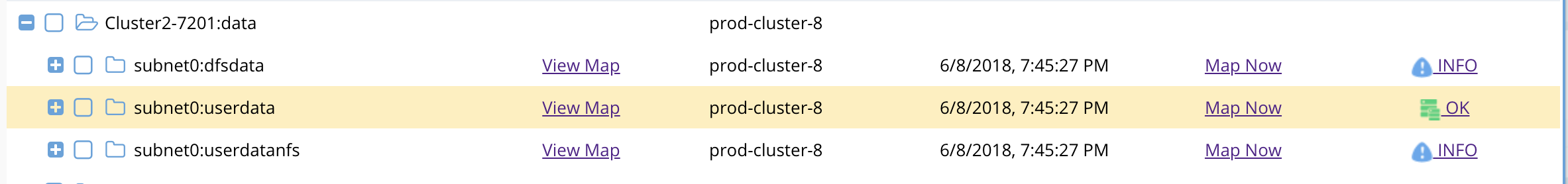

Zone and IP Pool Readiness

The Zone Readiness DR Failover Status provides you with a quick and easy way to assess your DR Status for an Access Zone Failover. The Zone Readiness check is performed for both directions of a replicating PowerScale cluster pair, so that you have status for failback as well as for failover.

The Zone Readiness Status will be one of the following:

| Status | Description |
|---|---|
| OK | All Required and Recommended conditions that are validated by Eyeglass software have been met. |
| WARNING | One or more Recommended conditions that are validated by Eyeglass software have not been met. Warning state does NOT block failover. Review the Access Zone Failover Guide Recommendations to determine impact for recommendations that have not been met. |
| ERROR | One or more of the Required conditions that are validated by Eyeglass software have not been met. Error state DOES block failover. Review the Access Zone Failover Guide Requirements for failover to determine resolution for these error conditions. |
| FAILED OVER | This Access Zone on this cluster has been failed over. You will be blocked from initiating failover for this Access Zone on this Cluster. |

IMPORTANT: Not all conditions are validated by Eyeglass software. Please refer to the Eyeglass Access Zone Failover Guide for the complete list of requirements and recommendations.

Notes:

- If the target cluster pool that has the Eyeglass hint mapping for failover does not have a SmartConnect Zone defined:

- On Failover the Access Zone will be in Warning state due to SPN inconsistencies.

- On Failback it will not have the FAILED OVER status displayed.

- If no Eyeglass Configuration Replication Job is enabled in an Access Zone, there is no entry in the Zone Readiness table for that Access Zone.

- Until Configuration Replication runs, Policy Readiness for a policy in the Access Zone will be in Error.

The DR Failover Status is based on Status for each of the following areas for Access Zone Failover:

IMPORTANT

By default the Failover Readiness job which populates this information is disabled. Instructions to enable this Job can be found in the Eyeglass PowerScale Edition Administration Guide.

Note: If there are no Eyeglass Configuration Replication Jobs enabled there is no Failover Readiness Job.

Preparation and planning instructions for Zone Readiness can be found in the Access Zone Failover Guide:

Requirements for Eyeglass Assisted Access Zone Failover

Unsupported Data Replication Topology

Recommended for Eyeglass Assisted Access Zone Failover

Preparing your Clusters for Eyeglass Assisted Access Zone Failover

Additional information for Zone Readiness criteria is provided in the following sections.

Zone and IP Pool Readiness - OneFS SyncIQ Readiness

The OneFS SyncIQ Readiness criteria is used to validate that the SyncIQ policies in the Access Zone have run successfully and that they are in a supported configuration. You will find one entry per SyncIQ Policy in the Access Zone. For each SyncIQ Policy the following checks are performed:

New Validations in Release 2.0 and later

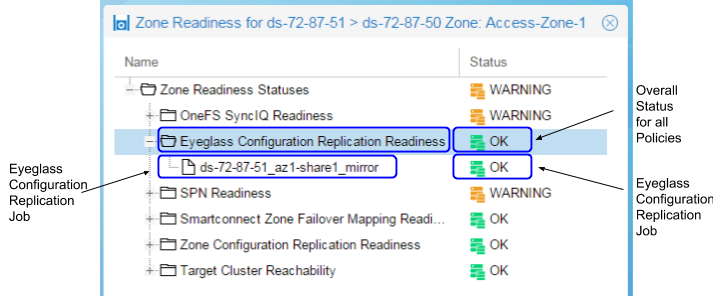

Zone and IP Pool Readiness - Eyeglass Configuration Replication Readiness

The Eyeglass Configuration Replication Readiness criteria is used to validate that the Eyeglass Configuration Replication jobs in the Access Zone have run successfully, syncing configuration data for all policies that are members of the Access Zone. For each Eyeglass Configuration Replication Job in the Access Zone, the following check is performed:

| <Eyeglass Configuration Replication Job Name> | Validate that the Eyeglass Configuration Replication Job status is not in the ERROR state. |

Note: When an Access Zone contains both enabled and disabled Eyeglass Configuration Replication Jobs, the Eyeglass Configuration Replication Readiness validation displays status only for the enabled jobs.

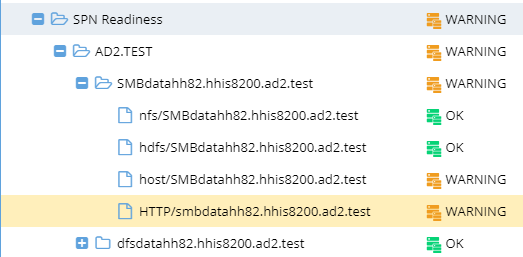

Zone and IP Pool Readiness - SPN Readiness

Prerequisites: some new validations require 2.5.6 or later for enhanced SPN management and failover.

The SPN Readiness criteria is used to:

- Detect missing SPNs and insert them into AD based on PowerScale's list of missing SPNs. The automatic insert feature requires the AD Delegation step to be completed.

- Remediate existing and newly created SmartConnect Zones by creating short and long SPNs for each cluster's Active Directory machine account.

- (2.5.6 or later releases) Check the case of the SPN in Active Directory against the case of the SmartConnect zone name. NOTE: SPNs are case sensitive and must match the case of the cluster SmartConnect name or alias. For example, HOST/Data.example.com and HOST/data.example.com are different. The case must match for correct Kerberos authentication, and failover requires it to match the PowerScale configuration. This validation detects incorrect case.

- (2.5.6 or later releases) Check that the SPN syntax in AD is correct. The service class must be uppercase, as in HOST/xxxx, for failover and authentication; a lowercase service class such as host/xxxx is invalid.

- (2.5.6 or later releases) Support additional service classes for custom SPN insertion into AD and for failover. Supported SPNs include NFS, HDFS, WEB, and any other custom SPN required for failover and automatic insertion. See the Access Zone Failover configuration guide for how to enable custom SPNs.

This check is done for each domain of which each cluster is a member.

Note: If the PowerScale cluster is not joined to Active Directory, SPN Readiness shows the following:

- For OneFS 7.2 the SPN Readiness check is displayed with the message “Cannot determine SPNs”.

- For OneFS 8 the SPN Readiness check is not displayed in the Zone Readiness window.

New 2.5.6 or later releases

Each SPN that should be associated with the AD cluster computer object, based on the SmartConnect names or aliases, is displayed and shown as a green "OK" if it matches the case and SPN syntax. A warning is shown for each SPN detected with incorrect case or a syntax issue.

When you select an SPN entry with a warning, the error is displayed in the bottom part of the validation UI. For example:

- Warning for the lowercase SPN host/xxxxx:

- Not valid SPN. Service class in the SPN definition should be HOST

- Warning for the wrong case in the SmartConnect name or alias:

- Not valid SPN. SPN entries are case sensitive and should match the case used on the SmartConnet name.

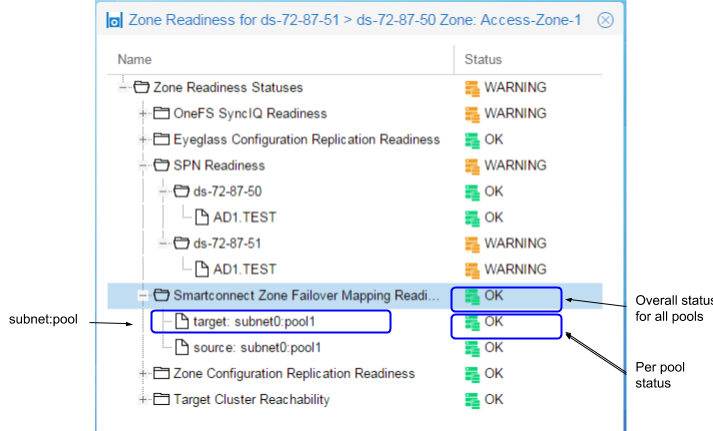

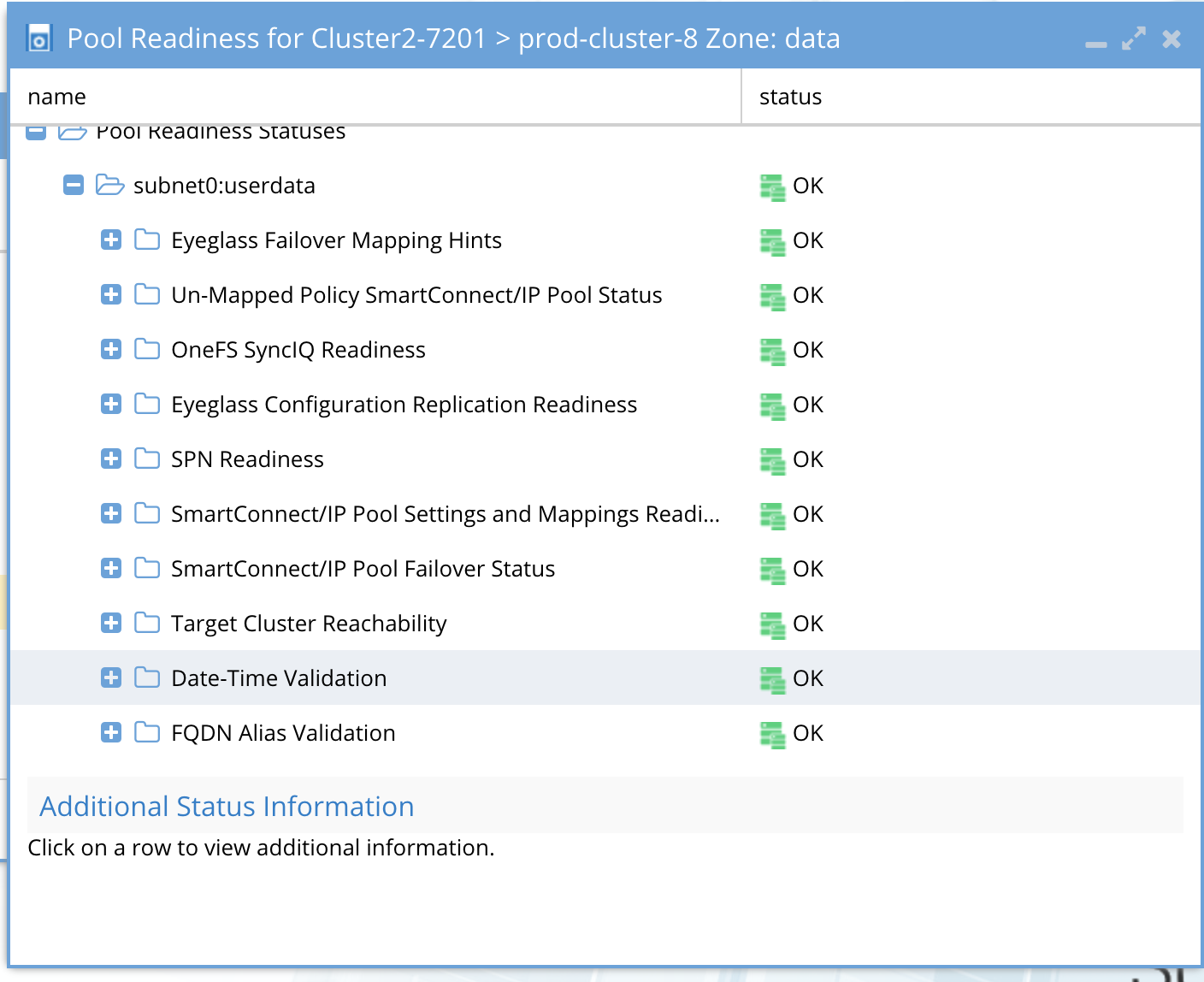
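The two SPN warnings shown above can be sketched as a small Python check. This is a hypothetical illustration, not the Eyeglass implementation: the function name and warning strings are invented, and only the HOST service class is checked, so custom classes such as NFS pass through untouched.

```python
from typing import List


def check_spn(spn: str, smartconnect_name: str) -> List[str]:
    """Return warnings for one SPN compared with the SmartConnect name or alias it serves."""
    warnings: List[str] = []
    service_class, sep, host = spn.partition("/")
    if not sep:
        return ["malformed SPN: expected <service class>/<host>"]
    # Syntax check: a HOST SPN must use the uppercase service class.
    if service_class.upper() == "HOST" and service_class != "HOST":
        warnings.append("service class should be HOST")
    # Case check: same name ignoring case, but different case, is flagged.
    if host != smartconnect_name and host.lower() == smartconnect_name.lower():
        warnings.append("case does not match the SmartConnect name")
    return warnings
```

For example, `check_spn("host/Data.example.com", "data.example.com")` returns both warnings, while `check_spn("HOST/data.example.com", "data.example.com")` returns an empty list.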

Zone and IP Pool Readiness - SmartConnect Zone Failover Mapping Readiness

The SmartConnect Zone Failover Mapping Readiness criteria is used to validate that the SmartConnect Zone alias hints have been created between source and target cluster subnet IP pools. This check is done for each subnet:pool in the Access Zone.

For details on configuring the SmartConnect Zone Failover Mapping Hints, please refer to the Eyeglass Access Zone Failover Guide.

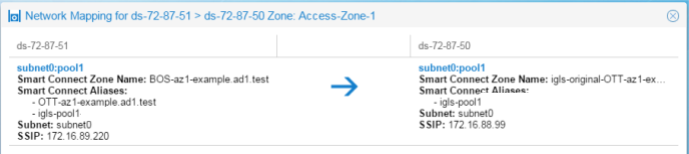

Use the Zone Readiness View Mapping feature to display pools in the Access Zone, and how they have been mapped using the SmartConnect Zone Alias hints.

Zone and IP Pool Readiness - View Mapping

Use the Zone Readiness View Mapping link to display the subnet:pool mappings with configured hints for the Access Zone.

Zone and IP Pool Readiness - Zone Configuration Replication Readiness

The Zone Configuration Replication Readiness criteria is used to validate that the Zone Configuration Replication jobs in the Access Zone have run successfully, creating any target cluster Access Zones that do not already exist so that configuration sync can complete.

Zone and IP Pool Readiness - Target Cluster Reachability

The Target Cluster Reachability criteria is used to validate that Eyeglass is able to connect to the Failover Target Cluster using the OneFS API.

Zone and IP Pool Readiness - Date-Time Validation

The Date-Time Validation checks that the time differences between cluster nodes, and between the clusters and Eyeglass, are within a range that will not affect SyncIQ operations. SyncIQ commands such as resync prep can fail when the time difference between cluster nodes is greater than the API call latency between the Eyeglass VM and the cluster. In that scenario, a node can return a timestamp for a step status message that is earlier than the start of the resync prep request. API calls can also report different completion times on different clusters. Resync prep failover commands can fail if the time difference between Eyeglass and the source cluster is greater than the time it takes for a resync prep command to complete.

NOTE: The condition in which timing differences cause resync failover commands to fail is rare and hard to detect manually. Starting with release 1.8, Eyeglass can detect this condition. Best practice is to use NTP on the clusters and on the Eyeglass appliance. Synchronized time keeps failover logs consistent and lets the release 1.8 or later feature collect cluster SyncIQ reports for each step and append them to the failover log, which simplifies debugging multi-step, multi-cluster failovers. This process requires synchronized time.

For each Cluster the following checks are done:

Zone and IP Pool Path Validation

Zone Path Validation checks Access Zone paths for conflicts. A status of OK indicates that the Access Zone paths have no conflicts. A status of ERROR indicates that this Access Zone's path collides with another Access Zone's path.

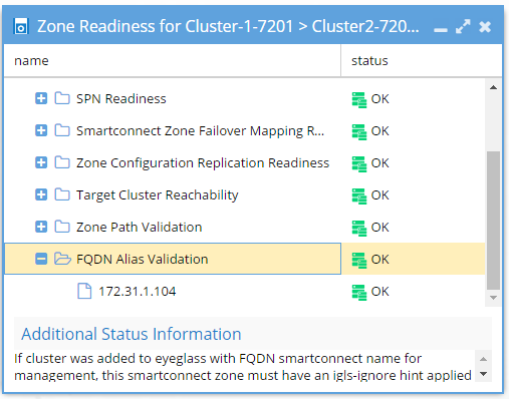
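A path collision check can be sketched as below. The collision rule is an assumption made for illustration: two base paths are treated as colliding when they are equal or one is nested inside the other, and the System zone (base path /ifs) is skipped because every other zone sits under it. Comparing path components with `PurePosixPath`, rather than string prefixes, avoids falsely matching /ifs/data/a against /ifs/data/ab.

```python
from itertools import combinations
from pathlib import PurePosixPath
from typing import Dict, List, Tuple


def paths_collide(a: str, b: str) -> bool:
    """Assumed rule: base paths collide if they are equal or one is nested inside the other."""
    pa, pb = PurePosixPath(a), PurePosixPath(b)
    return pa == pb or pa in pb.parents or pb in pa.parents


def zone_path_conflicts(zones: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return pairs of Access Zone names whose base paths collide.

    The System zone (base path /ifs) is skipped, since every other zone is nested under it.
    """
    candidates = sorted((name, path) for name, path in zones.items() if name != "System")
    return [
        (zone_a, zone_b)
        for (zone_a, path_a), (zone_b, path_b) in combinations(candidates, 2)
        if paths_collide(path_a, path_b)
    ]
```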

Access Zone and IP Pool FQDN Alias Validation

If a cluster was added to Eyeglass using an FQDN SmartConnect name for management, that SmartConnect Zone must have an igls-ignore hint applied so that a failover does not affect Eyeglass access to the cluster. ERROR indicates that no igls-ignore hint was found on the IP pool for the SmartConnect Zone used for cluster management. OK indicates that the igls-ignore hint was found.
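The decision above can be sketched in a few lines of Python. This is a hypothetical illustration: the function name is invented, matching any alias that starts with igls-ignore is an assumption based on the igls-ignore- alias naming shown later in this guide, and the "N/A" result for clusters added by IP address is this sketch's own convention, not a documented status.

```python
import ipaddress
from typing import Iterable


def fqdn_alias_status(mgmt_host: str, pool_aliases: Iterable[str]) -> str:
    """Check the pool serving the management SmartConnect name for an igls-ignore hint."""
    try:
        ipaddress.ip_address(mgmt_host)
        # Cluster was added by IP address, so there is no SmartConnect Zone to protect.
        return "N/A"
    except ValueError:
        pass
    has_hint = any(alias.startswith("igls-ignore") for alias in pool_aliases)
    return "OK" if has_hint else "ERROR"
```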

Access Zone and IP Pool - SPN (Service Principal Name) Active Directory Delegation Validation

Prerequisite: 2.5.6 or later

Documentation to Correct warnings

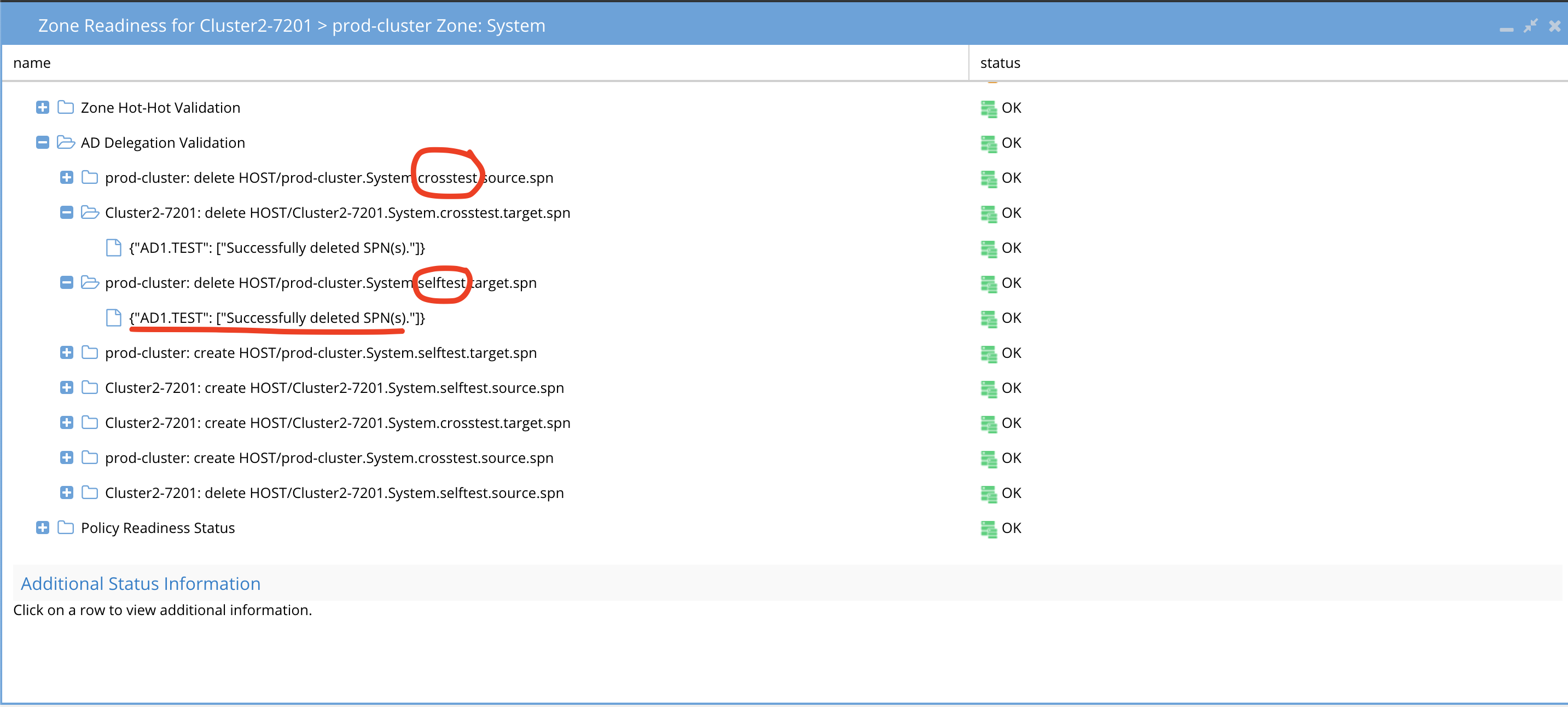

The SPN AD Delegation Validation tests SPN create and delete, along with cross delegation between computer objects in AD. This test ensures that the AD delegation step has been completed. SPNs are used to authenticate SMB clients, and they are the same as the SmartConnect Zone names and aliases, using the HOST/smartconnect-name syntax. They must be present on the AD computer object of the cluster that is currently writable in order to authenticate users. AD domain controllers only allow a computer object to authenticate users for a service if the SPN list on that AD computer object includes the SPN in the request. Client machines send the SPN with the authentication request. For example, for "\\data.example.com\shareA" the SPN is "HOST/data.example.com", and it must be registered in the SPN property list of the cluster computer object in AD.

This test creates and deletes test SPNs on each cluster's own AD object and, through cross delegation, on the opposite cluster's AD object, to verify that AD delegation is configured for failover. Cross SPN delegation allows one cluster to edit the opposite cluster's SPN property list using AD permissions. This is required in a real DR event, where the cluster that owns the SPNs is not operational and the opposite (target) cluster must be able to modify and take ownership of the SPN properties. This validation automatically tests all required permissions and indicates which test failed, so an administrator can easily identify which delegation permission is missing.

To disable this validation, see How to Disable AD or DNS Delegation Validations - Advanced Option.

SPN AD Delegation Example

The example below shows the create and delete tests against self (the computer object of the cluster the test was executed against) and the cross tests (against the opposite cluster's computer object). All tests must pass to be ready for failover. If a test fails, use the test name to determine which AD permission is missing.
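The test matrix described above (create and delete, against self and cross) can be sketched as follows. The `run_one` callable is a hypothetical stand-in for the real SPN create or delete operation, and the function and constant names are invented for this example. The point the sketch shows is that every combination must succeed before the cluster pair is ready, and the failed test names identify the missing permission.

```python
from typing import Callable, Dict, List, Tuple

# The four tests: "self" is the cluster's own computer object,
# "cross" is the opposite cluster's computer object.
DELEGATION_TESTS: List[Tuple[str, str]] = [
    ("self", "create"),
    ("self", "delete"),
    ("cross", "create"),
    ("cross", "delete"),
]


def run_delegation_tests(run_one: Callable[[str, str], bool]) -> Dict[str, object]:
    """Run every test and report which ones failed.

    run_one(target, action) is a hypothetical stand-in for the real SPN operation;
    it returns True when the operation succeeds.
    """
    failed = [f"{target} {action}" for target, action in DELEGATION_TESTS
              if not run_one(target, action)]
    return {"ready": not failed, "failed": failed}
```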

SPN test Failure Conditions and Error Messages

Use this table to identify which test failed and the error message to determine the root cause.

| Cause | Impact | Error message | Missing on both |
|---|---|---|---|
| Missing sudoer permission for isi_classic auth ads* on one cluster (on PowerScale). | Alarm (Major). Readiness: AD Delegation ERROR for the affected cluster. | {"AD2.TEST": ["Error processing SPNS: Sorry, user eyeglass is not allowed to execute '/usr/bin/isi_classic auth ads spn create -s HOST/is81to82A.Data.crosstest.target.spn --machinecreds --account IS81TO82B$ -D AD2.TEST' as root on is81to82A-1."]} | |
| Missing AD Delegation for the SELF test on one cluster (on AD). | Alarm (Major). Readiness: AD Delegation ERROR for the self tests of the affected cluster. | Create: {"AD2.TEST": ["Error processing SPNS: LdapError: Failed to modify attribute 'servicePrincipalName' [50:Insufficient access]"]} Delete: {"AD2.TEST": []} | |
| Missing AD Delegation for the CROSS test on one cluster (on AD). Note: For a cross test warning on clusterA, check the AD cross delegation for clusterB to confirm whether clusterB has been configured with cross delegation permission for clusterA. | Alarm (Major). Readiness: AD Delegation ERROR for the cross tests of the affected cluster. | Create: {"AD2.TEST": ["Error processing SPNS: LdapError: Failed to modify attribute 'servicePrincipalName' [50:Insufficient access]"]} Delete: {"AD2.TEST": []} | |

Access Zone and IP Pool - DNS Dual Delegation Validation

Prerequisite: 2.5.6 or later

Documentation to correct Warnings

Unsupported Configurations

- If Infoblox is configured with forwarders instead of dual name servers, this validation will not work. Forwarding is a vendor-specific configuration, not standards-based DNS, and nslookup cannot remotely validate a dual forwarding configuration. The recommendation is to use name servers with Infoblox rather than dual forwarders. If Infoblox dual forwarding is configured, this validation must be disabled. Open a case with support.

- NOTE: Eyeglass validates the groupnet DNS servers directly and does not use the OS DNS configured on Eyeglass, because the DNS servers that must be validated are the ones PowerScale itself uses. This requires the Eyeglass VM to have UDP port 53 access to the groupnet DNS servers. If this is not possible, the validation must be disabled. See the section below.

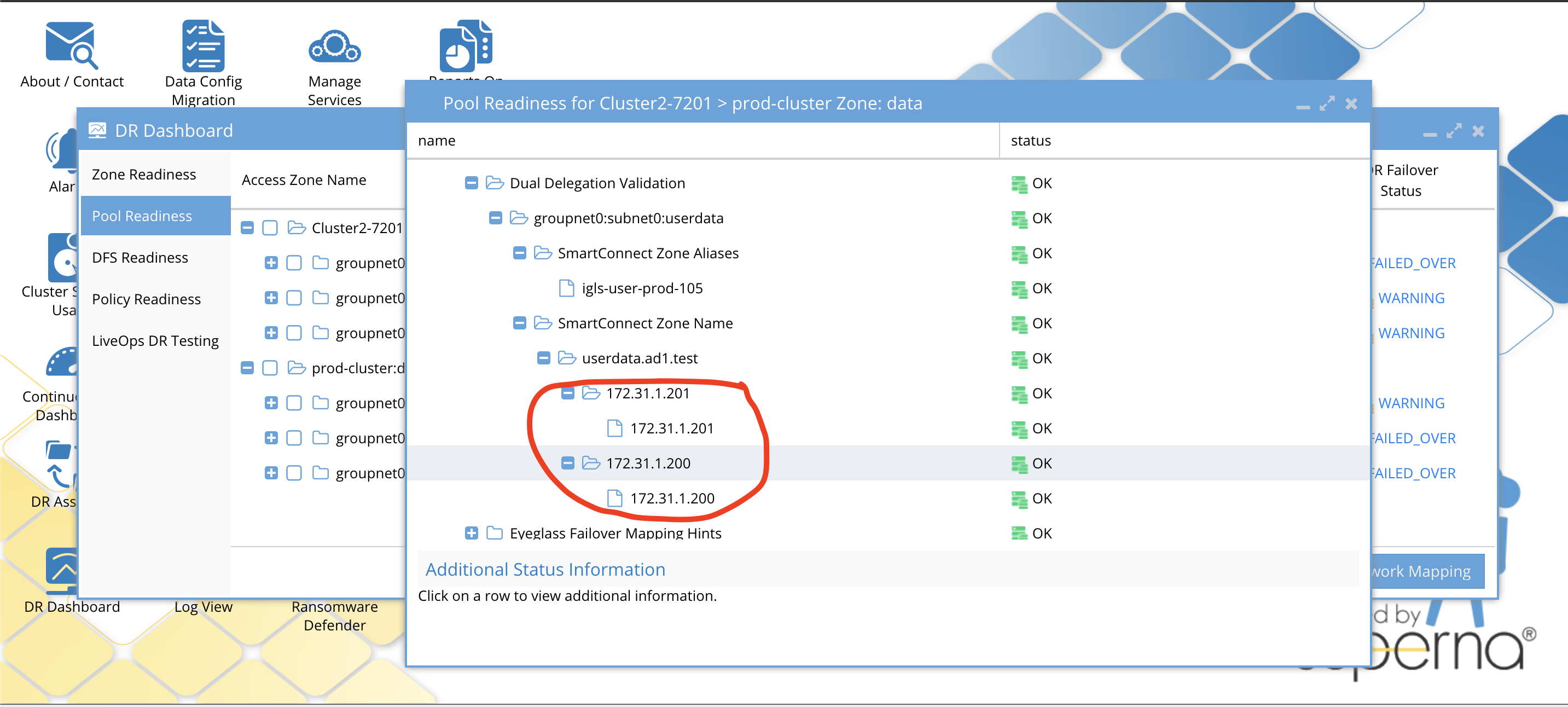

This validation automatically checks that each SmartConnect name and alias on each pool has two name servers configured, and that each IP address returned is a subnet service IP (SSIP) of the subnet serving the IP pool. If any of these tests fail, failover will not be able to automate the DNS step, leaving it as a manual step. This detects misconfigured or missing dual DNS delegation before planned failovers. If A records are used in the delegation, a reverse lookup is done on the DNS name returned as the name server to validate that the IP is a subnet service IP. This validation also makes sure that the cluster pairs configured for failover have the correct SSIP on both clusters and that the SSIPs can be found in inventory for the two clusters.

Example Dual DNS validation

Warnings and Statuses for all Possible Dual Delegation Validations

Pool with ignore alias igls-ignore-

Zone or pool with correct settings:

SmartConnect Zone name/alias unknown:

One NS record for SmartConnect Zone name/alias:

Detected three NS records for SmartConnect Zone name/alias:

One of the NS records is incorrect for SmartConnect Zone name/alias:

There are no NS records for SmartConnect Zone name/alias:

Two NS records point to same IP addresses for SmartConnect Zone name/alias:

SSIP of source cluster (in cluster) is incorrect:
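The statuses listed above can be mapped from the NS lookup result with a sketch like the one below. It is illustrative only: the function name and message wording are invented, `ns_ips` is assumed to hold the addresses the NS records resolve to (None when the name cannot be resolved), and the SSIP inventory check for the source cluster is not modelled.

```python
from typing import List, Optional, Set


def classify_dual_delegation(name: str, ns_ips: Optional[List[str]], cluster_ssips: Set[str]) -> str:
    """Map the NS lookup result for one SmartConnect name or alias to a readiness message.

    ns_ips: addresses the name's NS records resolve to, or None if the name is unknown.
    cluster_ssips: SSIPs of the two clusters in the failover pair.
    """
    if name.startswith("igls-ignore"):
        return "skipped: igls-ignore alias"
    if ns_ips is None:
        return "SmartConnect Zone name/alias unknown"
    if not ns_ips:
        return "no NS records"
    if len(ns_ips) == 1:
        return "one NS record"
    if len(ns_ips) > 2:
        return f"detected {len(ns_ips)} NS records"
    if ns_ips[0] == ns_ips[1]:
        return "two NS records point to the same IP address"
    # Both records are distinct; each must be one of the two cluster SSIPs.
    wrong = [ip for ip in ns_ips if ip not in cluster_ssips]
    if wrong:
        return f"{len(wrong)} NS record(s) incorrect"
    return "OK"
```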

All Failover type Domain Mark Validation

Prerequisite: 2.5.6 or later

Documentation to correct Warnings

- Consult Dell documentation for how to run the Domain Mark job.

This validation checks that the source and target clusters have a valid domain mark for accelerated failback, and raises a warning if either the source or the target cluster is missing a domain mark. This validation is important because it ensures that failovers do not spend a long time waiting for the domain mark to run during the resync prep step.

If the validation fails, run the Domain Mark job manually on the cluster from the OneFS job UI. This should always be completed before any planned failover. All policies are checked by this validation.

IP Pool Failover Readiness

This interface shows each Access Zone and the IP pools defined within it. Expanding a pool shows the SyncIQ policies that are mapped to it.

- The Access Zone column shows the cluster:zone name.

- Pool mapping shows the pool-to-pool igls-hints that map a pool on the source cluster to the target cluster, and allows viewing the mapping.

- Target cluster shows the cluster this pool will fail over to.

- Last Successful Readiness Check is the date and time that Failover Readiness last assessed this pool's readiness.

- Map policy to pool allows mapping one or more policies to a pool, and allows viewing the mapping for all pools in the Access Zone.

- DR Failover Status shows the highest severity state across all validations, or FAILED OVER if the pool has been failed over.
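The roll-up rule in the last bullet can be sketched in Python. The severity order is assumed from the Zone Readiness status table (OK, then WARNING, then ERROR), returning "OK" for a pool with no validation results yet is an assumption, and the function name is hypothetical.

```python
from typing import Iterable

# Severity order assumed from the Zone Readiness status table: OK < WARNING < ERROR.
SEVERITY = {"OK": 0, "WARNING": 1, "ERROR": 2}


def pool_dr_status(validation_statuses: Iterable[str], failed_over: bool) -> str:
    """Roll individual validation results up into one DR Failover Status."""
    if failed_over:
        return "FAILED OVER"
    # default="OK" is an assumption for a pool with no validation results yet.
    return max(validation_statuses, key=SEVERITY.__getitem__, default="OK")
```

For example, a pool whose validations report OK, WARNING, and OK shows WARNING, while any pool that has been failed over shows FAILED OVER regardless of its validation results.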

Pool Validations

The pool validations are the same as the Access Zone readiness validations. The key difference is that they apply only to the pool itself, not to the whole zone. This allows a single pool to be viewed and made ready for failover independently.

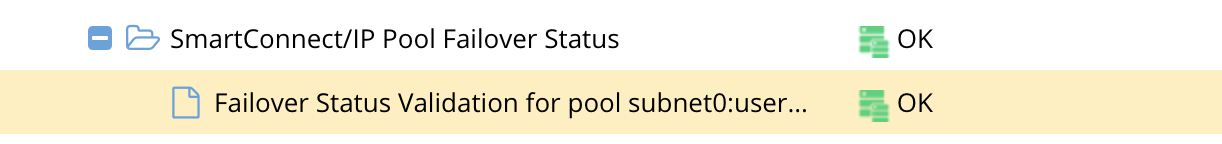

Pool Readiness Validation

The Pool Readiness validations that are unique to IP pool failover are the un-mapped policy SmartConnect/IP pool status and the overall pool readiness, which summarizes the pool's status.

Un-mapped policy validation Overview

- This verifies that all SyncIQ policies in the zone have been mapped to a pool.

- A pool may have more than one SyncIQ policy mapped.

- A SyncIQ policy may NOT be mapped to more than one pool.

- Any SyncIQ policy not mapped using the DR Dashboard IP pool mapping interface raises this error message and WILL block failover for all pools in the Access Zone until corrected.
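The mapping rules above can be expressed as a short Python check. This is a hypothetical sketch: it assumes the zone's policy names and the pool-to-policy mapping are available as plain collections, and the function name and message text are invented. Any returned error would block failover for every pool in the Access Zone.

```python
from collections import Counter
from typing import Dict, List, Set


def unmapped_policy_errors(zone_policies: Set[str], pool_map: Dict[str, List[str]]) -> List[str]:
    """Return mapping errors for one Access Zone; an empty list means the check passes."""
    # How many pools each policy is mapped to.
    counts = Counter(policy for policies in pool_map.values() for policy in policies)
    errors = [f"policy {p} is not mapped to a pool" for p in sorted(zone_policies - set(counts))]
    errors += [f"policy {p} is mapped to {n} pools" for p, n in sorted(counts.items()) if n > 1]
    return errors
```

A pool may list several policies, so only a policy appearing under more than one pool, or under none, produces an error.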

Overall pool validation status

How To Configure Advanced DNS Delegation Modes Required for Certain Environments

What's new

- 2.5.7 adds the ability to detect DNS dual delegations above the SmartConnect zone name level in the DNS namespace. For example, the SmartConnect name is data.example.com, but the delegation is done at the example.com level in DNS. The validation now attempts to locate the dual delegation above the SmartConnect level. If the validation scans all DNS names and finds no dual name server entries, the validation fails.

- Additional logging shows all steps in the validation, in /opt/superna/sca/logs/readiness.log

- If Eyeglass cannot reach the groupnet DNS servers because of a firewall, enable the local OS DNS option below.

- If your DNS is BlueCat, BIND, or Infoblox, we recommend disabling recursive lookups with the option below.

- Combine both values if both scenarios apply.
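The upward search described in the first bullet can be sketched as follows. This is an illustrative Python sketch, not the Eyeglass implementation: `ns_lookup` is a hypothetical stand-in for an NS query against the chosen DNS server, the top-level domain is deliberately not searched, and "dual" is taken to mean at least two name server entries.

```python
from typing import Callable, List, Optional, Tuple


def delegation_candidates(smartconnect_name: str) -> List[str]:
    """Zone names to search for NS records, from the SmartConnect name upward.

    The top-level domain (for example "com") is not searched.
    """
    labels = smartconnect_name.rstrip(".").split(".")
    return [".".join(labels[i:]) for i in range(len(labels) - 1)]


def find_dual_delegation(smartconnect_name: str,
                         ns_lookup: Callable[[str], List[str]]) -> Optional[Tuple[str, List[str]]]:
    """Return the closest zone with two or more NS records, or None (validation fails)."""
    for zone in delegation_candidates(smartconnect_name):
        servers = ns_lookup(zone)
        if len(servers) >= 2:
            return zone, servers
    return None
```

For data.example.com, the candidates are data.example.com and then example.com, matching the example in the first bullet.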

How to change the values

- ssh to eyeglass as admin

- sudo -s (enter admin password)

- nano /opt/superna/sca/data/system.xml

- Find the readinessvalidation tag and add the tags below with the settings required for your situation. Only add a tag if you want to change its setting from the default.

- Press Ctrl+X, then Y, to save and exit.

- systemctl restart sca (for the changes to take effect)

- done

How the tags work

- If dualdelegation_use_eyglass_dns is true, Eyeglass takes the DNS server from the local OS instead of from the Isilon groupnet, and issues the dual DNS delegation validation request(s) to that DNS server.

- If it is false, requests continue to be issued to the Isilon DNS server on the groupnet (default mode).

- If dualdelegation_recurse is false, recursion is turned off on the DNS query.

- If it is true, DNS queries use recursive lookups (default mode).
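The effect of the two tags can be summarized in a short Python sketch. Only the tag names and their documented defaults come from this guide; the function and type names are hypothetical, and reading the local OS resolvers from /etc/resolv.conf-style text is an assumption about how a Linux appliance would find its "local OS" DNS servers.

```python
from typing import List, NamedTuple


class DnsQueryPlan(NamedTuple):
    servers: List[str]
    recursive: bool


def parse_resolv_conf(text: str) -> List[str]:
    """Extract nameserver IPs from resolv.conf-style text (assumed source of the OS DNS)."""
    servers: List[str] = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            servers.append(parts[1])
    return servers


def plan_dual_delegation_query(groupnet_dns: List[str], os_dns: List[str],
                               use_eyeglass_dns: bool = False,
                               recurse: bool = True) -> DnsQueryPlan:
    """Mirror dualdelegation_use_eyglass_dns and dualdelegation_recurse.

    The defaults match the documented defaults: groupnet DNS, recursion on.
    """
    servers = os_dns if use_eyeglass_dns else groupnet_dns
    return DnsQueryPlan(servers=servers, recursive=recurse)
```

Setting both options corresponds to combining both values, as suggested for environments that need the local OS DNS and non-recursive lookups.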

How to Configure Advanced SPN delay mode for Active Directory Delegation

- This advanced mode adds a delay between the SPN create and delete tests used during the validation. If AD domain controllers do not execute the create and delete quickly enough, the validation test can fail. The delay is specified in seconds; the default is no delay.

- ssh to eyeglass as admin

- sudo -s (enter admin password)

- nano /opt/superna/sca/data/system.xml

- Insert the readinessvalidation tags with the value below, which changes the wait from 0 to 5 seconds.

- Press Ctrl+X, then Y, to save and exit the file.

- systemctl restart sca (for the changes to take effect)

<readinessvalidation> <spnCreateDeleteWait>5</spnCreateDeleteWait> </readinessvalidation>
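The effect of spnCreateDeleteWait can be sketched as a create, pause, delete sequence. This is a hypothetical Python illustration: the `create` and `delete` callables stand in for the real SPN operations, and the function name is invented. Only the setting's meaning (a delay in seconds, default 0) comes from this guide.

```python
import time
from typing import Callable


def create_delete_with_wait(create: Callable[[], None], delete: Callable[[], None],
                            wait_seconds: float = 0) -> None:
    """Create a test SPN, optionally pause so domain controllers can apply it, then delete it.

    wait_seconds plays the role of spnCreateDeleteWait (default 0, meaning no delay).
    """
    create()
    if wait_seconds > 0:
        time.sleep(wait_seconds)
    delete()
```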

How to Disable AD or DNS Delegation Validations - Advanced Option

Some environments require these validations to be disabled. If Infoblox is used with forwarding, the DNS dual delegation validation must be disabled. In other cases, SPNs are not required and AD delegation has not been completed. This option is global and disables these validations for all Access Zones and pools.

- Log in to Eyeglass as admin using ssh

- Disable DNS validation

- igls adv readinessvalidation set --dualdelegation=false

- Disable SPN test Validation

- igls adv readinessvalidation set --spnsdelegation=false

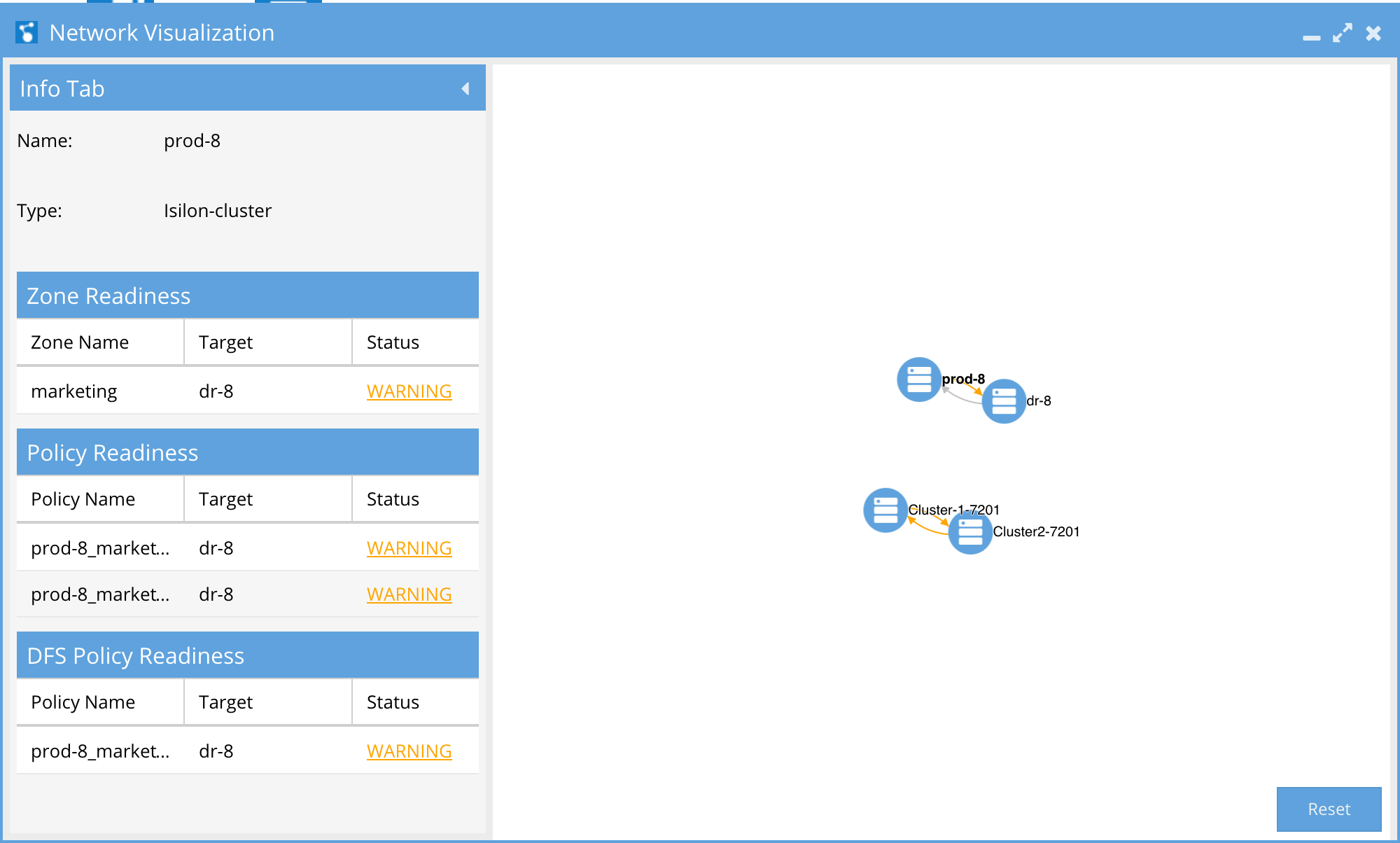

Network Visualization

Network Visualization is a new way to view PowerScale clusters and DR status, and to jump to the DR Dashboard. It lets you visualize DR and cluster replication. This first release is the first of several enhancements aimed at visualizing data, data flows, and storage across one or more PowerScale clusters.

This view indicates which clusters are replicating to each other and in which direction. For each cluster, failover readiness status for all failover types is summarized. Any failover readiness error shows as a red arrow from the source to the target cluster (failover direction). Warnings are displayed with an orange arrow. When there are no errors or warnings, the arrow is green for the active replication direction (failover direction) and grey for the failed over (inactive) direction. This simplifies monitoring many replicating clusters.
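The arrow color rules above can be written as a small Python function. This is a hypothetical sketch for illustration: the function name is invented, and it assumes the readiness statuses for one replication direction are available as strings.

```python
from typing import Iterable


def arrow_color(readiness_statuses: Iterable[str], active: bool) -> str:
    """Pick the arrow color for one replication direction between two clusters."""
    statuses = set(readiness_statuses)
    if "ERROR" in statuses:
        return "red"
    if "WARNING" in statuses:
        return "orange"
    # No errors or warnings: green for the active direction, grey for the failed-over one.
    return "green" if active else "grey"
```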

To view Network Visualization:

- Open the Network Visualization icon.

- Zoom in or out to change the depth of view.

- Click and hold to drag the objects in the view.

- Click a cluster to view the active sync data on that cluster by failover mode and status.

- Click the hyperlink to jump directly to the DR Dashboard from the Network Visualization window.