Application Autonomous Failover Guide

- Read Me First

- Overview

- A Modern Approach to Storage Access Failover

- Dell PowerScale Synchronized Storage Systems

- Superna Eyeglass DR

- Superna Eyeglass DR API Features

- Continuous Application Paired Failover Monitoring Logic

- Basic Autonomous Application Failover Solution

- Advanced Autonomous Application Failover Solution

- Overview

- Solution Components

- Solution Capabilities Description

- Example only: Web Server with Load Balancer Failover Case Scenario

- Configuration

Read Me First

The sample code provided here is Superna IP and can be used "as is" without warranty or support. The autonomous application failover service and support package is required for a supported solution.

Overview

A Modern Approach to Storage Access Failover

The uptime and availability of your customer-facing, partner-facing and internal tools and applications are critical to your success. You have redundant, resilient, synchronized storage systems and federated, application-based storage paths, so why not have automated application failover? Conventional continuous availability solutions require large expenditures on hardware and licensing to provide continuous, path-transparent access to data.

This solution leverages Superna Eyeglass for autonomous failover automation, the Superna Eyeglass DR Failover API, Dell PowerScale for data synchronization and DNS delegation, and a provided monitoring script to be placed alongside the application.

Technologies:

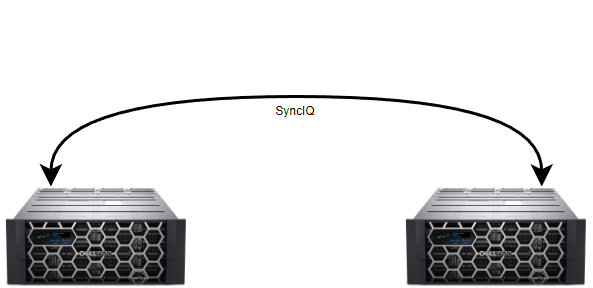

Dell PowerScale Synchronized Storage Systems

PowerScale, Dell's scale-out NAS solution, is your storage platform. Its SyncIQ data replication software provides flexible asynchronous data replication between two or more arrays for disaster recovery and business continuity.

Superna Eyeglass DR

Superna Eyeglass DR maintains a continuously updated database of PowerScale shares, exports, NFS aliases, deduplication settings, schedules, policies and more. It holds all the information normally stored in manual DR recovery playbooks and automates failovers faster through parallel procedure execution.

Superna Eyeglass DR API Features

Did you know that Superna Eyeglass DR includes a robust API? The Eyeglass DR API provides the means to execute path-based failovers through secure remote command execution, allowing automation to monitor and autonomously initiate failovers and reversions. The API also reports DR readiness status and can initiate and monitor failover tasks.

Continuous Application Paired Failover Monitoring Logic

Lightweight path monitoring logic monitors read, write or read/write access to the storage path. Configurable continuous failure detection logic logs storage path failures and alerts administrators to them, alerts on failover requests and status, and initiates autonomous failover via the Superna Eyeglass DR API.

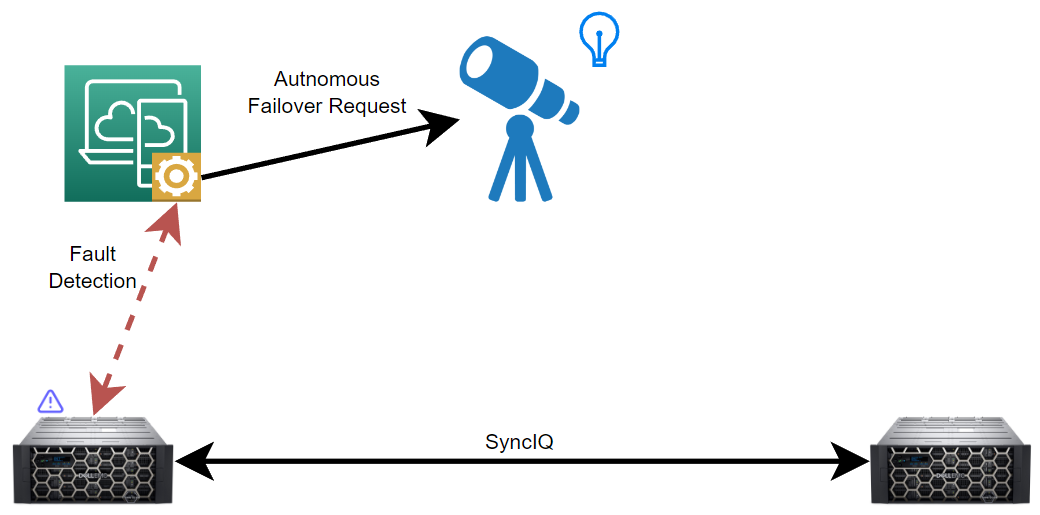
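The three access modes described above (read, write, read/write) can be sketched as a single probe function. This is a minimal illustration under stated assumptions, not the script shipped with the solution: the function name `check_path`, its parameters and the probe file naming are all hypothetical.

```python
import os
import uuid


def check_path(directory, checker_file=None, mode="r"):
    """Return True if `directory` passes the requested access test.

    Hypothetical helper for illustration only.
    mode "r"  : read one byte from `checker_file` inside `directory`
    mode "w"  : create and remove a temporary probe file in `directory`
    mode "rw" : require both tests to pass
    """
    if mode not in ("r", "w", "rw"):
        raise ValueError("mode must be 'r', 'w' or 'rw'")
    if "r" in mode and checker_file is None:
        raise ValueError("a checker file is required for read checks")

    if "r" in mode:
        try:
            with open(os.path.join(directory, checker_file), "rb") as fh:
                fh.read(1)
        except OSError:
            return False

    if "w" in mode:
        # A unique name avoids clashing with real application files.
        probe = os.path.join(directory, f".probe-{uuid.uuid4().hex}")
        try:
            with open(probe, "w") as fh:
                fh.write("probe")
            os.remove(probe)
        except OSError:
            return False

    return True
```

A read-only check suits paths the application only consumes, while the write probe also catches a share that has become read-only.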

Basic Autonomous Application Failover Solution

A small, lightweight monitoring script is installed alongside your application server. Read/write access to the application path is continuously monitored while your application processes data. The monitor sends a failover request to Superna Eyeglass DR if loss of access is detected.

Superna Eyeglass DR processes the request, computes the failover execution steps, then autonomously initiates a failover from the primary storage paths to the synchronized failover storage paths. Storage administrators and vested parties are notified of the event and can remediate the primary storage path and then fail back from failover to primary.

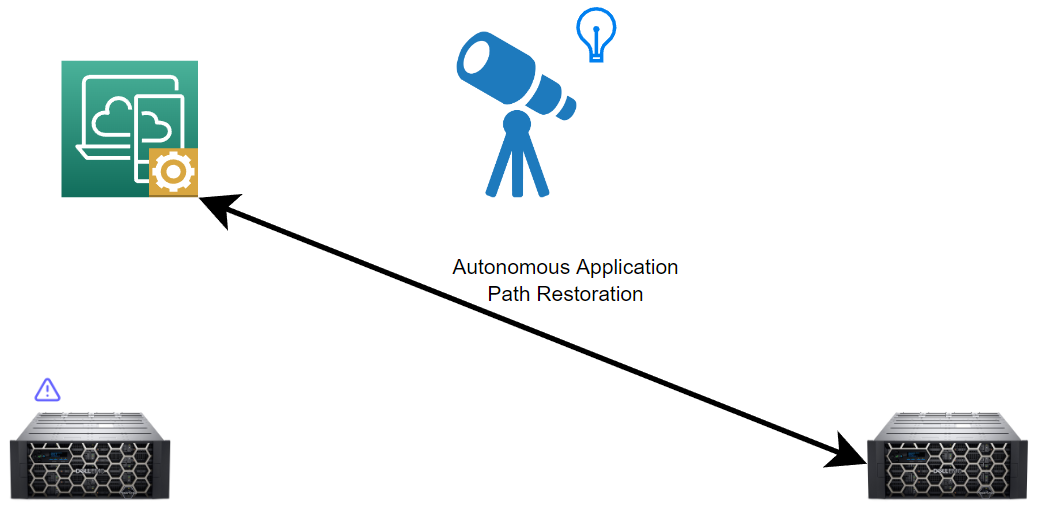

Advanced Autonomous Application Failover Solution

This option enhances the basic autonomous failover solution with dual failure plane detection: it also monitors the health of the application front end and fails over both storage and application to the opposite data center. This is the best design for ensuring that an application server's data is local, i.e. in the same data center. Never design a solution that allows one failure plane to fail over on its own, because that creates a stretched application I/O pattern. Such a state is complicated to debug, degrades application performance while in the failure state, and has many more failure use cases to detect and validate.

Overview

The example configuration and code examples below enable a dual failure plane detection solution. This means the autonomous code solution can detect a failure in the storage or, using a load balancer, in the application. If a failure occurs in either plane, both the application and the storage fail over together as a single unit of failover. This solution ensures the data is always local to the application server and that two critical health check monitoring points are covered.
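The "single unit of failover" rule can be expressed as a small decision function. This is a sketch under stated assumptions: the names `Health` and `plan_failover` are hypothetical, and how each plane's health is actually measured is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Health:
    """Latest health result for each failure plane (hypothetical type)."""
    storage_ok: bool
    application_ok: bool


def plan_failover(health):
    """Return the planes to fail over.

    A failure in either plane moves both planes together, so the
    application never ends up reading data from the remote data center.
    """
    if health.storage_ok and health.application_ok:
        return ()
    return ("storage", "application")


print(plan_failover(Health(storage_ok=True, application_ok=True)))   # ()
print(plan_failover(Health(storage_ok=False, application_ok=True)))  # ('storage', 'application')
print(plan_failover(Health(storage_ok=True, application_ok=False)))  # ('storage', 'application')
```

The function never returns only one plane, which is the property that rules out a stretched I/O pattern.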

Solution Components

- Eyeglass DR

- Eyeglass API

- Progress Software Kemp virtual or physical load balancer

- Email server for alarms

Solution Capabilities Description

- Storage Failover of NFS or SMB shares

- IP pool failover mode recommended

- Dual failure plane detection with application failover (application and storage)

- Failover and fail back logic

- Email alarms reporting decisions to fail over, failover status and failover errors

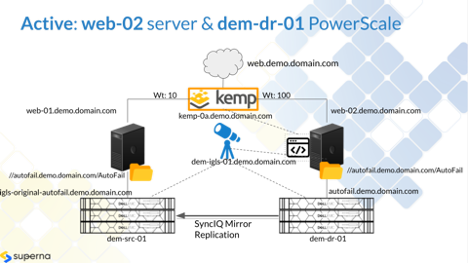

Example only: Web Server with Load Balancer Failover Case Scenario

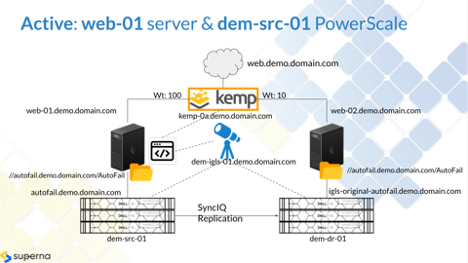

NOTE: This is only a simple example of how a load balanced application can be configured. Purchased solutions can integrate with other applications.

Configuration

The load balancer is configured with a Global Balancing managed FQDN that directs traffic to the Primary Web Server (Web-01) and the Secondary Web Server (Web-02), with priority given to the Primary Web Server (higher weight).

For example:

Global Balancing Managed FQDN: web.demo.domain.com

Fixed weight configuration:

IP Address of Web-01: 100

IP Address of Web-02: 10

Users access the web server through the Global Balancing managed FQDN (web.demo.domain.com). Under normal conditions, requests are directed to the Primary Web Server (Web-01) because its fixed weight is higher than that of Web-02.
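The effect of the fixed weights can be illustrated with a short selection function. This is only a model of the behavior described above, not the load balancer's implementation; `pick_target` and its arguments are hypothetical names.

```python
def pick_target(weights, enabled):
    """Return the enabled server with the highest fixed weight, or None.

    weights: mapping of server name to fixed weight
    enabled: set of server names currently enabled in the managed FQDN
    """
    candidates = {name: w for name, w in weights.items() if name in enabled}
    if not candidates:
        return None
    return max(candidates, key=candidates.get)


weights = {"Web-01": 100, "Web-02": 10}

# Normal condition: both servers enabled, Web-01 wins on weight.
print(pick_target(weights, {"Web-01", "Web-02"}))  # Web-01

# After Web-01 is disabled, traffic is directed to Web-02.
print(pick_target(weights, {"Web-02"}))  # Web-02
```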

A monitoring script runs continuously on the Primary Web Server and verifies that the checker file is readable. The file is accessed through the SMB share mounted from the Primary Production PowerScale cluster (dem-src-01).

This checker file (demotest.html) is located under the mount path (e.g. /mnt/autofail/www/root/, mounted from dem-src-01).

The script keeps monitoring until it detects a failure (e.g. two consecutive failed checks). It then sends an alert e-mail and performs an autonomous failover.
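The consecutive-failure rule can be sketched as a polling loop. This is an illustration under stated assumptions rather than the provided monitoring script: `monitor`, `check`, `on_failure`, `threshold`, `interval` and `max_checks` are hypothetical names. In a real deployment `check` would test the checker file and `on_failure` would send the alert e-mail and request the failover from Eyeglass DR; neither is shown here.

```python
import time


def monitor(check, on_failure, threshold=2, interval=5.0,
            max_checks=None, sleep=time.sleep):
    """Poll check() until `threshold` failures occur in a row.

    check      : callable returning True when the checker file is readable
    on_failure : callable invoked once with the consecutive failure count
    max_checks : optional cap on the number of polls (None means no cap)
    Returns True if on_failure was triggered, False if the cap was reached.
    """
    consecutive = 0
    performed = 0
    while max_checks is None or performed < max_checks:
        performed += 1
        if check():
            consecutive = 0  # one good read resets the failure streak
        else:
            consecutive += 1
            if consecutive >= threshold:
                on_failure(consecutive)
                return True
        sleep(interval)
    return False
```

Requiring consecutive failures, rather than any single failure, keeps one transient read error from triggering a full failover.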

The autonomous failover includes:

- Storage failover from dem-src-01 to dem-dr-01, which moves the SmartConnect zone name from the dem-src-01 cluster to the dem-dr-01 cluster (Eyeglass DR executes an Access Zone failover)

- Pre-failover unmount of the SMB share on both Web-01 and Web-02, and post-failover re-mount of the SMB share on both servers from the active PowerScale cluster through the SmartConnect zone name autofail.demo.domain.com (the Eyeglass DR failover executes the pre- and post-failover scripts)

- Disabling Web-01 in the Global Balancing managed FQDN

The end result:

- Web-02 is activated, and user access to web.demo.domain.com is directed to Web-02

- Storage is active on the dem-dr-01 PowerScale cluster
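The failover actions above can be pictured as an ordered list of steps that stops at the first error and reports each outcome. This is a sketch only: `run_steps` and the step names are hypothetical, the actions are no-op placeholders, and the position of the load balancer step relative to the storage steps is an assumption, since the text does not state it.

```python
def run_steps(steps, notify):
    """Run (name, action) pairs in order; stop and report on the first error.

    notify receives one status line per step, e.g. for an alert e-mail.
    Returns True only if every step completed.
    """
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            notify(f"FAILED: {name}: {exc}")
            return False
        notify(f"done: {name}")
    return True


def placeholder():
    """Stands in for real work; the actual commands are not shown here."""


# Assumed ordering: unmount, storage failover, re-mount, then redirect users.
FAILOVER_STEPS = [
    ("pre-failover unmount of SMB share on Web-01 and Web-02", placeholder),
    ("Access Zone failover dem-src-01 to dem-dr-01", placeholder),
    ("post-failover re-mount via autofail.demo.domain.com", placeholder),
    ("disable Web-01 in the managed FQDN", placeholder),
]

if __name__ == "__main__":
    run_steps(FAILOVER_STEPS, print)
```

Stopping at the first failed step avoids redirecting users to Web-02 before its storage mount is known to be in place.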

Once Web-02 becomes the primary server for web access, the monitoring script can be started on Web-02. It continuously checks the accessibility of Web-02 by verifying that the checker file is readable through the SMB share mounted from the Secondary Production PowerScale cluster (dem-dr-01).

This checker file (demotest.html) is located under the mount path (e.g. /mnt/autofail/www/root/, mounted from dem-dr-01).

The script keeps monitoring until it detects a failure (e.g. two consecutive failed checks). It then sends an alert e-mail and performs an autonomous failover in the reverse direction (failback).

The autonomous failback includes:

- Storage failback from dem-dr-01 to dem-src-01, which moves the SmartConnect zone name from the dem-dr-01 cluster to the dem-src-01 cluster (Eyeglass DR executes an Access Zone failover from dem-dr-01 to dem-src-01)

- Pre-failover unmount of the SMB share on both Web-01 and Web-02, and post-failover re-mount of the SMB share on both servers from the active PowerScale cluster through the SmartConnect zone name autofail.demo.domain.com (the Eyeglass DR failover executes the pre- and post-failover scripts)

- Enabling Web-01 in the Global Balancing managed FQDN

The end result:

- Web-01 is activated, and user access to web.demo.domain.com is directed to Web-01

- Storage is active on the dem-src-01 PowerScale cluster
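The failover and failback end results can be summarized as two state transitions that mirror each other. This is a toy model for reasoning about the scenario, with hypothetical names (`SiteState`, `failover`, `failback`); it does not call Eyeglass DR or the load balancer.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SiteState:
    """Where storage is active and whether Web-01 is enabled in the FQDN."""
    active_cluster: str
    web01_enabled: bool

    @property
    def serving_web(self):
        # Web-01 has the higher fixed weight, so it serves whenever enabled.
        return "Web-01" if self.web01_enabled else "Web-02"


def failover(state):
    """Move storage to dem-dr-01 and disable Web-01."""
    return replace(state, active_cluster="dem-dr-01", web01_enabled=False)


def failback(state):
    """Move storage back to dem-src-01 and enable Web-01."""
    return replace(state, active_cluster="dem-src-01", web01_enabled=True)


normal = SiteState(active_cluster="dem-src-01", web01_enabled=True)
failed_over = failover(normal)
print(failed_over.active_cluster, failed_over.serving_web)  # dem-dr-01 Web-02
print(failback(failed_over) == normal)                      # True
```

In both states the serving web server and the active cluster sit on the same side, which is the data locality goal of the dual failure plane design.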