- Solution Overview

- Solution Components

- Introduction

- Configuration

- High Level Steps Overview

- Configure ECA Log Archiving

- PowerScale NFS Export Configuration

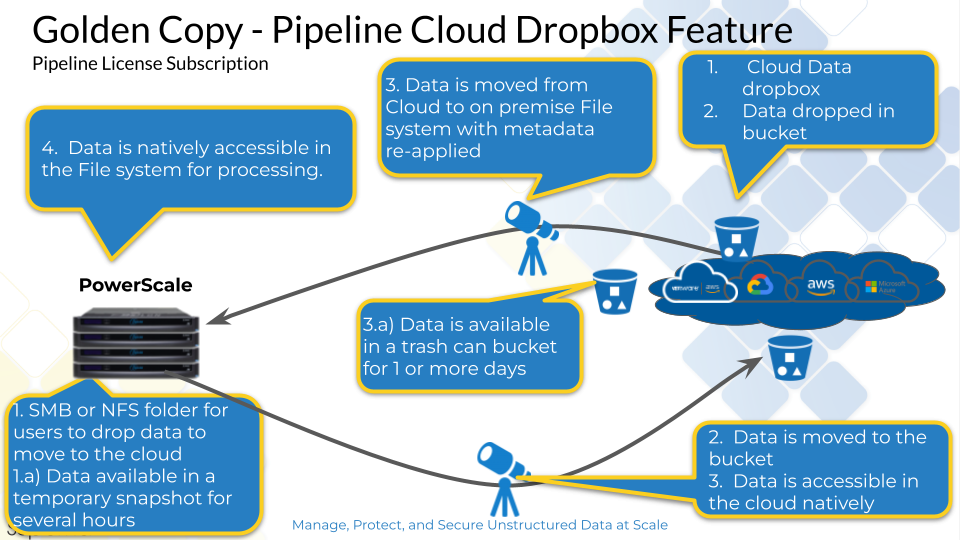

- Data Orchestration (Golden Copy) Dropbox Archive Configuration

Solution Overview

Many industry verticals require data access audit logs to be retained for compliance and as a security best practice. HIPAA, MPAA, PCI, FedRAMP and many other standards all require auditing. To reduce the cost of long-term audit data storage and to meet immutability requirements, audit data needs to be archived to long-term storage such as S3 object storage. Cloud providers (Amazon, Microsoft and Google) all offer low-cost object storage with immutability options for long-term audit data.

The Easy Auditor (Security Bundle) capability offers a continuous archive-to-S3 solution that extracts real-time ingested audit data, formats it with user-friendly field definitions in CSV format and stores the data on a centralized NFS export external to the ECA virtual machines. Each ECA VM can archive audit data, which gives a scale-out, high-performance solution. The audit data is rolled over and compressed in gz format to reduce space requirements, and files are named with the date and time they were created. This audit data can then be moved to S3 with Superna's Data Orchestration platform (previously named Golden Copy).

Solution Components

- Security Subscription or Easy Auditor product license

- Data Orchestration Subscription or Golden Copy product license

- Dropbox feature license (requires a Pipeline Golden Copy license key)

Introduction

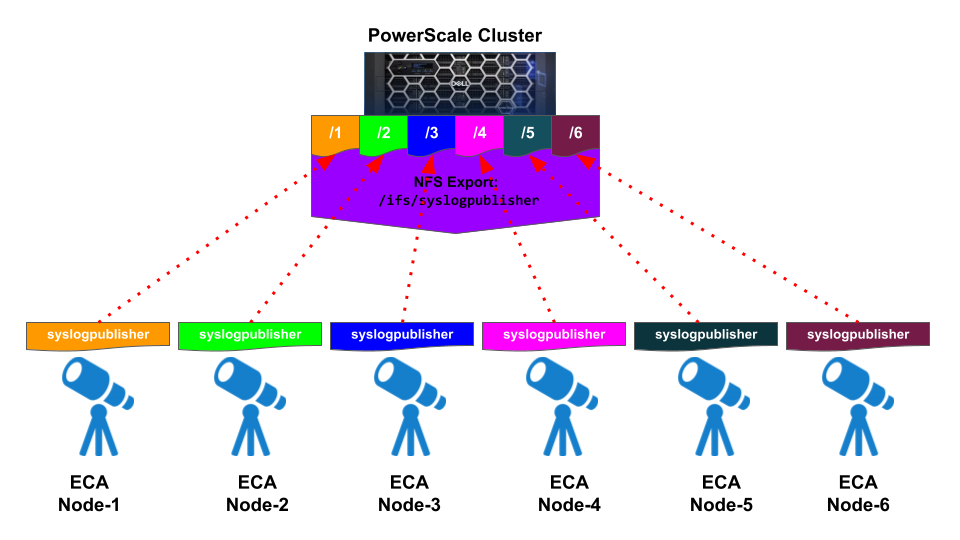

This document describes how to configure audit events to be forwarded from Eyeglass Cluster Agent (ECA) nodes to an external NFS export. In this configuration, an additional Docker container (syslogpublisher) runs on the ECA nodes; it consumes events and formats them for syslog forwarding to an NFS mount.

The NFS export is configured on the PowerScale cluster. The syslogpublisher Docker container is configured with a Rolling File Appender that writes the ECA audit events to log files stored on the NFS mount.

Configuration

High Level Steps Overview

- Configure the syslog forwarding to NFS mount option on the ECA cluster nodes and start the archive container

- Configure the Golden Copy Dropbox feature to move data to an S3 bucket with an archive tier configured

- External to Superna products, configure immutable object lock feature on the S3 storage
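The third step happens outside the Superna products, so this guide gives no commands for it. As a rough sketch for AWS S3 only, the helper below builds the default-retention JSON accepted by the AWS CLI `put-object-lock-configuration` call. The function name, the COMPLIANCE mode and the 365-day retention are illustrative assumptions rather than values from this guide. The `auditarchive` bucket name is borrowed from the Dropbox example later in this document. Object Lock also depends on versioning being enabled on the bucket.

```shell
#!/bin/sh
# Hypothetical helper: print an S3 Object Lock configuration document.
# $1 = retention mode (GOVERNANCE or COMPLIANCE), $2 = retention in days.
object_lock_json() (
    printf '{"ObjectLockEnabled":"Enabled","Rule":{"DefaultRetention":{"Mode":"%s","Days":%s}}}\n' "$1" "$2"
)

# Possible usage (assumed values; needs credentials for the target account):
# aws s3api put-object-lock-configuration \
#     --bucket auditarchive \
#     --object-lock-configuration "$(object_lock_json COMPLIANCE 365)"
```

Other S3-compatible providers expose their own immutability settings, so treat this as one example rather than a required procedure.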

Configure ECA Log Archiving

The following steps configure all ECA nodes to forward audit events to an external NFS export.

PowerScale NFS Export Configuration

Create an NFS export on the PowerScale cluster in the System Access Zone, with the IP address of each ECA node added as both a client and a root client of that export.

- ssh as the root user to the PowerScale cluster that will receive the audit event syslog data from the ECA

- Create the path for this syslogpublisher NFS export in the System Access zone. Example: /ifs/syslogpublisher

mkdir -p /ifs/syslogpublisher

- Create NFS export (Example for ECA with 6 nodes; replace <ECA_IP_#> with the IP address of the ECA node#)

isi nfs exports create /ifs/syslogpublisher --zone system --root-clients="<ECA_IP_1>,<ECA_IP_2>,<ECA_IP_3>,<ECA_IP_4>,<ECA_IP_5>,<ECA_IP_6>" --clients="<ECA_IP_1>,<ECA_IP_2>,<ECA_IP_3>,<ECA_IP_4>,<ECA_IP_5>,<ECA_IP_6>" --description "ECA Syslog Publisher Logs"

- Done
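Typing the same IP list twice is error-prone, so the export command above can be assembled from a single list. This is a minimal sketch: the function names are hypothetical, the 192.0.2.x addresses are placeholders, and the command is only printed for review, not executed.

```shell
#!/bin/sh
# Join all arguments with commas, e.g. "a b c" -> "a,b,c".
join_by_comma() (
    IFS=,
    printf '%s\n' "$*"
)

# Print (do not run) the export command from this section for the given ECA IPs.
print_export_cmd() (
    clients=$(join_by_comma "$@")
    printf 'isi nfs exports create /ifs/syslogpublisher --zone system --root-clients="%s" --clients="%s" --description "ECA Syslog Publisher Logs"\n' "$clients" "$clients"
)

# Example with placeholder addresses (hypothetical):
# print_export_cmd 192.0.2.11 192.0.2.12 192.0.2.13
```

Review the printed command, then paste it into the root session on the PowerScale cluster.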

ECA : NFS Mount Configuration

- ssh to ECA node 1 as ecaadmin user

- Create new local mount directory and sync to all ECA nodes

ecactl cluster exec "mkdir -p /opt/superna/eca/logs/syslogpublisher"

- Add a new entry to the auto.nfs file. NOTE: <FQDN> should be a SmartConnect name for an IP pool in the System Access Zone. Either NFSv3 or NFSv4 can be used.

NFS v3

echo -e "\n/opt/superna/eca/logs/syslogpublisher --fstype=nfs,nfsvers=3,soft <FQDN>:/ifs/syslogpublisher" >> /opt/superna/eca/data/audit-nfs/auto.nfs

NFS v4.x

echo -e "\n/opt/superna/eca/logs/syslogpublisher --fstype=nfs,nfsvers=4,soft <FQDN>:/ifs/syslogpublisher" >> /opt/superna/eca/data/audit-nfs/auto.nfs

- Verify the auto.nfs file contents

cat /opt/superna/eca/data/audit-nfs/auto.nfs

- Push the configuration to all ECA nodes

ecactl cluster push-config

- Re-start Automount and verify the mount

ecactl cluster exec "sudo systemctl restart autofs"

Note: you will be asked to enter the ecaadmin password for each ECA node.

- With this autofs configuration, the cluster up command reads the mount file and mounts the export on each ECA node during cluster up. To mount the NFS export now, run:

ecactl cluster exec "sudo mount -a -t autofs"

- Verify that it is mounted successfully
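The guide does not give a command for this check. One possible approach, sketched below, is to look for the mount point in the node's mount table. The function name is hypothetical, and the sketch assumes a Linux-style `/proc/mounts` layout (device, mount point, filesystem type). Because autofs mounts on first access, the path may need to be touched before the NFS entry appears.

```shell
#!/bin/sh
# Return 0 if the given mount point appears with an NFS filesystem type
# in a mounts table laid out like /proc/mounts (device, mount point, type, ...).
# $1 = mount point, $2 = mounts table (defaults to /proc/mounts).
is_nfs_mounted() (
    mounts_file=${2:-/proc/mounts}
    awk -v mp="$1" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$mounts_file"
)

# Possible usage on one ECA node:
# ls /opt/superna/eca/logs/syslogpublisher >/dev/null   # access the path so autofs mounts it
# is_nfs_mounted /opt/superna/eca/logs/syslogpublisher && echo "mounted"
```

An entry of type autofs alone does not count as mounted here; only an nfs or nfs4 entry on the same path does.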

ECA : syslogpublisher Configuration

- ssh to ECA node 1 as ecaadmin user

- The original log4j2.xml file contains the settings for forwarding audit events to an external syslog server. Move the original log4j2.xml file to log4j2.xml.original

ecactl cluster exec "mv /opt/superna/eca/conf/syslogpublisher/log4j2.xml /opt/superna/eca/conf/syslogpublisher/log4j2.xml.original"

- Create a new log4j2.xml file that holds the settings for forwarding audit events through a Rolling File Appender

touch /opt/superna/eca/conf/syslogpublisher/log4j2.xml

- Edit the new log4j2.xml file

vim /opt/superna/eca/conf/syslogpublisher/log4j2.xml

- Add the following lines to that log4j2.xml file.

Notes:

- Specify the roll-over file size according to your requirements. For example, to set it to 100 MB: <SizeBasedTriggeringPolicy size="100 MB"/>

- fileName="logs/${nodeID}/app.log" ⇒ the log is written to a sub-folder named after the node ID, with the file name app.log, e.g. logs/6/app.log for ECA node 6

- filePattern="logs/${nodeID}/$${date:yyyy-MM}/app-%d{MM-dd-yyyy}-%i.log.gz" ⇒ pattern for the rolled-over files, which are gzip-compressed (.gz) and named after the date. Example:

ls -l /opt/superna/eca/logs/syslogpublisher2/6/2023-10/

total 17

-rw-r--r-- 1 root root 4103 Oct 2 02:30 app-10-02-2023-1.log.gz

-rw-r--r-- 1 root root 3777 Oct 2 03:34 app-10-02-2023-2.log.gz
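To make the mapping from filePattern to the listing above concrete, the sketch below rebuilds a rolled-over file name from its parts. It only imitates the pattern for illustration and is not part of the product. The function name and argument order are hypothetical.

```shell
#!/bin/sh
# Illustrative only: mimic how the filePattern resolves for a given node and date.
# $1 = node ID, $2 = year (yyyy), $3 = month (MM), $4 = day (dd), $5 = roll-over index (%i).
rolled_file_path() (
    printf 'logs/%s/%s-%s/app-%s-%s-%s-%s.log.gz\n' "$1" "$2" "$3" "$3" "$4" "$2" "$5"
)

# Example: rolled_file_path 6 2023 10 02 1
# prints logs/6/2023-10/app-10-02-2023-1.log.gz
```

The index at the end increases each time a roll-over happens within the same day, which is why the listing shows files ending in -1 and -2.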

======

<?xml version="1.0" encoding="UTF-8"?>
<Configuration>
  <Properties>
    <Property name="nodeID">${env:ECA_THIS_NODE_ID}</Property>
  </Properties>
  <Appenders>
    <Console name="STDOUT" target="SYSTEM_OUT">
      <PatternLayout pattern="%highlight{%d %C{1}:%L %-5level: %msg%n%throwable}"/>
    </Console>
    <RollingFile name="RollingFile" fileName="logs/${nodeID}/app.log"
                 filePattern="logs/${nodeID}/$${date:yyyy-MM}/app-%d{MM-dd-yyyy}-%i.log.gz">
      <PatternLayout>
        <Pattern>%m%n</Pattern>
      </PatternLayout>
      <Policies>
        <TimeBasedTriggeringPolicy />
        <SizeBasedTriggeringPolicy size="100 MB"/>
      </Policies>
    </RollingFile>
  </Appenders>
  <Loggers>
    <Root level="ALL">
      <AppenderRef ref="RollingFile"/>
    </Root>
  </Loggers>
</Configuration>

==============

- Save the file

- The syslogpublisher Docker container does not start by default. To start it on all nodes, add the following lines to the docker-compose.overrides.yml file.

vim /opt/superna/eca/docker-compose.overrides.yml

===================

version: '2.4'
services:
  syslogpublisher:
    labels:
      eca.cluster.launch.all: on

==================

- save the file

- Edit docker-compose.yml file

vim /opt/superna/eca/docker-compose.yml

- Search for the syslogpublisher section and add the following line under sub-section volumes:

===

syslogpublisher:
  …
  volumes:
    - "/opt/superna/eca/conf/syslogpublisher:/opt/superna/rda/conf:ro"
    - "/opt/superna/eca/logs/syslogpublisher:/opt/superna/rda/logs:rw"

====

- Push the configuration to all ECA nodes

ecactl cluster push-config

- During the push, warnings related to permission denied are shown. They also appear every time a push-config or cluster up is performed. They can be ignored as they have no impact. Example of the warning:

/opt/superna/eca/logs/syslogpublisher: Permission denied

- [Optional] To clear that warning, run this command from ECA node 1 to set permissions on that folder:

ecactl cluster exec "ecactl containers exec syslogpublisher chmod 755 logs"

- To start the container now on all ECA nodes:

ecactl cluster exec "ecactl containers up -d syslogpublisher"

- Verify that the NFS export is now populated with audit event data. For example, check this file: /opt/superna/eca/logs/syslogpublisher/6/app.log
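The check above can be scripted per node. The sketch below is one possible way to do it and is not a Superna tool: the function name is hypothetical, and it assumes the folder layout produced by the fileName and filePattern settings in this section. It confirms that app.log exists and is non-empty, then runs gzip's integrity test on any rolled-over archives.

```shell
#!/bin/sh
# Check one node's log folder under the syslogpublisher mount.
# $1 = base directory (for example /opt/superna/eca/logs/syslogpublisher), $2 = node ID.
# Returns non-zero if app.log is missing or empty, or if a rolled archive fails "gzip -t".
check_node_logs() (
    node_dir="$1/$2"
    [ -s "$node_dir/app.log" ] || { echo "missing or empty: $node_dir/app.log" >&2; exit 1; }
    for archive in "$node_dir"/*/app-*.log.gz; do
        [ -e "$archive" ] || continue        # glob did not match: no rolled files yet
        gzip -t "$archive" || exit 1
    done
)

# Possible usage for ECA node 6:
# check_node_logs /opt/superna/eca/logs/syslogpublisher 6 && echo "node 6 OK"
```

A freshly started container may not have rolled any files yet, so the absence of .gz archives is not treated as an error.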

Example log format

{"eventSource":"Isilon","eventTimeStamp":1696231916581,"eventCode":"0x80","path":"\\\\005056a4307405150965f31f79ddf8ce2430\\System\\ifs\\data\\smb02\\folder1\\file2.txt","protocol":"SMB2","server":"node001","clientIP":"xx.xx.xx.xx","userSid":"S-1-5-21-2130525082-3192732430-2112517702-1104","desiredAccess":"0","createDispo":"0","numberOfReads":"1","bytesRead":"2310","bytesWritten":"2640","ntStatus":"0","zone":{"id":"1","name":"System"},"cluster":{"id":"005056a4307405150965f31f79ddf8ce2430","name":"dg-isi73"},"eventExt":{"inode":"4295193704","userId":"1000002","fsId":"1"}}

{"eventSource":"Isilon","eventTimeStamp":1696231917844,"eventCode":"0x4","path":"\\\\005056a4307405150965f31f79ddf8ce2430\\System\\ifs\\data\\smb02\\folder1\\file2.txt","protocol":"SMB2","server":"node001","clientIP":"xx.xx.xx.xx","userSid":"S-1-5-21-2130525082-3192732430-2112517702-1104","desiredAccess":"1180063","createDispo":"1","numberOfReads":"0","bytesRead":"0","bytesWritten":"0","ntStatus":"0","zone":{"id":"1","name":"System"},"cluster":{"id":"005056a4307405150965f31f79ddf8ce2430","name":"dg-isi73"},"eventExt":{"inode":"4295193704","userId":"1000002","fsId":"1"}}

{"eventSource":"Isilon","eventTimeStamp":1696231917905,"eventCode":"0x80","path":"\\\\005056a4307405150965f31f79ddf8ce2430\\System\\ifs\\data\\smb02\\folder1\\file2.txt","protocol":"SMB2","server":"node001","clientIP":"xx.xx.xx.xx","userSid":"S-1-5-21-2130525082-3192732430-2112517702-1104","desiredAccess":"0","createDispo":"0","numberOfReads":"1","bytesRead":"2640","bytesWritten":"2970","ntStatus":"0","zone":{"id":"1","name":"System"},"cluster":{"id":"005056a4307405150965f31f79ddf8ce2430","name":"dg-isi73"},"eventExt":{"inode":"4295193704","userId":"1000002","fsId":"1"}}
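For a quick sanity check of the archived data, the events in a log file can be summarized by event code. This is a minimal sketch that assumes one JSON object per line with an "eventCode" field, as in the example above. The function name is hypothetical, and plain text tools stand in for a real JSON parser.

```shell
#!/bin/sh
# Print "<eventCode> <count>" for every event code found in a log file.
# $1 = path to an uncompressed log file such as app.log.
count_event_codes() (
    grep -o '"eventCode":"[^"]*"' "$1" \
        | cut -d'"' -f4 \
        | LC_ALL=C sort \
        | uniq -c \
        | awk '{ print $2, $1 }'
)

# Possible usage:
# count_event_codes /opt/superna/eca/logs/syslogpublisher/6/app.log
```

For rolled-over files, the same function can be applied after decompressing an archive to a temporary file.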

Data Orchestration (Golden Copy) Dropbox Archive Configuration

- The Dropbox feature scans a folder and moves all the data it finds to an S3 bucket. This allows data to be collected during the day and moved to S3 on a daily basis to free up space on premises.

- The on-premises to cloud Dropbox feature is used

- Example command to configure the dropbox and store data in the AWS S3 service with the Deep Archive storage tier:

- searchctl archivedfolders add --isilon gcsource --folder /ifs/syslogpublisher --accesskey xxxx --secretkey yyyyyy --endpoint s3.<region name>.amazonaws.com --region <region name> --bucket auditarchive --cloudtype aws --delete-from-source --tier DEEP_ARCHIVE --type GC

- Once the folder is created, use the GUI to assign a full schedule on this folder definition to run at midnight daily.

- Test the dropbox job

- searchctl archivedfolders archive --id xxxx --follow

- Verify the /ifs/syslogpublisher is empty after the job completes (It is assumed that all previous steps were configured correctly and data was successfully archived to the NFS export used to store the Audit archive)

- Done
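The final verification can also be done with a file count in place of a visual check. The sketch below counts the regular files left under a directory. The function name is hypothetical. Whether empty sub-folders remain after the job is not stated in this guide, so only files are counted.

```shell
#!/bin/sh
# Print the number of regular files still present under a directory tree.
# $1 = directory to inspect (for example /ifs/syslogpublisher on the cluster).
remaining_files() (
    find "$1" -type f | wc -l | tr -d ' '
)

# Possible usage after the dropbox job completes:
# [ "$(remaining_files /ifs/syslogpublisher)" = "0" ] && echo "source folder has no files left"
```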

© Superna Inc