Golden Copy Overview

Overview

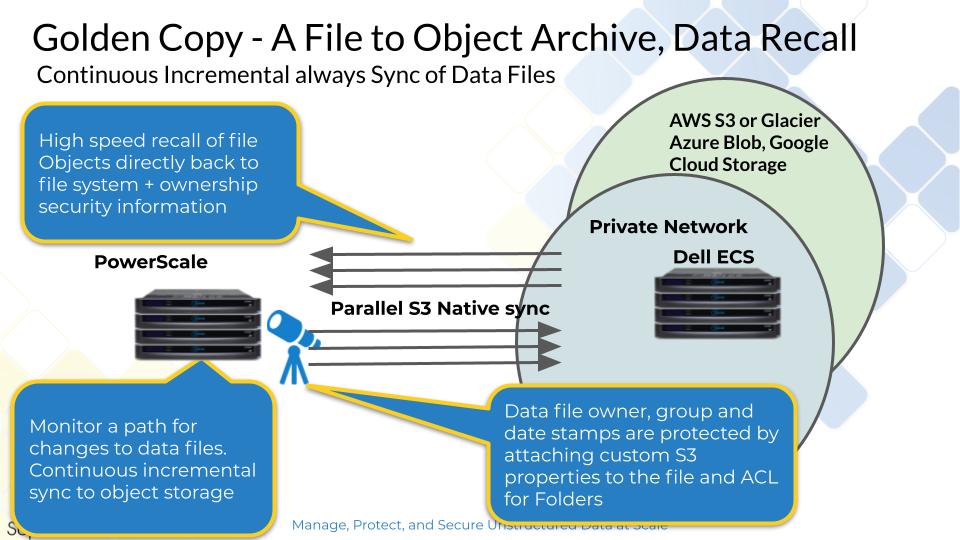

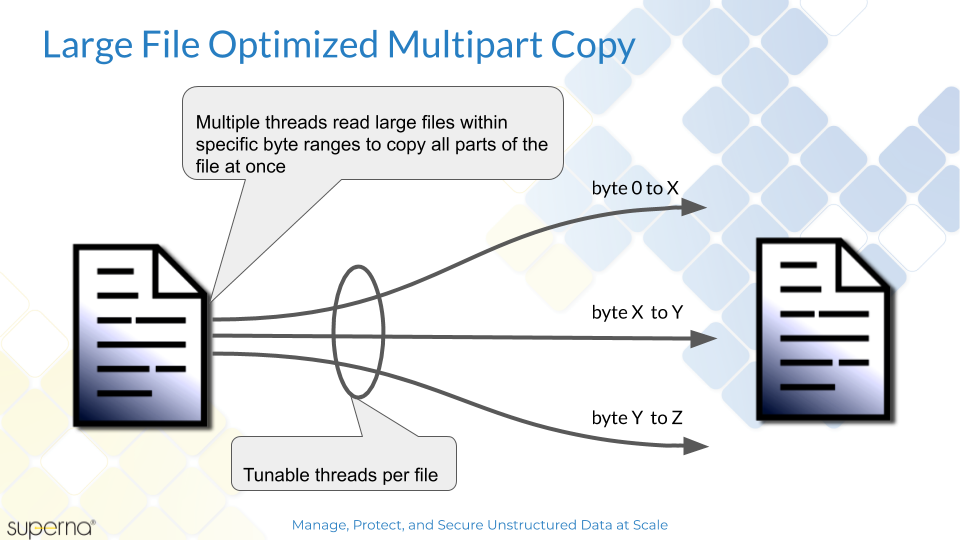

Golden Copy offers high-speed file-to-object copy and sync of files to a native object store over the S3 protocol.

What's New

- The latest release enables:

- OneFS 8.1.2 or later changelist API folder rename support, which ensures renames are synced to the correct path in the object store

- Two-factor login over SSH

- A post-job stats step recomputes the job view stats

- Incremental sync jobs automatically retry failed files

- Coming soon

- QoS for full vs. incremental jobs: prioritizes one over the other. A global setting applies to all folders.

- Release 1.1.9 Overview

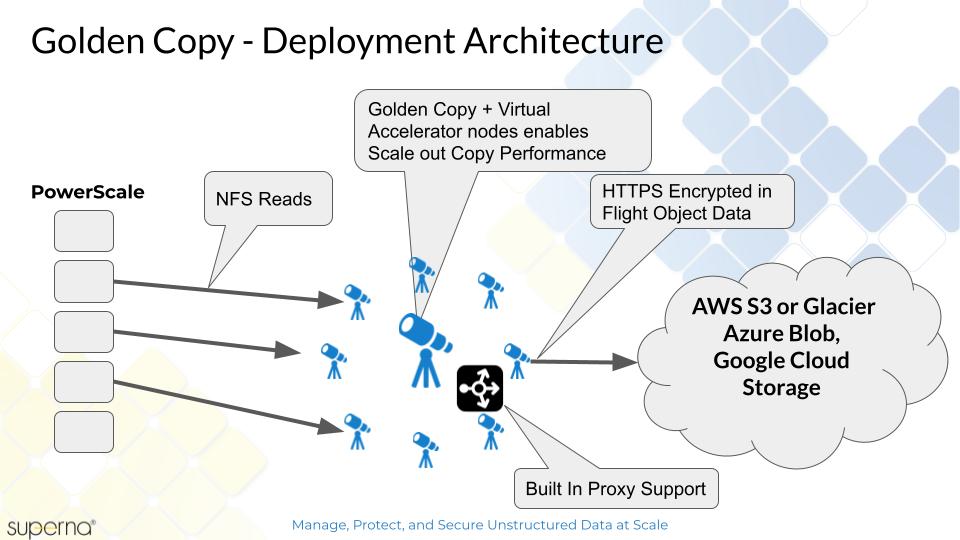

Deployment Diagram

Architecture

Target Use Cases:

Synced Backup Copy of Data with 1 version of a file

- Requires: Golden Copy backup bundle and Pipeline license subscription or Data Orchestration TB subscription

- Only 1 copy or version of a file is kept; file modifications overwrite the previous version of the object with the new object

- Higher probability of recalling data

- Storage tier is nearline, on the assumption that data recall is important and recall time should be as short as possible

- Deleted files are either synced as deleted objects, or deletes are turned off and all deleted data is retained in the S3 storage

- No expiry is set on the S3 target; each version of a file is retained

- Full copy followed by an incremental schedule that uses the Isilon change list API and snapshots for incremental-always backup.

Synced Backup Copy of Data with N versions of a file

- Requires: Golden Copy backup bundle and Pipeline license subscription or Data Orchestration TB subscription

- Same as the above use case but allows S3 bucket versioning to be enabled. Specify how many versions of a file should be available at any one time.

- Example: a daily incremental that stores 7 versions of a file provides a 7-day restore window. With a 7-version S3 bucket policy, the 8th day's backup automatically purges the oldest version of the file.

- NOTE: Each version consumes storage equal to the full size of the file.

- Object retention expiry would not be used in this use case.

- Version-aware restore/recall of data is supported with the backup bundle version of Golden Copy. An older-than or newer-than flag picks which day's version you want to recall. Example: restoring data and versions from Wednesday selects the closest match to the Wednesday backup on a per-object basis.
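The retention and version-selection behaviour described above can be illustrated with a small simulation. This is only a sketch: the function names `retain_newest` and `pick_version` are hypothetical, and the exact matching rule (older-than picks the newest version at or before the target, newer-than picks the oldest version at or after it) is an assumption about how the flag behaves, not documented product logic.

```python
from datetime import datetime
from typing import List, Optional


def retain_newest(versions: List[datetime], keep: int) -> List[datetime]:
    """Return the `keep` newest versions, oldest first (models an N-version policy)."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    return sorted(versions)[-keep:]


def pick_version(
    versions: List[datetime], target: datetime, older_than: bool = True
) -> Optional[datetime]:
    """Pick the version closest to `target` on the requested side.

    older_than=True  -> newest version at or before the target (assumed semantics)
    older_than=False -> oldest version at or after the target (assumed semantics)
    Returns None when no version exists on that side.
    """
    if older_than:
        candidates = [v for v in versions if v <= target]
        return max(candidates) if candidates else None
    candidates = [v for v in versions if v >= target]
    return min(candidates) if candidates else None


# Eight nightly backups at 01:00, 1 to 8 January 2024 (3 January is a Wednesday).
daily = [datetime(2024, 1, day, 1, 0) for day in range(1, 9)]

# With a 7-version policy, the 8th backup pushes out the oldest version.
kept = retain_newest(daily, keep=7)

# Recall the version closest to Wednesday noon, on either side of it.
wednesday_noon = datetime(2024, 1, 3, 12, 0)
before = pick_version(kept, wednesday_noon, older_than=True)
after = pick_version(kept, wednesday_noon, older_than=False)
print(before, after)
```

Because selection runs per object, two files recalled with the same target date can come back from different backup nights if one of them did not change on the night in question.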

One Time copy of data for long term storage or archive

- Requires: Golden Copy backup bundle and Pipeline license subscription or Data Orchestration TB subscription

- Full copy of a path to long term object storage

- Low probability of recalling data

- Optional

- object lock retention applied

- tiering objects to low cost storage tier for long term retention

Data Archiving by User portal or administrator data age policies

- Requires: Golden Copy Archive Engine subscription license

- Capabilities:

- Offers an end-user web portal for self-service archive and recall to any cloud or on-premise object storage

- Maintains SMB level security of user access to the archive

- Enables administrators to create older-than data policies that automatically archive data to cloud storage.

- Benefits:

- Offers AWS Deep Archive S3 tier transparency feature to maximize cost savings of storing archive data. End users can place data into long term archive and recall this data with a simple web interface.

- The AWS storage cost calculator simplifies the business case for archiving data, using AWS pricing API integration to provide real-time, up-to-date pricing estimates for different AWS storage tiers.

Data Shuffle Workflow

- Requires: Golden Copy backup bundle and Pipeline license subscription or Data Orchestration TB subscription

- Capabilities:

- Just-in-time storage solutions allow data to be created in a file system and then moved to the cloud to free up space for on-premise analysis. This requires detecting newly created data and moving it into S3 for further cloud-based analytics or archive.

- Detect newly created files and move them to the cloud based on age criteria of the files

- Benefits:

- Reduce on-premise storage costs for cold data while allowing for cloud analytics or long-term archive of data for compliance retention requirements
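As an illustration of the age criteria mentioned above, the following sketch selects files whose last-modified time is older than a threshold. It shows the general idea only and is not how Golden Copy detects files; the function name `files_older_than` and the use of modification time as the age criterion are assumptions.

```python
import os
import time
from pathlib import Path
from typing import Iterator, Optional


def files_older_than(
    root: str, min_age_days: float, now: Optional[float] = None
) -> Iterator[Path]:
    """Yield files under `root` last modified at least `min_age_days` ago.

    `now` is a Unix timestamp; it defaults to the current time and can be
    passed explicitly to make the selection repeatable.
    """
    now = time.time() if now is None else now
    cutoff = now - min_age_days * 86400  # seconds per day
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.stat().st_mtime <= cutoff:
                yield path
```

A caller would pass the path to scan and the age threshold, for example `list(files_older_than("/some/path", 30))`, then hand the resulting list to whatever moves the data.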

Advanced Hybrid Cloud Workflows

- Requires: Golden Copy backup bundle and Pipeline license subscription or Data Orchestration TB subscription

- Capabilities:

- Hybrid cloud workflows require data to move or sync from on-premise storage to S3 object storage, and S3 object data to be moved or synced to on-premise storage. These data flows need to automate data movement based on schedules or on detection of new or changed data. Bi-directional data movement between S3 and file systems is enabled through the Golden Copy Pipeline feature set.

- End-user portal to on-premise and cloud data repositories. The Golden Copy Cloud Data Mobility feature lets users see cloud data and on-premise data together in a simple WebUI, and securely move or copy data between cloud storage and on-premise SMB file shares.

- Data separation using SMB security with integrated per user profiles allows administrators to enable a self service cloud on ramp to data created or copied to the cloud by business processes.

- Benefits:

- Empower end users to easily complete their data movement tasks while ensuring users do not see data they are not authorized to see in the cloud or on premise.

- Seamlessly combines S3 object security model with SMB share access to provide users a single pane of glass to manage data assets regardless of where the data is stored.

- Unburden storage administrators from data movement requests

- Provides global monitoring of user activity with full auditing and traceability of where corporate data is being moved or copied, when, and by whom.

- Reduce on-premise storage costs for cold data while allowing for cloud analytics or long-term archive of data for compliance retention requirements

Key Features

A PowerScale-integrated tool to sync a copy of one or more paths on PowerScale to an S3 storage bucket.

Uses PowerScale snapshot change list to support fast incremental syncs.

Direct Restore - Restore data from S3 to PowerScale and re-apply file metadata (owner, ACLs, group, date stamps). The PowerScale file structure is restored directly from S3 storage onto the file system without an out-of-band copy.

Redirected recall to a different cluster

Recall to a different folder path

Recall based on modified date range

Protects file metadata automatically with S3 metadata tags (ACLs, owner, group, date stamps, etc.).

MD5 checksum support for data integrity copies

File system to object store audit jobs

Bandwidth rate limiting per copy job

Sync mode or copy mode or both

Delayed deletes to the S3 copy, or no propagation of source deletes to the target storage bucket - Recycle mode takes deleted files found during incremental sync and copies them to a special bucket that stores deleted files. Setting a TTL on this bucket allows automatic purging on a deferred schedule after files are deleted.
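On object stores that support S3 lifecycle rules, one way to set such a TTL on the recycle bucket is an expiration rule. The sketch below only builds the rule document; the helper name `recycle_bucket_lifecycle` and the 30-day value are hypothetical, and whether the TTL is set this way in a given deployment is an assumption, so check what the target store supports.

```python
import json


def recycle_bucket_lifecycle(ttl_days: int) -> dict:
    """Build an S3 lifecycle configuration that expires every object
    in the bucket `ttl_days` days after it was created there."""
    if ttl_days < 1:
        raise ValueError("ttl_days must be at least 1")
    return {
        "Rules": [
            {
                "ID": f"purge-deleted-after-{ttl_days}-days",
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: applies to all objects
                "Expiration": {"Days": ttl_days},
            }
        ]
    }


config = recycle_bucket_lifecycle(30)
print(json.dumps(config, indent=2))

# With boto3 installed and credentials configured, the rule could be applied
# to a bucket (the bucket name here is hypothetical) with:
#   boto3.client("s3").put_bucket_lifecycle_configuration(
#       Bucket="recycle-bucket", LifecycleConfiguration=config)
```

Expiration is counted from when the object lands in the recycle bucket, which matches the intent of purging a fixed period after the file was deleted at the source.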

© Superna Inc