Backup and DR for Audit Database with SyncIQ to a Remote Cluster

- Overview

- How to Use SyncIQ to replicate and protect the Audit Database to a remote PowerScale Cluster

- How to Use the replicated copy of the Audit Database and Failover to the Warm Standby ECA Cluster

Overview

This solution uses SyncIQ to back up the Audit Database to a remote cluster, so the replicated copy serves as both a remote backup and a DR copy.

How to Use SyncIQ to replicate and protect the Audit Database to a remote PowerScale Cluster

- Prerequisites

- Follow the ECA failover guide prerequisites and prepare the DR cluster before following this guide. Ensure that all steps in the prerequisites section are completed.

- Create a SyncIQ policy on the production cluster that replicates the audit database, on a schedule, to a directory under the HDFS root directory on the target DR cluster. Example:

- HDFS root directory: /ifs/data/igls/analyticsdb/

- SyncIQ Policy Source Path: /ifs/data/igls/analyticsdb/eca1/

- SyncIQ Policy Target Path on the remote cluster: /ifs/data/igls/analyticsdb/eca1/

- Recommended policy schedule: once a day at noon, 7 days a week

- Complete the remaining policy configuration: name, description, and target host.

- Run the policy after it is created to copy the database.

- Verify the policy completes successfully.

- Done
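The policy steps above can also be sketched with the OneFS CLI. This is a minimal dry-run sketch, not a definitive procedure: it only prints the commands so they can be reviewed before being run on the production cluster. The policy name, description, and target host are hypothetical placeholders, and the schedule string is an assumption that should be checked against the OneFS CLI reference for your release.

```shell
#!/bin/sh
# Dry run: prints the OneFS CLI commands for the example policy; it does not run them.
# POLICY_NAME, DESCRIPTION and TARGET_HOST are hypothetical placeholders.
POLICY_NAME="auditdb-dr"
DESCRIPTION="Audit database backup and DR copy"
TARGET_HOST="dr-cluster.example.com"
SOURCE_PATH="/ifs/data/igls/analyticsdb/eca1/"   # source path from the example above
TARGET_PATH="/ifs/data/igls/analyticsdb/eca1/"   # target path from the example above
# Assumed schedule string for "once a day at noon"; verify it for your OneFS release.
SCHEDULE="every day at 12:00 PM"

print_policy_commands() {
  # 1. Create the policy (action "sync") with its description and daily schedule.
  printf 'isi sync policies create %s sync %s %s %s --description "%s" --schedule "%s"\n' \
    "$POLICY_NAME" "$SOURCE_PATH" "$TARGET_HOST" "$TARGET_PATH" "$DESCRIPTION" "$SCHEDULE"
  # 2. Run the policy once to make the initial copy of the database.
  printf 'isi sync jobs start %s\n' "$POLICY_NAME"
  # 3. List the job reports to confirm that the run completed successfully.
  printf 'isi sync reports list\n'
}

print_policy_commands
```

Printing the commands rather than executing them keeps the sketch safe to run anywhere; paste the reviewed output into a session on the production cluster once the values match your environment.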

How to Use the replicated copy of the Audit Database and Failover to the Warm Standby ECA Cluster

This procedure assumes an ECA cluster will be deployed at the remote site to use the database copy and monitor the DR cluster after failover. See the ECA disaster recovery guide, which covers how to configure a Warm Standby ECA. All of the DR target cluster prerequisites and the Scenario #2 Warm Standby steps are assumed to be completed. This section covers the steps to use the replicated copy of the database with the Warm Standby ECA cluster.

Procedure to Mount the Audit Database with the Warm Standby ECA:

- Use the Eyeglass SyncIQ policy failover option in DR Assistant to fail over the Audit Database policy. This automates all steps for the SyncIQ policy and configures reverse replication. See the DR Assistant guide.

- After the failover is successful, the DR cluster copy of the audit database will be writeable, and reverse replication will be configured to re-protect the audit database.

- Bring up the Warm Standby ECA cluster at the DR site:

- SSH to the ECA master node (node 1).

- Log in as ecaadmin.

- Run command:

- ecactl cluster up

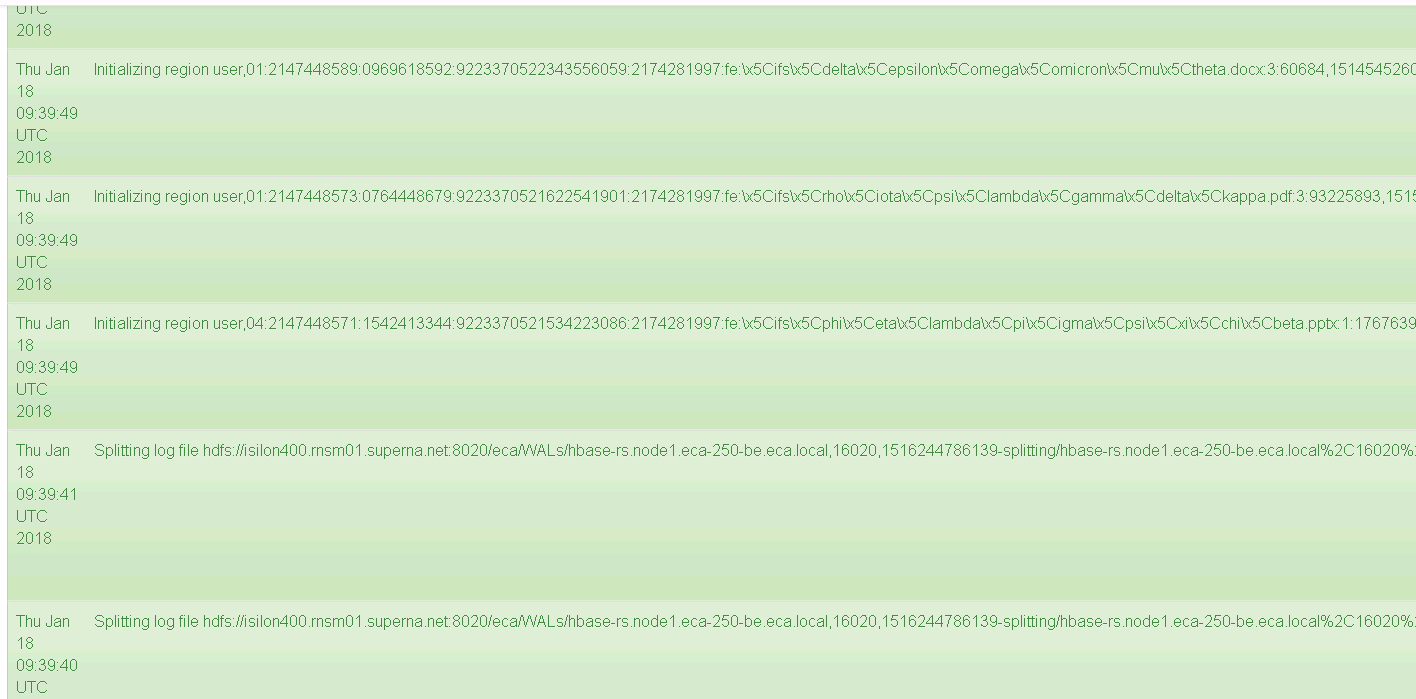

- NOTE: During cluster up, uncommitted transactions are replayed to the database. The replay can be seen in the HBase Region Server GUI logs at http://x.x.x.x:16030 (where x.x.x.x is node 1 of the ECA). The cluster up process is delayed while the database replays these transactions.

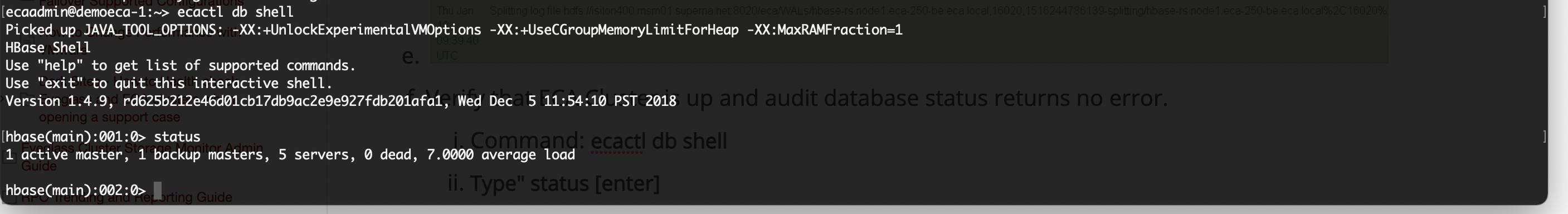

- Verify that the ECA cluster is up and that the audit database status returns no errors.

- Command: ecactl db shell

- Type: status [Enter]

- No error messages should be returned.
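Because cluster up can be delayed by transaction replay, it may help to know when the Region Server GUI on node 1 becomes reachable so the replay logs can be opened. The following is a minimal sketch under stated assumptions: the helper names and the ECA_NODE1 address are hypothetical, and a responding GUI only means the logs can be viewed, not that the replay has finished.

```shell
#!/bin/sh
# ECA_NODE1 is a hypothetical placeholder for the IP of ECA node 1
# (x.x.x.x in the note above).
ECA_NODE1="${ECA_NODE1:-192.0.2.10}"

# Build the Region Server GUI address from the node IP (port 16030, as in the note).
region_server_url() {
  printf 'http://%s:16030\n' "$1"
}

# Poll a URL until it answers or the attempts run out.
# Returns 0 once curl gets a response without an HTTP error status, 1 otherwise.
wait_for_url() {
  url=$1
  attempts=$2
  delay=$3
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example (not run here): try every 10 seconds for up to 60 attempts.
# wait_for_url "$(region_server_url "$ECA_NODE1")" 60 10 && echo "Region Server GUI is reachable"
```

After the GUI responds, continue with the verification steps above (ecactl db shell, then status) to confirm that no errors are returned.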